Does this mean that 780M SLI will still be the best mobile GPU setup around? When is the 900 series out?

-

-

900 series *SHOULD* be 20nm Maxwell, which *SHOULD* nearly double the performance of the 6xx series GPUs.

-

When are they expected out? Q1 2015?

-

Thanks HTWingNut. Last question: I'm going to get a Clevo in February. Which GPU would be best? I would have enough for any single GPU; SLI might be too much.

-

I think June 2014. That will give them many months to mass-produce 20nm GPUs.

DIGITIMES archive & research -

I thought that most of that production was being cannibalized by Apple, and nVidia wouldn't see it until at least Q4 2014.

880M then. It will be king of the heap at the time. -

I have no idea how much of TSMC's production capacity Apple will occupy. Apple used to produce its 32nm A6 chips at Samsung, but they will be making their A8 chips exclusively at TSMC.

We all know how many iPads/iPhones Apple sells each year, which is waaaay more than all Nvidia GPUs combined. So they will undoubtedly need a hefty amount of 20nm production per month. But then again, Nvidia has used TSMC for many, many years while Apple is a newcomer, so they can't just barge right into the factory and walk all over Nvidia, Qualcomm and the rest. -

No, but if they have the bulk of the business, they will certainly have a lot of influence.

TSMC on track to Produce Apple's 2014 20nm A8 Processor - Patently Apple

TSMC poised to be leading 20nm chip supplier: analysts - Taipei Times

50,000 wafers per quarter should be pretty significant, and it says 165,000 A8 chips go to Apple. How many (high-yield) chips fit on a wafer? 200 maybe? So perhaps it is a possibility. But why release the 800M series in February and a new architecture in June? -

Good links there, HT.

One must take into consideration that the SoCs Apple produces are much smaller than the chips Nvidia designs.

Take the A6 chip, for example. Its die is 95 mm². The GTX 680M is 294 mm², about 3 times as big. So Apple can fit a lot more A8 chips on a wafer than Nvidia can with their Maxwell chips, which means Apple might not need to produce so many wafers after all.
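To put rough numbers on the die-size point, here is a sketch using the common gross dies-per-wafer approximation DPW ≈ π(d/2)²/S - πd/√(2S), where d is the wafer diameter and S the die area. The 300mm wafer size is an assumption, and the formula ignores yield, scribe lines and edge exclusion, so real counts would be lower; the die areas are the 95 mm² and 294 mm² figures quoted above. The function name is just illustrative.

```python
import math


def gross_dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Common gross dies-per-wafer approximation.

    Ignores yield, scribe lines and edge exclusion (assumptions for this sketch).
    """
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    # The second term approximates the partial dies lost around the wafer edge.
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(wafer_area / die_area_mm2 - edge_loss)


A6_AREA = 95.0        # mm^2, figure quoted in the thread
GTX680M_AREA = 294.0  # mm^2, figure quoted in the thread
WAFER_MM = 300.0      # assumed wafer diameter

a6 = gross_dies_per_wafer(WAFER_MM, A6_AREA)
gk104 = gross_dies_per_wafer(WAFER_MM, GTX680M_AREA)
print(f"Area ratio: {GTX680M_AREA / A6_AREA:.2f}x")
print(f"A6-sized dies per wafer: {a6}")
print(f"GTX 680M-sized dies per wafer: {gk104}")
print(f"Die-count ratio: {a6 / gk104:.2f}x")
```

Under these assumptions the die-count ratio comes out a bit above the ~3x area ratio, because big dies lose proportionally more area at the round wafer edge. The GTX 680M-sized count also lands close to the "200 maybe?" guess earlier in the thread.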

In your link they said

6.5%. I'm sure Apple has gotten a good deal on production because of the big quantity, which pushes revenue below what it ideally should be, but 6.5% doesn't sound like they are "taking over", does it?

TSMC is moving to 450mm wafers with 20nm production, I read somewhere. I know GK104 is 17.2mm x 17.2mm. A calculator shows roughly 460 dies per wafer. But it will be more with 20nm since die size will go down, so let's say 600 dies/wafer. Say Maxwell is 3 times bigger than A8: that means 1800 A8 dies/wafer. Say Apple uses only 20% of those 50,000 wafers, i.e. 10,000 wafers. 10,000 wafers x 1,800 dies = 18 million A8 chips/quarter.
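The back-of-the-envelope estimate above can be written out as a small script. Every input is the poster's assumption (600 Maxwell-sized dies per wafer after the shrink, A8 dies 3 times smaller, Apple taking 20% of 50,000 wafers per quarter), and the function name is hypothetical. Like the original estimate, it treats "3 times smaller" as exactly 3 times as many dies and ignores edge loss and yield.

```python
def a8_chips_per_quarter(total_wafers: int, apple_share_pct: int,
                         maxwell_dies_per_wafer: int,
                         size_ratio: int) -> tuple[int, int]:
    """Return (A8 chips per quarter, wafers left for everyone else).

    Assumes dies per wafer scale linearly with die size and ignores yield.
    """
    # Integer percentage avoids floating-point rounding on the wafer split.
    apple_wafers = total_wafers * apple_share_pct // 100
    a8_dies_per_wafer = maxwell_dies_per_wafer * size_ratio
    return apple_wafers * a8_dies_per_wafer, total_wafers - apple_wafers


chips, remaining = a8_chips_per_quarter(50_000, 20, 600, 3)
print(f"{chips:,} A8 chips per quarter, {remaining:,} wafers left over")
```

Changing `apple_share_pct` is an easy way to see how sensitive the "leaves 40,000 wafers for the rest" conclusion is to Apple's actual share.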

Is that a reasonable amount? That leaves 40,000 wafers for the rest. Doesn't sound too bad.

-

AWWW YESSSS :thumbsup:

Maxwell gives us a glimpse of the Project Denver debacle | SemiAccurate -

Great info, Cloudfire! Is there any way to find out the voltage on the 880M? I mean, the stock 780M ran at 1.012 V, whereas svl7's vBIOS allowed 850 MHz at 1.0 V. So 993 MHz on a 780M could be achieved at around 1.025 V. If the 880M stays lower than that, owners might hit 1.1 GHz on the core, which is not that bad :) Of course, I'm not talking about power consumption and temperatures here!

-

So you think there will be an 890M built on Maxwell?

If this comes out... tough decisions... buy a new house... or take kids to Disney... or buy a new 890m SLI rig... lulz. -

880MX = Maxwell

-

So then the performance will only be, like, what, 10% faster than the 880M? Pffft, I am disappoint.

-

I have no idea. Got to check vbios to find that out.

But I know the GTX 780 Ti is made on the B1 stepping while the GTX Titan is A1. The GTX Titan runs 1019 MHz at 1.2 V, and the GTX 780 Ti runs 1019 MHz at 1.187 V. So it's reasonable to assume the GTX 880M will also run at a lower voltage than the GTX 780M.

Maybe it will run at 1.012 V while doing 1 GHz, thanks to B1.

I'm pretty sure it will beat an overclocked 780M, for those who are looking to take the top spot.

Screw the family. GTX 890M SLI FTW

Yeah I think so too.

-

Mine runs stable at +100 core and +300 vRAM with no overvolting and the default vBIOS. With some overvolting, +183 on the core should be very doable. I guess the key question is whether nVidia deliberately held back on the 780M, or whether the 880M is merely a higher-binned 780M that has been pushed to its limits.

-

If you used the unlocked vBIOS, 954 MHz would only be +104 on the core.

Don't bet everything on overvolting, though. For example, I can overvolt all the way to 1.1 V and get the cards to run at 1050/3100 with no problem, BUT the 3DMark 11 score takes a dip: GPU usage drops from the high 90s to the high 80s, with temperatures pushing 90. Overall, I find the best results for me are achieved with no OV.

-

Remember that unstable VRAM clocks will also cause benchmark scores to drop. GDDR5 is crash-resistant, but once it's pushed too hard, error detection and retransmission kick in, reducing performance. For example, the 576 Heaven 4.0 score in my sig instantly drops by 100 points with my memory clock at 2260 MHz, even though the benchmark completes just fine with no visual anomalies.
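A toy model of why a higher but error-prone memory clock can score lower: GDDR5 protects transfers with a CRC-based error detection code, and the memory controller can re-send a burst when the check fails. If each burst independently fails with probability p and is retried until it succeeds, the expected number of sends is 1/(1 - p), so useful bandwidth scales roughly with clock x (1 - p). The 2000 MHz "stable" clock and both error rates below are made-up placeholders, not measurements, and the model ignores the extra latency of each retry, so real losses would likely be larger.

```python
def effective_bandwidth(clock_mhz: float, error_rate: float) -> float:
    """Useful transfers per unit time, in arbitrary units proportional to clock.

    Each burst fails its check with probability error_rate and is re-sent
    until it succeeds, so expected sends per burst = 1 / (1 - error_rate).
    """
    if not 0.0 <= error_rate < 1.0:
        raise ValueError("error_rate must be in [0, 1)")
    expected_sends = 1.0 / (1.0 - error_rate)
    return clock_mhz / expected_sends


# Hypothetical numbers: a stable clock vs. a higher clock that triggers retries.
stable = effective_bandwidth(2000, 0.0)
pushed = effective_bandwidth(2260, 0.15)
print(f"stable: {stable:.0f}, pushed: {pushed:.0f}")
```

Under this model a clock bump only pays off while the retry rate stays below 1 - (old clock / new clock), which is why backing the memory off a little can raise the score.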

-

Robbo99999 Notebook Prophet

Do you know why it does that, Sponge? Is it because it's reaching its TDP limits or temperature limits, or, as octiceps mentioned, maybe the memory is overclocked too far? I thought that if TDP or temperature limits were reached, clocks would just drop to a lower state, not remain at the P0 state and show lower GPU usage. I've heard of this error detection characteristic that octiceps talks about before, and its relation to overclocking. -

Meaker@Sager Company Representative

No, the difference would most likely be the same as 675M -> 680M. -

It just won't be like 580M -> 680M, is all I'm getting at.

-

wait, the logic is killing me?

675m =/= 580m? yes no what errr, my brain exploded. -

DAMMIT.

I'm going to the US from mid-January to the 2nd of February. I would have totally bought one of these with an 880M while out there if they were available. Guess that won't be happening, as I doubt a February launch means February 1st... -

Well this is certainly interesting if true.

GM107 will be the first Maxwell chip out and is supposed to be revealed at CES next week. It will be 28nm.

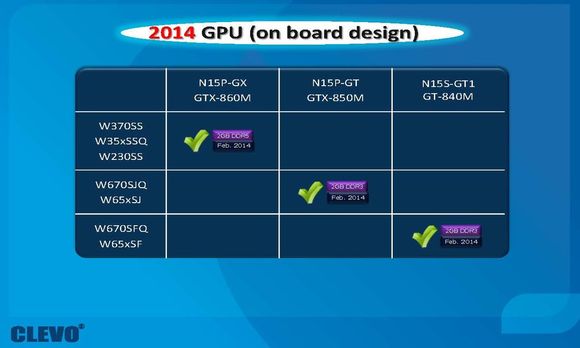

Going by the previous Kepler cards, we saw GK107 out first (GT 640M/650M/GTX 660M). So this means that the GT 840M/850M and GTX 860M might actually be Maxwell (GM107). I doubt it, since the Clevo roadmap listed them as N15E, but you never know with Nvidia.

NVIDIA Maxwell details to be revealed at CES 2014? | VideoCardz.com -

Robbo99999 Notebook Prophet

885M or 890M Maxwell chip, anyone? (Not referring to GM107, though.) (900 series for the die-shrink version!)

-

Yeap, or they give us OC'd Kepler and do a refresh using the MX ref for the new cards.

-

Hate to break it to you, but it's unlikely. Mobile GPUs are always based on the previous generation of desktop graphics. There's a slim chance, but I wouldn't count on it. 880M/885M or MX will probably be on 28nm... sucks.

-

Robbo99999 Notebook Prophet

Haha, yes, it's fun to postulate though! I don't think I'll be upgrading till Volta anyway; I only recently got my 670MX for a bargain! -

Specs for GM107 popped up today.

As always take it with a grain of salt.

GM107:

- 1152 CUDA cores
- 96 TMUs
- 16 ROPs
- 128-bit memory bus
- 2GB GDDR3/GDDR5

It fits with the Clevo roadmap (2GB and 128-bit bus).

-

As a follow up, Nvidia will have a Press conference at CES Sunday January 5th.

Exciting

NVIDIA Newsroom - Releases - NVIDIA Announces 2014 CES Press Conference, Live Webcast Coverage, Dedicated CES Newsroom

-

Hopefully in a few weeks I'll wake up to find the 885M is based on 20nm, and then I can finally spend $2500 on a pair of graphics cards, mmm yeah

-

20nm? Hah, one can always wish.

I think we will have to wait until at least June for 20nm flagships. 28nm Maxwell, however? Who knows... -

I wouldn't mind if it was based on 28nm, as long as we see some drastic performance improvement over the 780M. A mere 10% isn't something I'd want; that can be covered by overclocking. Something like an increase in core configuration is what people need, not some dumb tiny core/memory clock increment. If they manage to fit more units on the same 28nm process, it'll probably just run hotter and consume more power. Dunno how they are going to do this.

-

Yeah, I'm thinking the same. If it is 20%+ better than the GTX 780M and consumes less or the same power, I think a lot of people, perhaps me included, will buy it. It depends a little on how far away 20nm is, too. I can wait 3-4 months if that's what it takes. If it's 6+ months, that 28nm Maxwell is definitely going inside my machine.

--------------------------------------------------------------------------------------------------------------------------------

Just remember, guys: tomorrow @ 8pm PT the presentation will be broadcast here:

http://blogs.nvidia.com/ -

ThePerfectStorm Notebook Deity

Totally agree. If they give us a big enough performance jump, I'll buy the 880M. But I am definitely not waiting till June - so, unfortunately, I will probably end up with a rebranded card in my next laptop. Both nVidia and ATI have seriously f**ked up with the rebranding crap. -

28nm = Heat = Useless = Turd = Business

-

http://s472165864.onlinehome.fr/support/pdf/Specifications/2013WW43 Clevo Roadmap.pdf

Same here: new cards are always rebrands, and they introduce the real ones later. For decent performance gains it will not matter much. What does matter, IMO, is whether the new cards are more power- and thermally efficient. If not, it's not going to help notebook tech. Kepler was good, but Haswell (MQs) was a bloody disappointment! -

"As a follow up, Nvidia will have a Press conference at CES Sunday January 5th."

I hope we hear something. Not holding my breath though.

"At CES, NVIDIA will showcase new NVIDIA® Tegra® mobile technologies, gaming innovations and advanced automotive display technologies" -

They'll probably announce Maxwell and their plans for G-SYNC during 2014. It would be awesome if Alienware was the first to offer G-SYNC in their laptop lineup.

-

They may not even launch a GPU tomorrow, just give a presentation about Maxwell and what's new, then announce that they will roll out the first Maxwells in a few months.

Or they could screw us over and do a lame Tegra presentation lol.

I'd love to see G-SYNC in the upcoming notebooks as well. It would be a really nice perk, and I don't think the PCB with the controller takes much room, so it could easily be fitted inside a notebook. -

G-Sync will likely only be in non-Optimus notebooks, considering it would just be another power-consuming chip, which works against the benefit that Optimus provides.

-

ThePerfectStorm Notebook Deity

I wonder if they would implement a way to switch G-Sync on and off. If they do, no need to sacrifice battery life when not gaming. -

Great, just what we need, another chip to be switchable and go wrong.

-

-

So it is basically a 780M with slightly faster clocks, and 8GB of vRAM (what a waste of money IMHO).

-

I think they are counting on people thinking "more is better"...

Let's see how high the 8GB version clocks compared to the 4GB one...

Hope these pics help. I was actually surprised to see people here still guessing after we told them 3 weeks ago that the GTX880 is essentially just a K5100M with higher clocks.

Clevo notebooks with 800M series coming out February 2014

Discussion in 'Gaming (Software and Graphics Cards)' started by Cloudfire, Dec 11, 2013.
