-

-

What did I say that contradicted AnandTech? Some models of the GT 750M are GK107, but the GT 650M does not support GPU Boost 2.0; only the 700M series does. And the GT 750M apparently also comes with GK106, which was previously only used for the GTX 670MX.

Why should GK106 mean higher power consumption? It could just as easily mean lower power consumption.

GK208 is rubbish. DDR3 and 64bit memory bus lol. But then again, it is probably dirt cheap and will be of good use in Ultrabooks like you say because it will compete against IGPs which it will crush. -

That article doesn't tell the whole story... the vBIOS files I have here don't match that list. One of the cards listed as GK208 is GK107, and another listed as GK107 is GK208...

-

Something doesn't add up..

Interesting thread

-

28nm Fermi??? That chart is more than a little off..

-

What's wrong with 28nm fermi?

-

You are welcome to add it to the thread, Prema. I'm guessing it doesn't matter since it's a 64-bit bus anyway?

I guess this could be what Prema is talking about? GT 720M and 710M are GK208 since they are 64-bit. Wouldn't shock me if they were Fermi though, since we had some of the 600M series as Fermi. -

Nevermind, I thought Fermi was exclusive with 40nm silicon. I was wrong

-

failwheeldrive Notebook Deity

I was wondering the same thing, didn't know there were 28nm fermi cards lol.

And seriously, when are they going to drop the fermi cards from their lineup? Wouldn't surprise me to still see fermi rebrands when Maxwell is out

-

The card(s) are still based off of the same technology as previous legacy Nvidia with just higher clock speeds. The newer versions with new architecture are due out next year. The good news is it will drop the price on the 680m cards that were priced 250 dollars more than the 7970m. It is irrelevant to me though, because I bought the AMD 7970m, which had the best price to performance ratio of the two. The driver issues were taken out due to the Sager 9370 not having Enduro capabilities. Although, Nvidia may be more tempting if you are headed into the tablet/smartphone realm.

-

Well for example those two:

0FE1 >>> NVIDIA GeForce GT 730M >>> GK107

1292 >>> NVIDIA GeForce GT 740M >>> GK208 -

Man this is confusing. Now we have 384 cores on GK106, GK107 and GK208. Earlier it was only for GK107.

There is something strange about the GT 740M as GK208 though: it would have a 128-bit bus while the rest have 64-bit. Are you sure?

EDIT: GT 740M comes with both GK208 and GK107

http://videocardz.com/40819/nvidia-preparing-geforce-gtx-titan-le -

-

Karamazovmm Overthinking? Always!

There are over 19k ISOs. Which one are you talking about, and how does it relate to what we are discussing?

I don't think you understand gross exaggeration for proving a point, which is also called proof by absurdity.

From an engineering perspective, of course they are doing fine. What I don't like is having a notebook with the SAME GPU as another one that gives me a very different performance outcome while still within its rated settings. This is absurd. While we have had different GPUs with the same name (looking at you, 555M), the difference in performance was usually not that large, though the 555M may be too drastic an example, since there was a good gap from the best to the worst. And guess what, we complained about that.

For example, Apple is using a higher standard of construction for its campus project than what is required or accepted. Both would perform the same duties, and using the standard would immensely reduce the cost of the building, though it would impair the designed fit and finish.

I don't think I can make it any clearer to you. -

10char........

-

Karamazovmm Overthinking? Always!

Again, there are 19,500 ISOs. Which one are we talking about, and how does it matter here?

Proof by absurdity is used in math.

You truly are no philosopher, that much is clear.

From an engineering perspective, of course they are doing fine. What I don't like is having a notebook with the SAME GPU as another one that gives me a very different performance outcome while still within its rated settings. This is absurd. While we have had different GPUs with the same name (looking at you, 555M), the difference in performance was usually not that large, though the 555M may be too drastic an example, since there was a good gap from the best to the worst. And guess what, we complained about that. -

10char........

-

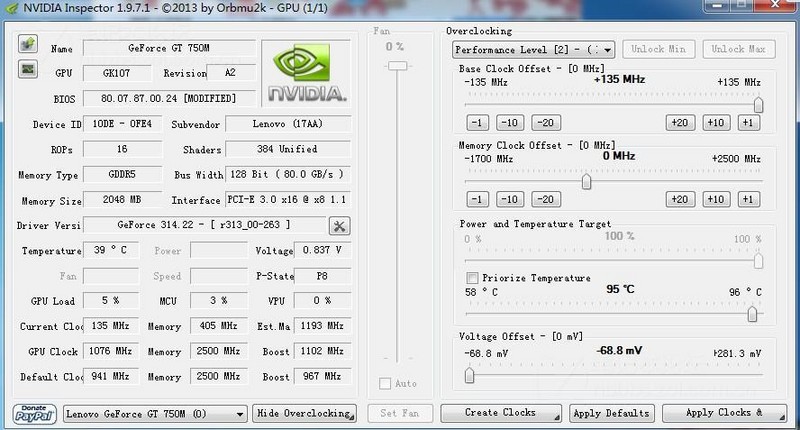

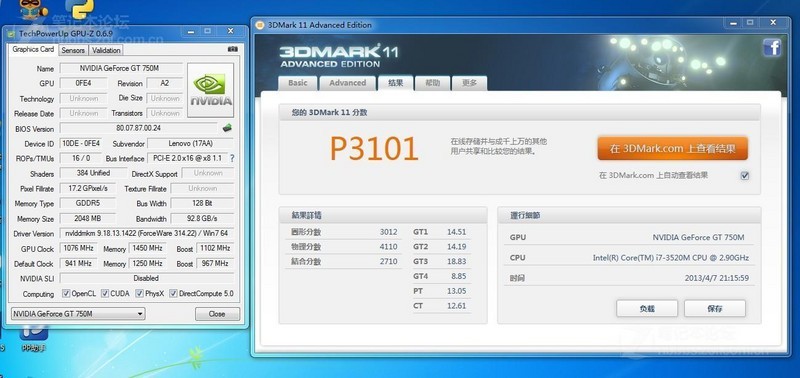

People have been playing a little with the GT 750M.

This is from Lenovo Y400

The GT 750M has been overclocked from 967MHz (stock) to 1102MHz. Memory was overclocked from 1250MHz to 1450MHz (insanely high). The overclocker set the max temp to 95C (a limit for GPU Boost 2.0).

3DMark11 with this overclock: GPU score 3012. The GTX 670M scores 2700 and the GTX 675M scores 3130, so it's only about 4% behind a GTX 675M.
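For anyone who wants to check the gap themselves, here is a quick sketch using only the three GPU scores quoted in this post. The helper name `relative_gap` is just something made up for illustration.

```python
# 3DMark11 GPU scores quoted in the post above.
scores = {
    "GT 750M (overclocked)": 3012,
    "GTX 670M": 2700,
    "GTX 675M": 3130,
}


def relative_gap(score, reference):
    """Return how far `score` sits from `reference`, as a percentage of `reference`."""
    return (score - reference) / reference * 100.0


oc = scores["GT 750M (overclocked)"]
for name in ("GTX 670M", "GTX 675M"):
    # Positive means the overclocked GT 750M is ahead, negative means behind.
    print(f"vs {name}: {relative_gap(oc, scores[name]):+.1f}%")
```

With these numbers the overclocked card comes out roughly 12% ahead of the GTX 670M and a little under 4% behind the GTX 675M.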

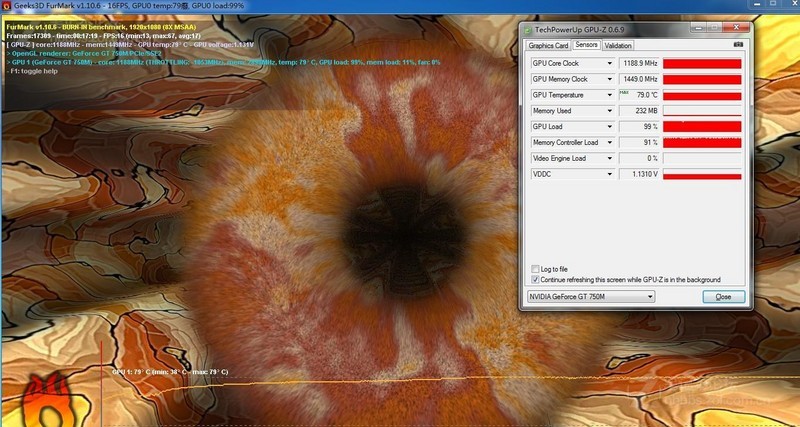

As mentioned earlier, the 700M series comes with GPU Boost 2.0, meaning it will clock even higher if temperatures allow it. It turns out GPU Boost clocks the GPU all the way up to 1190MHz (LOL) during a Furmark run. Max temperature was 79C during that run, which lasted 17 minutes. On Furmark!!!

Source: http://translate.google.no/translate?sl=zh-CN&tl=en&js=n&prev=_t&hl=no&ie=UTF-8&eotf=1&u=http%3A%2F%2Fideapad.zol.com.cn%2F61%2F160_603542.html -

Very impressive results.

-

This is the 'old' GT650M with less voltage...it's the same old stuff over and over again with a new name and vBIOS...

-

Yup it does seem like the 750M runs on higher voltage than GT 650M.

GT 650M runs @ 1.0120V on Furmark while GT 750M runs @ 1.1310V.

I'm very curious to see what clocks we can get with the GTX 780M with GPU Boost 2.0. 900MHz when GPU Boost is active on 1536 cores, maybe? If so, we may be talking 35-40% faster than the 680M. -
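A rough back-of-the-envelope sketch of that guess, using cores x clock as a crude proxy for peak shader throughput. The 780M figures are the speculation from this post; the GTX 680M figures (1344 cores at 720MHz) are an assumption brought in from outside this thread, so swap in your own numbers if you disagree.

```python
def shader_throughput(cores, clock_mhz):
    """Cores x clock: a crude proxy for peak shader throughput, nothing more."""
    return cores * clock_mhz


# Speculated GTX 780M figures from the post above.
gtx_780m = shader_throughput(cores=1536, clock_mhz=900)

# ASSUMPTION: GTX 680M at 1344 cores / 720 MHz (not stated anywhere in this thread).
gtx_680m = shader_throughput(cores=1344, clock_mhz=720)

uplift = (gtx_780m / gtx_680m - 1) * 100
print(f"Theoretical uplift: {uplift:.1f}%")
```

Under those assumptions the theoretical ceiling lands a bit above 40%. Real games rarely scale linearly with cores and clocks, and memory bandwidth is ignored here, so a 35-40% guess is in the right ballpark.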

I am preparing by getting a 330W PSU for my single GPU Clevo...

-

Sounds a little overboard, but at least you're prepared for anything

Isn't that what they use on 680M SLI configurations from Dell? -

They use two of those on current SLI machines

...have an XM, too so things add up fast with 1.xv OV & 3D screen...

They just silently upgraded the stock Clevo PSU for single GPU systems from 220W to 240W... -

I know it's too early to ask, but the 800M series is expected to launch in Q2 2014, right?

-

failwheeldrive Notebook Deity

Sometime in 2014, but no one knows exactly when. It'll probably be sometime between March and July. -

Karamazovmm Overthinking? Always!

I'm done talking when I'm done talking.

Aside from that, take a look at your condescending attitude. You basically never constructed anything that passes as an argument, just some throwaway remarks about things that I supposedly don't know and ISOs that you don't relate to anything.

And now you claim that I'm angry?

This is really rich, you actually made my day -

Good news guys! Lenovo just released their Y500/Y400 series with a 750M!

Affordable Entertainment Laptops | IdeaPad Y Series | Lenovo | (US) -

10char.......

-

Karamazovmm Overthinking? Always!

1. ISO 9001 has nothing to do with that

2. and 3. It's simple: in a desktop environment, those shenanigans about average TDP and max TDP lose a lot of their significance. However, on a thermally constrained system with such a large variation in performance, we have not only a valid point but also the right to receive what we paid for. I don't think you remember how the 555M case went, and how we persistently tried to steer consumers away from the lower-performing cards and toward the high-performing ones.

It's simple: you don't make a product with such a variation and market it in the same line as the much higher-performing one. Seriously, 30% better performance is nothing to sneeze at.

4. Never say that again, it's shameful

Now I'm a fanboy? And a fanboy of what? I don't think you saw me bashing all companies left and right for their mistakes or flaws. I like quality and delivery. Now the fanboy argument really illustrates how you don't know how to argue, how you consistently swerve to the poster and not the post, and how you don't even deconstruct anything. You just say I am wrong, throw in something random that doesn't matter, and it's done

Also, have you noticed that the TDP of the 650 is 80W, while the TDP of the 750M is 33W and the 650M is 45W? There is no magic there, just simple P4 magic -

10char........

-

Karamazovmm Overthinking? Always!

You throw a managerial qualification at me as proof of what, exactly? I can only guess that you are unable to read or understand what I have been explaining to you. Nor do you have any idea of what counts as a coherent discussion. Again you resort to attacking the poster instead of trying to throw me some numbers or disprove me on some engineering concept that I missed or don't know.

P4 is Pentium 4. I guess you also don't know what Intel did there

Now, what is it that I don't understand?

That in a thermally constrained scenario, which notebooks are, performance will vary by far more than Nvidia's 10% variation?

You actually didn't know that Nvidia uses a variation of 10%, now based on 3DMark Vantage, to put different GPUs in the same family, did you?

That a card that works only at its nominal clocks will be at a clear and giant disadvantage compared to the same card working with GPU Boost 2.0?

And that this difference, according to the results we have from overclocked 650M and 660M cards, is going to be more than the 10% that Nvidia actually specifies as its standard?

Thus using a mainstream TDP to lower that same TDP and gain more market, while providing the same marketing for products that obviously won't perform the same, and that will differ widely in what they actually deliver?

My advice is to tone it down and go read a little bit -

10char.......

-

There is some confusing information on the web.

Some sites like notebookcheck say that the entire 700M family is based on GK10X chips and not GK11X ones.

Also, what new computing capabilities can we see on the 700M series?

Will 760M / 770M / 780M support CUDA 5 functionalities such as dynamic parallelism?? -

There will most likely only be one new chip from the 700M series: GK208

The rest will be based on the existing GK106/107/104.

Good news is that GTX 780M will most likely be a FULL GK104 this time with all cores enabled.

GTX 760M and 770M will be GK106 as shown by the latest HWinfo.

These chips already exist. The change compared to the 600 series is GPU Boost 2.0, which has been shown many times in this thread. A really nice feature. Nvidia has hopefully improved the architecture too, which I think they have, since the GT 750M is 33W (the 650M is 45W) despite having higher clocks than the GT 650M. If Techpowerup is right, that is.

Computing capabilities are still crippled since it's Kepler with very few FP32/FP64 cores. I don't know enough to talk about CUDA 5, so maybe someone else could fill you in on that. -

Karamazovmm Overthinking? Always!

It's a losing battle.

ISO 9001 has absolutely nothing to do with what we are discussing. Nothing at all.

I guess I know what the problem is: you are either too young or too old, and I'm not talking about real age, but a mental one. It happens to everyone, it's kind of like a normal distribution curve.

You try to maintain a previous point that has nothing to do with the discussion, since the standards of production and the managerial quality set within ISO 9001 have never come up in this discussion. It was always about the acceptable variation of performance from Nvidia's standpoint, and the variation that will present itself to the consumer if a card can't enable GPU Boost 2.0 because it is thermally constrained

I can't make this any clearer

GPU Boost 2.0 against a normally clocked card is going to present a large gap in performance

This is what we are discussing, got that? Now if you want to talk about ISO and other stupid things, go do that with someone else -

Hey mushroom man and manager man. If you're so big and knowledgeable about life, then why are you two grown men arguing about a piece of silicon wedged into a plastic chassis?

Get over it.

Computing is fun, fighting is usually pointless unless it's genuinely worth fighting for -

10char.....

-

Karamazovmm Overthinking? Always!

Get a tougher skin or learn how to treat others. I treated you with the same respect I treat everyone with, until I started to complain about how you debate things. In all those complaints there was some underhanded jab.

It's simple, learn to debate. You don't focus on what I'm saying, you focus on whatever lets you swerve the discussion, which would be fine if those things were related to the discussion at all.

It's pretty clear to me that people like GPU Boost 2.0, which I think is Intel's doing: there was so much bad-mouthing when they implemented Turbo Boost that people now don't care and just accept it. However, Turbo Boost and AMD's version of it stay within the TDP of the product, while Nvidia created medium and max TDP ratings.

Again, this has nothing to do with a managerial quality standard when the problem is obviously somewhere else.

I don't really care what your job is. I'm 28 and I have worked in diverse fields: IBM (I was in unit design), Pilkington, as a broker, and some other areas. I have 2 degrees under my belt, in international relations and economics, and I'm going to get my 3rd, in computer science, this year. Given that I think I'm finally prepared, I'm going for a master's in CS, and probably a PhD after that (we do have a family thing going about PhDs). Now that I've said what I do, does it mean that you know me? Does it mean that you actually have a grasp on what I stand for? What my beliefs are? What I'm a fanboy of? Nope.

Time and time again you use top-down arguments, you try to deliver jabs about being more knowledgeable, and you lean on arguments from authority.

There is also a report button. My advice is: learn to debate and don't fall into those fallacies that you employ. It might work on students, but it doesn't work on me; almost 10 years in university makes you grow a tougher skin. -

Christ are there any mods on notebook review ?

FFS -

Any moderator, can you please remove my posts that are edited to "10char......" from this thread? nothing constructive was made during those arguments and should be removed from the forum (I erased them but they are looking ugly right now as if I am spamming the forum), thanks and sorry for any trouble!

-

Still trying to figure out difference between 740m and 750m...

-

The specs in the OP are wrong for the GT 740M.

GT 740M: 384 @ Up to 895MHz + GPU Boost. DDR3 @ 900MHz

GT 750M: 384 @ Up to 967MHz + GPU Boost. DDR3/GDDR5 @ Up to 1250MHz.

Here is a recent review of a notebook with 740M btw

GT 740M:

![[IMG]](images/storyImages/asus-k56cb-gpu-z-nvidia-gt-740m.jpg)

Tested with a weak CPU

GT 750M

![[IMG]](images/storyImages/7d2kTMe.jpg)

-

Meaker@Sager Company Representative

See GT640M and GT650M, will be the same relationship. -

Right but 640m specs show 625MHz & DDR3 or GDDR5 / 650m 900MHz & DDR3 or GDDR5

740m specs show 980MHz with DDR3/GDDR5

750m specs show 967MHz with DDR3/GDDR5

If it's anything like 640m/650m then that means the higher GPU clocks are only with the DDR3 versions, lower clocks with GDDR5.

In any case, the 650M clearly has higher stock clocks than the 640M, whereas the 740M shows higher stock clocks than the 750M.

Although from Cloudfire's images it shows the 740m with slower clocks and DDR3. I guess the whole "up to" is a bit misleading and confusing. -

Typical Nvidia mess, HTWingNut. They make base specification that no OEM actually follows

-

15% more performance for 15% more in price or 150% ?

-

Meaker@Sager Company Representative

Sorry I should say 660M and 650M.

740M will have UP TO the same clocks as the 750M but only have DDR3 or have GDDR5 but be clocked lower. -

there's so much more to a good graphics card than "straight line speed"

This Boost 2.0 sounds like a nice feature, but when things are tough, your GPU is blasting at 100% and your CPU is toasty, you're going to be beyond the envelope of operation for Boost 2.0. So right when things are getting tough, when things are really heating up, it turns off and downclocks?

it might be 5%, 10% or 25% "faster" with this new feature but is that a useful 5% or 10% or is it wasted when gpu load is low anyway and you don't really need the extra frames.......

Just my opinion.

I'm going to head on a slight tangent, I hope it will provoke thought.

I've been engineering various mechanical solutions for 15-plus years and work in research and development, and what I've learnt about "figures" is that they mean absolutely nothing. I ran a race team (Road Racing, the legal sort!), and it was easy to look at the figures and graphs, point out a few peaks and say that's faster, especially when dyno testing the engines, but that's not the way it works.

When looking at a graph, ignore the minimum and maximum and, like any good scientific assessment, disregard any pointless results outside your operating area. Translate this to a graphics card: ignore the raw min, max and average, and remove results outside your range. Let's say ignore anything above 120fps, as on a 60Hz monitor it's reasonable to assume this is largely wasted on most of us. Now plot the remaining data and, rather than look at its maximum, look at its average and minimum. Now we have comparable results, and for a general idea of "power", the AREA of the shape under the graph line is the sum of performance. This will give a better idea of noticeable performance, and the key values for us gamers are probably the lower and mid portions of the graph, as we won't notice if FPS jumps from 60 to 80 or 160, but we will notice if it's going from 80 to 40 to 20....
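To make that concrete, here is a minimal sketch of the idea. The function name `summarize_fps`, the one-sample-per-second interval and the two FPS traces are all made up for illustration. Note one deliberate choice: samples above the cap are clamped to the cap rather than dropped, so the time axis stays intact for the area calculation; that is one reading of "ignore anything above 120fps", not the only one.

```python
def summarize_fps(samples, interval_s=1.0, cap=120.0):
    """Summarize an FPS trace after clamping every sample to `cap`.

    Returns the minimum, the average, and the trapezoidal area under the
    clamped FPS-versus-time curve (roughly the number of useful frames).
    """
    if not samples:
        raise ValueError("need at least one FPS sample")
    clamped = [min(s, cap) for s in samples]
    # Trapezoid rule between each pair of neighbouring samples.
    area = sum((a + b) / 2 * interval_s for a, b in zip(clamped, clamped[1:]))
    return {
        "min": min(clamped),
        "avg": sum(clamped) / len(clamped),
        "area": area,
    }


# Hypothetical traces, one sample per second (invented numbers).
spiky = [160, 150, 40, 20, 80, 160]
steady = [70, 72, 68, 71, 69, 70]

for name, trace in (("spiky", spiky), ("steady", steady)):
    print(name, summarize_fps(trace))
```

In this made-up example the spiky card still wins on area even after clamping, but its minimum of 20fps is what you would actually feel, which is why the minimum and the lower part of the curve need to be read alongside the area.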

I hope this makes sense lol. Ultimately, you can't trust these basic "benchmarks" and overall values. There are so many different ways to look at the face value, and my opinion is that it's largely pointless: blinding consumers with values that are largely irrelevant to them. I'd love to devise a method of standard benchmark values, so you'd get a min/max/average/calibre41's result lol -

Typical marketing. nVidia, Intel, AMD all do it. They show relative performance to their own components and theoretical max which is definitely misleading. I agree with you 100%, I've thought of ways over the years to improve on benchmark values but the problem is it becomes criticized and your typical user/gamer doesn't read the details only the benchmark results. They'll see 47FPS average (using your recommendation) when other places show 80FPS average and they'll spew vomit and hatred your way. Unless you're a highly established publication, it would be hard to establish a new way of benchmarking and thinking.

In any case I take all this pre-release marketing performance with a massive grain of salt. Until I see actual performance numbers, it means nothing.

NVIDIA Releases New GeForce GT 700M Mobile GPUs

Discussion in 'Gaming (Software and Graphics Cards)' started by Cloudfire, Apr 1, 2013.

![[IMG]](images/storyImages/gk208.jpg)