Everything I have read in the past 12 months says Maxwell is 20nm. Where have you seen anything stating it is 28nm? Six months ago there was even a little hope that the x200 series would be on 20nm, but that turned out to be false. I find it highly unlikely they would release another architecture on the same 28nm process.

-

HopelesslyFaithful Notebook Virtuoso

-

It is because the 20nm manufacturing process isn't ready yet; it needs another half year or more, and Maxwell is coming earlier than that. So obviously they are keeping 28nm. Here is some reading about that.

-

Yup, the recurring rumor is that Apple is sucking up all 20nm manufacturing capacity, and Nvidia/AMD won't be getting any until the second half of 2014 at the earliest. Which means I will be skipping first-generation Maxwell.

But really, we do need an updated roadmap, because with the extension of Kepler and the delay of 20nm, everything has been turned on its head. At this point, however, I'm just expecting bigger and hotter 28nm chips. -

Don't expect 20nm chips until the second half of 2014, and as usual AMD might be the first out with a 20nm GPU. As for which company will come out with 20nm chips first overall, it's probably Samsung.

-

HopelesslyFaithful Notebook Virtuoso

No, Intel has been first for almost 2 years... seriously, it is bad. Intel will have had 22nm for 2-3 years before everyone else -_- It used to be only 1 year. No wonder Intel isn't keeping its old time frame... there's no reason to :/ I do think Intel is smart to resell its extra fab space, though.

I am surprised they haven't done it in the past. Imagine if Intel used the extra space for AMD's GPUs.

14nm GPU next year, OMG!!! (geeking out, as you can tell)

I am curious how Intel is staying so far ahead. Are they just smarter, or does their premium pricing give them a bigger R&D budget to just throw money at it? -

Part of it has to do with money, although according to this, Intel only overtook Samsung as the biggest fab spender this year. The most significant reason Intel is so far ahead, in my opinion, is a lot of advance planning. While TSMC and others are still trying to figure out the best ways to get to smaller nodes, Intel had everything up to the current generation mapped out back in 2006, and their executives have stated they've got 10nm figured out now. Their tick-tock model also prevents them from becoming complacent.

As to why they're renting out fab space, traditional processor markets are slowing or declining, so Intel (presumably) can't fully fill their fabs with their own chips anymore. As a result, they're letting others use them, provided the others don't directly compete with Intel. -

Karamazovmm Overthinking? Always!

They rent fab space because otherwise those machines would become scrap.

Not everything that goes inside is made on the same process node, for example Crystalwell and various parts like the PCH.

But that still doesn't cover what those factories can dish out, so they try to get contracts for the rest.

A similar example would be how US car makers dumped low-quality cars in South America to use machinery that would otherwise have gone to the trash: they brought that machinery over and made the cars those machines could make, i.e. garbage. -

Intel isn't only making stuff for other companies on its older fabs. It's renting out space on the current 14nm fabs as well. See Intel Makes 14nm ARM for Altera | EE Times

-

Karamazovmm Overthinking? Always!

That's interesting. I think I read something about it but completely forgot.

That raises the question: how much of a drop in CPU demand is Intel expecting? -

Jayayess1190 Waiting on Intel Cannonlake

-

^So according to that, unless Apple switches to the H series in the 13" Retina MBPs, we're going to be stuck with 2 cores and GT3 (non-e) graphics again.

-

-

Karamazovmm Overthinking? Always!

You mean Skylake.

My hopes of Broadwell coming with DDR4 are getting smaller by the minute, even though the industry is slowing down DDR3 production and prices are going up considerably. -

Broadwell will only support DDR4 on the server side.

-

Let's revisit that article you guys got that from, shall we?

http://www.computerworld.com/s/article/print/9244215/DDR4_memory_may_not_find_way_into_PCs_tablets_until_2015

Broadwell will support DDR4.

This analyst doesn't know. He assumes.

Then the analyst goes on to say:

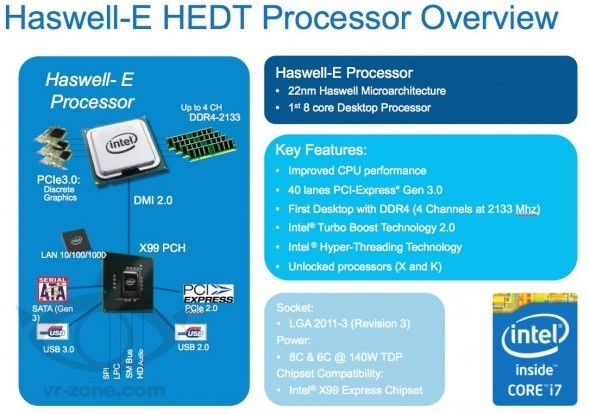

He already said something wrong there: Haswell-E certainly isn't 18 months away from now, and it will be on client PCs...

So yeah, like I said, odds are improving that mobile broadwell in June 2014 will also support DDR4.

Micron and Samsung are already mass-producing DDR4 as we speak. Since DDR4 will be available to consumers by June 2014 and desktop users will already be using it, why shouldn't we get it too? -

Karamazovmm Overthinking? Always!

We already knew that Haswell-E is going to have DDR4, and I didn't get that from that article; I haven't read it.

It's a simple matter: given that Haswell-E is going to have it, there is a much smaller chance that Broadwell has it. While it's not impossible, it certainly doesn't play in its favour.

I don't know; I don't have much in the way of expectations. I do hope that next year brings DDR4 and SATA Express, because I really want a new PC; it's going to be 3 years already with this one. -

My desktop should turn 4 by the time DDR4 comes out. Upgrade tiiiime

-

Based on what...?

You are just making assumptions based on nothing. -

HopelesslyFaithful Notebook Virtuoso

Agreed, that sentence makes no sense. If Haswell-E didn't have it, then it would be less likely, but it does... so that makes it more likely :/ -

Exactly. At least someone besides me also sees that since Haswell-E supports DDR4, Intel might think that regular users like you and me should finally get to use it.

DDR4 @ 2133MHz (which is shown on that slide above) consumes 25% less power than DDR3 @ 1866MHz (source), so if you ask me, that makes the move even more needed in battery-powered notebooks than in desktops. -

Karamazovmm Overthinking? Always!

Because supplies of the new tech should be tight, and that favours the OEMs with their existing DDR3 supplies. Also, with the change from DDR2 to DDR3 not all CPUs supported it, and the list goes on.

And I'm not even saying it isn't going to happen, just that I don't believe it will. -

Meaker@Sager Company Representative

Not all chipsets supported it. Now that the IMC is on the CPU, it becomes a different game. With the IGP getting stronger and stronger, Intel has a large incentive to push DDR4 to keep an edge over AMD's APUs, which are about their only threat.

-

Jayayess1190 Waiting on Intel Cannonlake

-

Jayayess1190 Waiting on Intel Cannonlake

-

18 cores? What?

-

Glue some Bay Trails together on a die?

-

On the GPU? What are the Intel GPUs at for "cores" these days?

-

Karamazovmm Overthinking? Always!

We already have 12-core CPUs, and no, the GPU tops out at 40 EUs, so we are already above that. -

Must be talking about the server market, because there sure are no 12-core consumer CPUs.

-

Doubt they could fit a 12-core in a laptop without running it at 1GHz, not to mention costing a fortune.

-

Also, for processes that are not highly parallel, there is a sharp drop in the performance gained per core added. In practice, this means a processor with more than eight cores is not worth the cost of producing for client workloads. Higher core counts are only useful in graphics and other programs that are specially designed to be nearly 100% parallel (as in supercomputers).

-

Karamazovmm Overthinking? Always!

Of course, there are no 6, 8... cores on the real consumer line.

When you move to the enthusiast line, you top out at 6. -

I remember hearing about Intel's 18 core Haswell Xeon processors a few weeks back on a podcast; I think it was on Twich.

Could any workstation-type application/processes even make use of that many cores or is it more of a proof of concept?

From the business side of things, the payoff (meaning productivity) has to be worth it for the user to justify the price, no? (I am sure it will carry a hefty price tag.)

Sent from my Nexus 5 using Tapatalk -

Karamazovmm Overthinking? Always!

Yes, they can.

Here are some examples:

AnandTech | The Mac Pro Review (Late 2013) -

Interesting article, but how does a review of a Mac workstation relate to my question? Maybe I missed something?

-

Meaker@Sager Company Representative

Because it looks at threaded applications due to the 2011 socket used?

-

Confused here as well.

-

Karamazovmm Overthinking? Always!

That's the point.

Imagine that other apps, like MASSIVE, also make good use of multiple CPU cores. -

Sooo, no more M CPUs? End of an era for CPU upgrades?

Aside from that, I don't see many changes from Haswell. -

I don't even think Massive Prime could make good use of 18 cores. On their product page they state: "a workstation with four cores may run sims up to 3.9x faster." By that logic (3.9x / 4 cores × 18 cores), 18 cores would make sims 17.55x faster; I am willing to bet that isn't even remotely near the actual performance gain.

http://www.rfx.com/products/49
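For comparison, here is a quick sketch of what the same single data point implies if you assume (hypothetically; the product page says nothing of the kind) that sim time follows Amdahl's law instead of scaling linearly. The function names are made up for illustration; the math inverts Amdahl's law to get the parallel fraction implied by "3.9x on four cores" and then extrapolates to 18 cores:

```python
# Hypothetical illustration: extrapolating the quoted "3.9x on four cores"
# to 18 cores. Assumes (not stated by rfx.com) that sim time follows
# Amdahl's law; these are estimates, not benchmarks.


def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over one core when only parallel_fraction of the work scales."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def implied_parallel_fraction(speedup: float, cores: int) -> float:
    """Invert Amdahl's law: the parallel fraction that one measured speedup implies."""
    return (1.0 - 1.0 / speedup) / (1.0 - 1.0 / cores)


QUOTED_SPEEDUP, QUOTED_CORES, TARGET_CORES = 3.9, 4, 18

p = implied_parallel_fraction(QUOTED_SPEEDUP, QUOTED_CORES)
linear_estimate = QUOTED_SPEEDUP / QUOTED_CORES * TARGET_CORES  # the "by that logic" figure
amdahl_estimate = amdahl_speedup(p, TARGET_CORES)

print(f"implied parallel fraction: {p:.4f}")
print(f"linear extrapolation to {TARGET_CORES} cores: {linear_estimate:.2f}x")
print(f"Amdahl extrapolation to {TARGET_CORES} cores: {amdahl_estimate:.2f}x")
```

Under that assumption the same data point gives roughly 15.7x rather than 17.55x, and real-world scaling could well be lower still once memory bandwidth and lower all-core clocks come into play.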

My conjecture is that there currently isn't software available that can make use of 18 cores... that's okay, though; the apps will catch up.

But I digress, I don't want to get the thread off track.

-

Maybe clock for clock Broadwell will run hotter.

-

Jayayess1190 Waiting on Intel Cannonlake

There will still be PGA-socket "M" CPUs, but just like now with Haswell, they won't be used as much as ULVs or quad cores. -

HopelesslyFaithful Notebook Virtuoso

This is F@H on a 3720QM (full speed is 3.5GHz at ~45W TDP):

2000 MHz

17 W TDP

117.6470588 perf/W (MHz per watt)

57% of Max Perf

38% of Max TDP

151% Efficiency
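Those ratios can be reproduced with a little arithmetic. This is a sketch, assuming clock speed in MHz stands in for performance and the reported TDP stands in for power draw (the post doesn't spell out how the ratios were derived, but these assumptions reproduce every figure listed):

```python
# Recomputing the F@H efficiency figures for the 3720QM quoted above.
# Assumption: clock (MHz) is the performance proxy and reported TDP (W)
# is the power figure; "efficiency" is perf share divided by power share.

MAX_CLOCK_MHZ = 3500  # full speed quoted in the post
MAX_TDP_W = 45        # nominal TDP quoted in the post


def efficiency_report(clock_mhz: float, power_w: float) -> dict:
    perf_share = clock_mhz / MAX_CLOCK_MHZ
    power_share = power_w / MAX_TDP_W
    return {
        "perf_per_watt": clock_mhz / power_w,
        "pct_max_perf": 100 * perf_share,
        "pct_max_tdp": 100 * power_share,
        # above 100% means each watt buys more clock than at full speed
        "efficiency_pct": 100 * perf_share / power_share,
    }


for name, value in efficiency_report(2000, 17).items():
    print(f"{name}: {value:.1f}")
```

The same function can be called with other measured clock/power pairs to look for the sweet spot, though clock speed is only a rough proxy for F@H throughput.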

2GHz was more efficient than 1.8GHz and 2.6GHz; 2.2GHz might have been even better, but I never tested that frequency to find the exact optimum.

With those numbers, you could get away with a 16-core 22nm chip at 2GHz; it would run at ~60W TDP. I assume it would be around 60W rather than 68W (4 × 17W) because some things would not have to be duplicated, like extra idling GPU cores and other parts of the CPU.

I have not gotten my hands on a Haswell chip to test scaling, but I bet it will be better down the road with the new things being done. A 16-core 2GHz chip is very doable in a good laptop like an M18x or a Clevo.

2.6GHz was 23.5 watts, BTW, so if you had a beast of a cooling system you could get a 16-core 2.6GHz 22nm IB in a laptop. I was able to jerry-rig my G51J (920XM) to handle 68 watts at 80C, IIRC.
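A back-of-the-envelope version of the 16-core estimates, assuming (hypothetically) that package power scales linearly with core count from the measured 4-core figures, 17W at 2GHz and 23.5W at 2.6GHz. Since uncore and iGPU power wouldn't really be duplicated four times, treat these as rough upper estimates, which is why the post guesses ~60W rather than 68W at 2GHz:

```python
# Naive scaling of the measured 4-core IB package power to 16 cores.
# Assumption: power grows linearly with core count at a fixed clock and
# nothing (uncore, iGPU) is shared, so the results overstate the real figure.

MEASURED_CORES = 4
measured_watts = {2000: 17.0, 2600: 23.5}  # MHz -> W, from the F@H tests above


def naive_package_power(clock_mhz: int, target_cores: int) -> float:
    per_core_watts = measured_watts[clock_mhz] / MEASURED_CORES
    return per_core_watts * target_cores


for clock in sorted(measured_watts):
    print(f"{clock} MHz, 16 cores: ~{naive_package_power(clock, 16):.0f} W (upper estimate)")
```

At 2.6GHz this works out to ~94W, well above the 68W the modified G51J handled, hence the need for "a beast of a cooling system".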

You could get away with a 16-core CPU in an M18x if it were programmed correctly: you could run 4 cores at 4-5GHz, 6-8 cores at ~4GHz, and 16 cores at 2-2.6GHz. It would work; they just haven't programmed Windows to manage the CPU well enough to know what parameters to follow. Plus, no one really thinks it is worthwhile to do.

I want to get my hands on a Haswell, but preferably a Broadwell, to see how the IVR works and how it compares to an IB CPU. If IVR does work, then a 16-core laptop is easily doable.

For whatever reason, no one wants to get creative with technology :/ The tech is out, but everyone is OK with the status quo. -

The "programmed correctly" part is the most relevant. Amdahl's law dictates the limits on how much increasing the core count can increase performance, and those limits depend on how much of any given program can be executed in parallel. Very few programs are entirely parallel, which means they are limited by serial performance and gain less as more CPU cores are added. According to the chart provided on the second page of this article, programs that are 50% parallel will run at most twice as fast on a multicore processor as on a single-core processor, and they are already close to that limit (about 1.9x) at 16 cores. Even highly parallel (95%) programs gain an absolute maximum of 20x from any number of cores, and they only approach that limit at around 4096 cores. The increase in performance per core shrinks as more cores are added.
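For reference, Amdahl's law gives the speedup on n cores as S(n) = 1 / ((1 - p) + p/n), where p is the fraction of the program that runs in parallel, so the cap with unlimited cores is 1 / (1 - p). A short sketch (ideal scaling assumed; it ignores clocks, caches and memory bandwidth) that reproduces the 2x and 20x caps and shows how close 16 and 4096 cores get to them:

```python
# Amdahl's law: speedup on n cores when a fraction p of the work is parallel.
# The limit as n grows is 1 / (1 - p), which is where the 2x and 20x caps come from.


def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cores)


def amdahl_limit(parallel_fraction: float) -> float:
    """Speedup with an unlimited number of cores."""
    return 1.0 / (1.0 - parallel_fraction)


for p in (0.50, 0.95):
    print(
        f"{p:.0%} parallel: cap {amdahl_limit(p):.1f}x, "
        f"16 cores {amdahl_speedup(p, 16):.2f}x, "
        f"4096 cores {amdahl_speedup(p, 4096):.2f}x"
    )
```

The caps are only approached asymptotically, so "maximum" here means the limit the curve flattens toward, not a value reached at a specific core count.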

When you figure in economics, there comes a point where it is no longer cost-effective for companies to keep adding CPU cores that deliver less and less benefit. For programs that are 50-95% parallel, a dual-core processor is about 1.3-1.9x as powerful as a single-core processor, which is definitely worth it compared to the ideal of 2x. A quad-core processor is about 1.6-3.5x as powerful as a single-core, so not quite as good a deal compared to the ideal (4x), but still okay for more parallel programs. 8-core CPUs are about 1.8-5.9x, and 16-core CPUs about 1.9-9.1x, as powerful as single-core CPUs.

So while it is definitely possible for companies to put out 16-core processors now, customers are not going to see much real-world improvement over quad-core processors of the same design unless the programs they use are all highly parallel. Not enough programs are highly parallel for the vast majority of customers to pay the substantial (4x) price increase a 16-core processor would require, only to get a performance increase of 50% or less over a quad-core in most real-world applications.
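The core-count ranges and the "50% or less over quad-cores" point can be checked with the same ideal Amdahl's-law formula. This sketch assumes perfect scaling of the parallel part and the same per-core speed at every core count; the 75% row is an added middle value for illustration, not something taken from the linked chart:

```python
# Illustrative Amdahl's-law numbers for 2/4/8/16 cores and the 16-vs-4 gain.
# Assumptions: ideal scaling of the parallel part, identical per-core speed;
# 0.75 is an added middle value, not from the original chart.


def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cores)


def gain_16_over_4(parallel_fraction: float) -> float:
    """Relative improvement of a 16-core over a 4-core part (0.5 means +50%)."""
    return amdahl_speedup(parallel_fraction, 16) / amdahl_speedup(parallel_fraction, 4) - 1.0


CORE_COUNTS = (2, 4, 8, 16)

for p in (0.50, 0.75, 0.95):
    speedups = "  ".join(f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in CORE_COUNTS)
    print(f"{p:.0%} parallel -> {speedups} | 16 vs 4 cores: +{gain_16_over_4(p):.0%}")
```

Under these assumptions a 16-core part is only about 18% faster than a quad-core at 50% parallel and about 47% faster at 75%; it takes something like 95% parallel code before the extra cores pay off substantially.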

I disagree with your conclusion that higher-core processors are not available because no one wants to get creative or people are satisfied with the status quo. Instead, I believe the reason many-core processors have not further proliferated is because there aren't enough programs that are parallel enough to take advantage of them and they aren't cost-effective to make or purchase for the vast majority of consumers. When this is combined with the slowing gains in computing performance at each new process node, performance is approaching the physical limits of its base (silicon), and completely new technologies will need to be developed if significant performance gains are going to once again be possible. -

Thanks for inadvertently answering my question

-

HopelesslyFaithful Notebook Virtuoso

You missed the point I was making with IVR. You can throw a 16-core chip in a laptop now and just run it on 2-6 cores with marginal added heat, and when you do need the extra cores it would work quite well at 2-2.6GHz. Plenty of people on these forums would take that and see a difference from having more than 4 cores. Also, they already sell 16-core Xeon laptops, but my point was that you could easily throw in an 8-16 core with no issue, and there are benefits. The main point is that the cooling does exist for it, and the new IVR tech should allow it to scale well, but Windows would never scale it appropriately.

Furthermore, Intel got really damn lazy with turbo boost voltage. I talked about it before when I was testing IB scalability to compare it with Haswell or Broadwell down the road (IVR vs. no IVR). Intel uses some real half-baked algorithm for turbo voltage. I think it is a static addition: for every extra multiplier, a set amount of voltage. The reason I believe this is that if you set your IB CPU to a 1.6GHz base multiplier instead of the 2.6GHz base and have it turbo to 2.6GHz, it runs a good 1-3W TDP higher, if I recall. It appears they programmed the turbo voltage assuming a 2.6GHz base, and it can't adjust to whatever the base is set at. So the problem is that Windows and Intel fail to properly control the CPU to maximize performance.

I personally could see a use for a 6-8 core CPU now... 16 cores? Not so much, besides running F@H on 16 cores just because. But 6-8 cores I definitely could use. -

Details of Intel's upcoming 9-series chipset (the one required to run Broadwell CPUs):

No mention of DDR4, no mention of SATA Express. Poop!

So the only improvement in my eyes is support for PCIe M.2 SSDs, which is what we will see in notebooks this year: a slot for mSATA SSDs running at 1GB/s, with RAID0 support for 2GB/s.

Specs and highlights of Intel -

HopelesslyFaithful Notebook Virtuoso

I love the die shrinks, but... no DDR4 and no SATA Express :/ I might still wait.

EDIT: Sorry, I'm referring to desktop... I am good on my M17x for now. Granted, a desktop is always easier to upgrade ^^ so swapping the mobo and CPU isn't that bad. -

Well, unless you can keep everything in cache, access to RAM will be one core at a time, and that'll slow things down.

Business-wise, I wouldn't expect all the innovations to be given in one hit; they need to save some to make the next thing appealing. Maybe start off with 6 cores and leave 8 for later. -

Are these "U" CPUs any good? Do they match the performance of "M" CPUs?

Forget Intel Haswell, Broadwell on the Way

Discussion in 'Hardware Components and Aftermarket Upgrades' started by Jayayess1190, Mar 16, 2010.