Maybe it is the annoying "Adaptive" behavior instead of throttling. If you set the NVIDIA Control Panel profile for this game to "Prefer maximum performance" it might stop that, but it will not if the game doesn't create enough demand to hold the GPUs in 3D gaming mode. If the demand is too low, the cards will switch to 2D desktop mode.

Have you guys tried using NVIDIA K-Boost with EVGA Precision X yet? That might help. It should lock your core and memory clocks to whatever fixed rate you want to use and keep it there 24/7 regardless of 3D demand or until you disable K-Boost. You will find K-Boost on the little voltage adjustment app.

-

-

Looks like underutilization?

-

You can complain all you want, but the problem lies within the vbios you are using and the boost function Nvidia uses on the cards today.

To quote Anandtech:

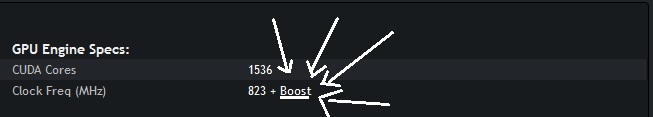

Here is GTX 780M. Everything above 823MHz is BOOST and is controlled by what Anandtech explains above

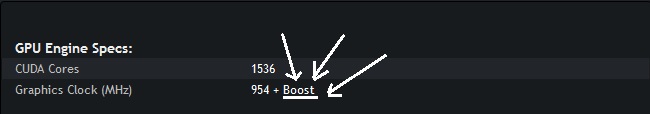

Here is GTX 880M. Everything above 954MHz is BOOST and is controlled by what Anandtech explains above.

To quote you:

You hit almost 90C with the GTX 880M which you mention in this thread. And the CPU runs hot even when undervolted.

I explained earlier that your Firestrike score is so low because the "Combined Score" is so low (the rest, Physics (CPU) and GPU, are perfectly OK).

3DMark Firestrike in Combined mode explained:

Your problem is that when running extremely taxing benchmarks (and maybe some games like 64-man Battlefield etc.), your GPU will not go beyond 954MHz because GPU Boost 2.0 gets in the way. It is most likely the temperature limit coded in the vbios, which acts as a safeguard put there by the ones who made the vbios, not Nvidia. In these benchmarks and games, you tax your CPU and GPU pretty hard at the same time, and according to you the CPU runs pretty hot. Well, some of the heat from the CPU will be shared with the GPU in a constrained environment like a notebook.

The guys at T|I have made overclocking vbioses for many Kepler cards. What they do is remove GPU Boost because of the issue you are having, increase the voltage slightly to allow more room for higher core clocks, and increase the power target so that the vbios doesn't quit on you when it would normally throttle the GPU for going over the power limit.

I can't make it any clearer than this.

GPU Boost shares some of the blame as well, and that is not exclusive to the GTX 880M but applies to many Kepler GPUs. And since the GTX 880M is known to run pretty hot due to its high core count and high clocks, you are walking a thin line between throttling and working like it should. This is well known among most people here. That's why I said you can't expect much more when already using an overclocked GTX 780M.

With a new vbios from T|I and the gang, you might get it to work better. -

Yeah there has been an update to my temp issue... it was the modded vbios causing the temp issue. There is no temp issue now, even with heavy Watch Dogs @ 1080p, Ultra + 2xTXAA, the temps averaged 80C and fans weren't even maxed out.

My fire strike actually improved on physics and combined and decreased on graphics using the stock vbios. Why? Because stock vbios *will not* boost over 954MHz.

I'm going to try that with the K-boost and report back Mr. Fox

And I just ran Bioshock Infinite... it throttled the GPUs but once again, I don't see a problem when it's only 45% average utilization per card. The temps are much nicer...

GPU1:

![[IMG]](images/storyImages/2568rwn.png)

GPU2:

![[IMG]](images/storyImages/14dklt5.png)

Things are a bit different with Watch Dogs

GPU1

![[IMG]](images/storyImages/2hp3u2t.png)

GPU2

![[IMG]](images/storyImages/6dr4gn.png)

You can clearly see that things are working quite well in this game up until I enabled 8xMSAA - it shouldn't be too hard to notice where that happened looking at the graphs.

Going to try the K-BOOST with 3DMark and report back. -

You don't need to be clearer. What you have said is reasonable, and all that gibberish talk is fine and dandy, but it still does not explain why the 880M demonstrates aberrant behavior with GPU Boost disabled. Hypothetical reasoning is worthless. Remember, we measure results, not "good" intentions. We don't care about what NVIDIA's model is, we care about what we can do with it. Unfortunately, the answer now is "not so much" in terms of what we can do with it. If we cared about what NVIDIA wanted we wouldn't be turning off GPU Boost and taking matters into our own hands by using the vBIOS mods from svl7 and johnksss. Is a stock 880M better than a stock 780M? Yup. But, so what. Don't care... doesn't matter.

The 680M and 780M are absolute savage beasts with a modded vBIOS, but 880M is not because NVIDIA totally borked something. If the GTX 880M was simply a straight across rebrand with a new name and higher stock core clocks like so many say it is, then it would respond to a vBIOS mod the same as 680M and 780M do with a vBIOS mod. We would have a very predictable high performance outcome with a few simple advantages like more vRAM. It would not be a step backward in performance like we are seeing. Is it almost the same? Yup, certainly is. But just almost. NVIDIA changed something either in the vBIOS, hardware level or drivers, or a combination of the above, to make 880M an undesirable product based on how it behaves. That's a bad thing if you measure performance instead of "good" intentions and they need to undo whatever it is they did that screwed it up. If they don't, we can expect the complaining to be without end. I know I would be livid about it. -

But what if the modded vbios is the problem? Let's not forget that when I was sent the first test, there was an error that bricked my cards that didn't affect the test machine that had Optimus. Mistakes do happen. I have a feeling that the modded vbios needs to be refined based on whatever nVidia changed and things will work out. You said yourself that the 780M was a mess when it launched, did you not?

As for K-Boost... it sort of works. It locks the main GPU but not the secondary. This is the Fire Strike with the primary GPU locked at boost and the secondary running 954MHz

NVIDIA GeForce GTX 880M video card benchmark result - Intel(R) Core(TM) i7-4940MX CPU @ 3.10GHz,Notebook P377SM-A

That's still off from my modded vbios score

NVIDIA GeForce GTX 880M video card benchmark result - Intel(R) Core(TM) i7-4940MX CPU @ 3.10GHz,Notebook P377SM-A

But it's still better than the 8640 I got without K-Boost.

This would actually be a totally viable solution if it locked both cards, not just the primary. -

I am running mine with the modded vbios and 975mV, which is higher than stock but 25mV less than the modded vbios. The core is locked at 993.

I think it is just like you say. The 880M is Kepler stretched to the max in most laptops due to the cooling requirements. Really good cooling is required to push these GPUs much beyond stock specs.

-

I noticed that the card that was locked with K-Boost was @ 1.018v - why it didn't throttle for power limit is beyond me but yeah, these cards suck up a lot of power.

You got a better card than I did. I can't get any stability with undervolting at stock clocks lol

I still think there is something else at play though. At the exact same clocks, I'm running between 6C and 13C lower on the stock vbios than on the modded vbios. -

K-Boost should lock both GPUs, but you have to disable SLI, reboot, select GPU0, apply it, select GPU1, apply it, reboot and then re-enable SLI. That's the only way I can get it to lock both video cards. Once it takes, it works great, but it's not a one-click-and-go feature.

The problem could be something else needs to be done with the modded vBIOS. But, it doesn't change the fact that having to resort to any vBIOS mod is kind of a sucky thing. The 680M and 780M should have performed stock as well as they do with a modded vBIOS. Unfortunately, they did not, but thankfully svl7 and johnksss worked together and fixed NVIDIA's problems. If they were paying attention you would think that they would have taken some clues from people that know what their customers want and released an 880M that worked like a vBIOS modded 780M. Remember the definition of insanity is continuing to do the same thing and expecting a different outcome. -

Well, the silicon is improved over the 780M. So it's not the card, unless you are unlucky and receive a bad one.

vbios ultimately controls the GPU so if OEMs make a sucky vbios, the GPU will suck.

Staying at 954MHz due to GPU Boost is one thing, but dropping below 800MHz in Bioshock is not OK. Is it game related though? Are you using an FPS cap, where the GPU drops down because the frame rate target is being met?

Have you checked out other games than those two? -

Yeah, it's just so sad that people who spend big bucks with no intention of settling for the lackluster stock GPU performance cannot realize a tangible benefit from that improved silicon... at least not yet. Hopefully, NVIDIA will fix it or our heroes (svl7 and johnksss) will once again save the day for NVIDIA owners.

-

If it's a driver issue, I agree, it's their responsibility. If it's a vbios issue, where some parameters are not optimal under high load, Nvidia won't do a thing. T|I are pretty much the only help, unless massive complaints cause the OEM to issue a vbios update (has that happened before?).

Possible issues I can think of:

Vbios (OEM's responsibility)

Drivers (Nvidia)

Games (Developers)

System BIOS (OEM)

GPU Boost (Nvidia, but they won't do anything)

Faulty card (Replace, contact shop)

That's the nice thing about a custom-made bios. Remove GPU Boost, add in enough power and voltage, and you just have an idle clock and a 3D clock to deal with.

You are right. The card runs pretty hot on many systems. I feel especially sorry for the MSI gang.

But even they have the card running at 993MHz most of the time

Furmark+Prime95, however, causes it to throttle down to 800MHz (power or thermal issue).

http://www.notebookcheck.net/Review-MSI-GT70-2PE-890US-Gaming-Notebook.115293.0.html -

I don't really take issue with the throttling of Bioshock - it doesn't have any performance loss. That game is a breeze for the cards, why should it run higher clocks and higher temps needlessly?

Same thing with Child of Light. It throttled... But why shouldn't it? Utilization on one card was in the teens, what's the point of running 954MHz and driving up temps for absolutely no reason? I think that nVidia did well with dynamic clocking in these situations just like turbo on Intel chips. Just like my CPU, there is no noticeable lag time when the clock speed is dynamically changing but the temps are dramatically different.

Thanks for the tip about disabling SLI, I'll try that later (rebooting is tedious lol).

-

Well yeah, that's what I'm thinking too. There could be some downclocking when high frame rates are detected, but I'm not sure. The same happened with Rayman, where it stayed at 770MHz.

Try out any demanding game and log clocks.
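For anyone who wants to log clocks without a GUI tool, here is a rough Python sketch built around `nvidia-smi`. It assumes `nvidia-smi` is installed with your driver and that your notebook GPU actually reports these fields (some cards return "[N/A]" for some of them, which the parser turns into `None`). The helper names `build_command`, `parse_rows` and `log_once` are made up for this example.

```python
import csv
import io
import subprocess

# Fields passed to nvidia-smi's --query-gpu option.
FIELDS = ["index", "clocks.gr", "clocks.mem", "temperature.gpu", "utilization.gpu"]


def build_command(interval_s=1):
    """Return the nvidia-smi command that prints one CSV row per GPU every interval."""
    return [
        "nvidia-smi",
        "--query-gpu=" + ",".join(FIELDS),
        "--format=csv,noheader,nounits",
        "-l", str(interval_s),
    ]


def parse_rows(csv_text):
    """Turn nvidia-smi CSV output into a list of dicts; unreadable values become None."""
    samples = []
    for row in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if len(row) != len(FIELDS):
            continue  # skip blank or malformed lines
        sample = {}
        for name, value in zip(FIELDS, row):
            try:
                sample[name] = int(value.strip())
            except ValueError:
                sample[name] = None  # e.g. "[N/A]" when the card does not report it
        samples.append(sample)
    return samples


def log_once():
    """Take a single snapshot of all GPUs (needs nvidia-smi on the PATH)."""
    cmd = build_command()[:-2]  # drop "-l <n>" so the command exits after one sample
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return parse_rows(out)
```

Run the command from `build_command()` in a console while the game is running and redirect it to a file, then feed the file contents to `parse_rows` afterwards to see where the core clock dropped.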

Good that you fixed the temp issue btw. -

Watch Dogs was my test with a demanding game. Up until I switched from 2xTXAA to 8xMSAA, it was boosting 993MHz on both cards. I think the MSAA is more a glitch in the game programming than a fault of the video card because if you look at the time stretch, the cards' utilization went down along with the clock speed.
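One way to tell throttling from plain underutilization in a clock log is to look at utilization at the moment the clock drops. A minimal Python sketch, assuming you already have (clock, utilization) samples from whatever monitoring tool you use. The 954MHz default is the stock base clock mentioned in this thread; the 90% "busy" cut-off and the function names are made up for illustration, so tune them to taste.

```python
def classify_sample(clock_mhz, util_pct, base_clock_mhz=954, busy_util_pct=90):
    """Label one (clock, utilization) sample.

    base_clock_mhz: 954 is the stock GTX 880M base clock quoted in the thread.
    busy_util_pct: hypothetical cut-off for "the game really wants the GPU".
    """
    if clock_mhz >= base_clock_mhz:
        return "ok"
    if util_pct >= busy_util_pct:
        return "throttled"      # card is busy but the clock was pulled down anyway
    return "underutilized"      # clock dropped because there was little to do


def summarize(samples, **kwargs):
    """Count labels over a list of (clock_mhz, util_pct) tuples."""
    counts = {"ok": 0, "throttled": 0, "underutilized": 0}
    for clock_mhz, util_pct in samples:
        counts[classify_sample(clock_mhz, util_pct, **kwargs)] += 1
    return counts
```

If most of the low-clock samples come back as "underutilized", the drop is probably the game not asking for more, not the card being held back.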

I can run my cards with +450MHz on the RAM with the stock vbios but its really not needed for any game I've come across thus far. -

Here's what the time stretch looks like with 2xTXAA

![[IMG]](images/storyImages/4hw8kx.png)

-

OK, I refreshed my AW 17. I've had no problems with my AW 18, by the way. I don't intend to overclock or mess with my AW 18 in any way, shape or form for this exact reason. Anyway, I've refreshed and I'm on the latest official driver, 337.88. I've run Heaven and my core clock runs at 993MHz at 73C (top) during the middle of the benchmark. I'm pleased with that, since the last time I fired up Heaven, my driver (beta and 337.88) was crashing left and right. It's nice to see that it didn't actually set this flag on my single 880M. I'm going to flash the modified vbios once I've installed all my programs. I will provide you all with an update. But at this point, I'm thinking that my problem was related to Windows 8.1 not mixing well with the beta driver (at least for me). I've tried EVGA, and the same problem with Voltage Tuner still exists. However, I'm not getting multiple instances firing up now. It's just that the program will NOT display even though it's running in my task manager. I have seen on EVGA's forum that members there are also encountering glitchy problems on Windows 8.1. I guess Microshaft really did shaft us all with this latest update (if you can call it that). I can also confirm that Child of Light now taxes my 880M. My 880M runs at 954MHz most of the time, but it also demanded 993MHz during the first 10 minutes of the game I played. Either way, make sure your options are set to "Prefer maximum performance" in NVIDIA Control Panel.

If it weren't for DX 11.2 being exclusive to 8/8.1, I would have gotten rid of this OS a long time ago. I have to say, Nvidia Inspector isn't throwing a fit now either. It has to be Windows that was causing my problem, or at least a new Windows update or something. Why can't any of these big brands get anything right these days? What happened to the good old XP days? Since then, we had the abomination that is Vista. Then we got the REAL update that is Windows 7. Then we got Windows 8 (which was okish) thrown down our throats with UEFI. Now we don't even get the choice. It's 8.1 or naff all. Apple's also heading down this route. These corporations have boneheaded people running their OS divisions for them. -

Hmmm... We have vastly differing opinions on Windows 8.1...

But I'm glad that you got it fixed! -

If I'm honest, I prefer Windows 7 UI any day over 8/8.1's UI. I've also found out, a few programs tend to be buggy in ways on 8.1. I don't think a lot of software devs have caught up with 8.1 as of yet.

Hey, don't jinx me. I've yet to try the modified vbios. My problems could come back with that. I've just read a few members' comments regarding K-Boost. They don't recommend it. The main reason being that the GPU clock seems to bug out during gaming. They say it's highly recommended for benching, but not during gaming. I will never get to test it since Voltage Tuner is buggy for me. Have you tried it yet? -

I guess I've gotten used to Windows 8/8.1 - I don't use the Metro menu for much of anything but right-clicking on the start button is heaven and I love that I can get 1080p from the Netflix metro tile on the TV while I'm playing a game on the computer with hardly any performance hit.

I did try it but I didn't know you have to disable SLI, reboot, enable, etc., it boosted the entire time on my first card which brought my fire strike up to 9133 from 8640 so it definitely works.
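To put that Fire Strike jump in perspective, the arithmetic is simple enough to check in a couple of lines of Python. The scores are the two quoted above; the function name is just for illustration.

```python
def percent_gain(before, after):
    """Relative improvement of `after` over `before`, in percent."""
    return (after - before) / before * 100.0


# Fire Strike without K-Boost vs. with K-Boost locking the first card.
gain = percent_gain(8640, 9133)
print(f"{gain:.1f}% higher")  # prints "5.7% higher"
```

So locking just one card was worth a bit under 6% in that run.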

I am starting to wonder whether I'll get better performance if I swap my primary and secondary cards in the system. My second card in the system is actually the master and it keeps getting throttled due to hitting power limits while the secondary card isn't being throttled at all. I think that it's probably pointless though. It's quite likely that it's being throttled for the tiny bump in voltage it gets for being the primary card... How lovely of Clevo to not account for that in the vbios -_- -

I and several others in this community have used K-Boost with SLI systems when gaming without issue. It might cause weird behavior on an Optimus based system. I've never tried it under those conditions.

-

Optimus is such garbage. I would rather reboot my machine ala M17xR1.

-

I agree... hate Optimus and Enduro. Both are stupid IMHO. The M18xR1/R2/18 must be rebooted to use integrated graphics or switch back to discrete graphics. I would be very unhappy if it were any other way.

-

I have to say that it's kind of a bummer I can't use the iGPU on my 9377 but I prefer that to the alternative of being forced to fight with Optimus.

-

Wow, I didn't know that. What's the deal? Clevo don't know how to build that functionality? I'm shocked it does not have manual switching. I know the P570WM does not, but those CPUs don't have Intel HD Graphics, so no biggie.

But, yeah... me, too. I would rather have only discrete graphics if the alternative was Optimus or Enduro. I don't want either one of those, ever, if the option exists to not have it. I have two laptops that have Optimus and wish both of them had manual switching instead. I did have a laptop with Enduro for a while and it was no better. The problem is not the concept, but the inability to exercise absolute control over what happens. It's more like your settings are "suggestions" to the PC instead of edicts that must be followed explicitly and without exception. That's what I expect when I use a PC... does whatever I say, when I say it, and without pause or protest... good or bad... just shut up and follow my orders, LOL. -

I thought I remember reading about an Fn+key function that caused a switch to HD4600. I won't have my NP9377 until Wednesday, so I can't check for it.

-

No manual switching because I have a 120Hz display. It has to be hooked up to the eDP port, I believe, instead of the other one, so it's directly on the GPU.

I believe Prema said no integrated graphics on 9377 at all though. It's not a big deal. High performance mode on battery still gets an hour and a half, I have no doubt the battery would go 2-3 hours on power saver.

-

Ah, OK that makes more sense. I did not notice your sig. The IGFX does not support that display as far as I know. I believe that is why the M17xR3/R4/17 with 3D capable 120Hz does not support switchable graphics. It would go at least 2-3 hours on IGFX. My M18xR2 gets close to 5 hours on a charge and when the battery was still new it was like 5:20 with dimmed display, no backlit keyboard, Windows Power saver mode, etc.

-

No iGPU functionality on any of the SLI Clevo laptops because of a lack of MUX, and display traces hardwired to master GPU. Optimus runs fine on single GPU systems.

-

Huh. I kinda wouldn't mind having the HD4600 take over when web browsing etc. Just to let my twin 880's chill out.

-

If you don't use the modded vbios, they chill out anyway. 135MHz clock rate for 2D, I believe.

With the modded vbios you can get them to chill out too, but you have to remove ShadowPlay from Windows startup or they're locked at 993MHz all the time. -

Oh is that right? I've been swinging back and forth about using the modded vbios, and I've got to say, I might want to hang back on that for a while, see how it goes. As it stands, I'll be in no need of performance for the time being. All the games I've wanted to play (Bioshock Infinite, Thief, Far Cry 3) play at 60 FPS or above on Ultra settings from what I've read. So when the time comes a year or so down the road and Half-Life 3 is released, and I need to get up to 60 FPS, Nvidia might have released some appropriate drivers by that point. Or svl7 and johnksss might revise their vbios with improvements, at which point I can flash and donate and all that happy horsecrap.

-

To be perfectly honest, it's not worth the temperatures right now. I almost RMA'd my machine for no reason (actually I have an RMA request already submitted to Sager to swap for 9570 but I'm not going to use it). Even setting the clocks to 771MHz to match a 780M, it would still hit the 80s... now I am back on the stock vbios and after 3 hours straight of Watch Dogs on ultra + 2xTXAA, I averaged 80C and never passed 87C, where before I would have crashed within 30 minutes, probably due to temperatures.

Unfortunately if you want accurate benchmarks, the modded vbios is a must. Every benchmark I've tested has some sort of throttle on stock vbios. -

It sounds like someone needs to come up with a cooling mod for the Sager/Clevos. I fully intend to prop up the rear end by a half-centimeter, which has always improved temps in every gaming laptop I've owned, sometimes significantly. But I'd like to see some brutal custom cooling mods. Brutal but aesthetic. It sounds like what these cards really need to complement the modified vbios is a superior cooling solution, at least for the Sager/Clevo. -

I don't think its the cooling system's fault.

I locked the frequency to 819MHz, which is the lowest you can get on stock vbios, left fans on auto, and my temps were still lower than the modded vbios at 771MHz with fans on max. There is something not right with the vbios; it's doing something not nice to the cards. I'd avoid it for now to be perfectly honest. -

He He

I'm damn glad I have a 120Hz monitor instead of the integrated graphics. I get much more pleasure from a 120Hz screen ;-) -

Yeah my 120hz screen is one of my favorite things about the laptop!

-

Late to reply here, but yes, this is true. I had this problem with Dark Souls 1 before. The game would lag and give me ~10fps quite a lot, and I couldn't figure out why. Turns out it simply didn't demand enough power from my system at 1080p, so I was throttling to 2D clocks. I had to downscale from 1440p, turn SMAA to x4 and use SCAO (VSSAO + HBAO) ambient occlusion and otherwise turn on every bell and whistle in order to keep my cards at their 850/5000 stock gaming clocks (far less if I overclock them for dark souls, good lord I'd need to run the game at 4k and force SLI)

-

Wow. That's still some serious heat. I'm running at 72C on stock at most. The custom vbios can give temps between 72C and 84C at 120/3000/50.

-

By the way, Ethrem... I keep forgetting your laptop is a Clevo even though this is in the AW forum. Right. I have something to point out to you.

You said that "GPU 2" was experiencing the heat with the modded vBIOS, and you were wondering why your slave was hotter than your master. I will say that from my P370SM3, my GPUs read as "GPU 0" and "GPU 1". My "GPU 1" is my primary GPU according to some programs, like Playclaw's sensors. It's a bit confusing, but it is possible that you in fact were reading your "second" GPU thinking it was your slave, but it really was the master. I know that my GPU 1 is my master and not GPU 0 because when I force a game to use 1 card, only GPU 1 spikes up, and GPU 0 sits at something like 5-10% while GPU 1 is blazing at 99% usage. So check this out, force a couple games to 1 card via control panel (but keep SLI enabled) and see which card actually gets used up. If I'm right, and your master card is your "gpu 2", then it fully explains your vastly higher heat difference, as you were reading the cards wrong.
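If you log utilization while a game is forced onto one card, picking out the card that is really doing the work can be sketched in a few lines of Python. This assumes you already have an average utilization figure per reported GPU index from your monitoring tool; the function name and input layout are made up for the example.

```python
def busiest_gpu(avg_util_by_gpu):
    """Return the index of the GPU with the highest average utilization.

    avg_util_by_gpu maps the index a monitoring tool reports (0, 1, ...) to the
    average utilization in percent recorded while a game was forced onto one card.
    """
    if not avg_util_by_gpu:
        raise ValueError("no utilization readings supplied")
    return max(avg_util_by_gpu, key=avg_util_by_gpu.get)
```

With readings like the ones described above (one card idling at single digits, the other near 99%), the index it returns is the card the driver treats as primary, whatever label the monitoring tool gives it.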

That being said, all of you having 880M problems is making me afraid to upgrade to the newer drivers... I'm still on 337.50 because I have no need to upgrade, but holy moocow, I'm afraid XD. -

I don't upgrade drivers unless I am having issues... unless I want to create some. =P

-

^^^ This is really good advice. Don't jump on the bandwagon like I and a few others did. You'll only end up regretting it. The same principle goes for purchasing any new tech, such as the new AWs, haha.

-

Don't you want the drivers that give, say, +20% in the upcoming game you want to play?

I have probably used 4 different drivers since I bought this notebook and have had no issues so far. Maybe I'm just lucky though. -

Yes I would. I tend to play the same game for a long period of time. Right now it's Mechwarrior Online and it's running very well. No need to upgrade.

-

I actually had already noticed that and accounted for it. My slave shows as the primary, the master shows as the secondary but it's still the slave that is hotter lol. The difference between them is better now that I am on stock vbios. It would appear that in addition to weaker cooling on the slave GPU, my slave GPU is also a weaker card. I've thought about trying to swap them around but it doesn't seem worth the effort when temps are lower than the fan profiles are even set for now.

-

My son updated to the latest drivers and got terrible performance. Took me a while to think that it may have defaulted back to Intel graphics. Yep, that was it. He was about ready to throw his XPS 15 out the window.

That example is not really minor though.

-

Latest beta driver crashed for no reason, I reverted back to 337.88.

-

There is no doubt that beta driver is horrible.

-

337.88 works fine with 780M, no performance issues, but no noticeable improvements either.

Especially so for SLI systems, since some older games are finicky about SLI and only certain drivers won't completely break them. -

I can't run anything less than 337.88 on my machine because I play Watch Dogs but the 340 driver really is a disaster... I don't have any issues with 337.88 to be perfectly honest about it.


Just got my 880M twins!

Discussion in 'Alienware 18 and M18x' started by Arotished, Apr 22, 2014.