Pretty accurate with regards to what? There are plenty of counterexamples. For instance, the A10-4600M's 7660G has a 3DMark11 score of 1300, which is more than the GT 630M, but it still can't keep up with the 630M in gameplay; in many cases it's unplayable where the 630M manages well over 30fps.

3DMark is a good comparison for raw performance and nothing more.

-

They optimize for 3DMark first, and only later for games. Remember the 7970M, which used to be slower than the 680M and is now faster, even with a slow CPU.

I don't think the 7660G vs. 630M is a good example for this case: one is an APU, the other is just a GPU. 3DMark11 doesn't require much CPU power, so the APU can fully use the 7660G's GPU power, while in games the CPU also has to work and share the power budget with the GPU. The 630M doesn't need to share power with anything, and the CPU can also run at its maximum... -

Pretty accurate for raw GPU performance, as you said yourself, at least for discrete GPUs. I'm just saying that you're comparing the 660M, which has had solid drivers for months, to the 8870M, which still had install issues under W8 a few weeks ago. Not a very wise choice. I've never seen a discrete GPU that loses by a 50% margin in 3DMark11 perform better in games. Unless the game is Nvidia-optimized, but in this case it's more likely down to crappy early AMD drivers.

But I agree, as far as APUs are concerned, 3DMark11 can be trickier because of the power-sharing issue. -

I don't think you can make allowances for the driver state once a card's been out a month or more. Waiting 2-3 months for proper drivers means that if I buy a machine now, I can't game, and there's no guarantee I'll be able to game anytime soon. It may not be the fault of the card but rather the company, but the point still stands. Even when nVidia releases flagship GPUs that are running at about 70-80% of their capability, they quickly fix their stuff and get drivers out fast. I could safely buy one of their cards and know up front what I can game on it, whereas with AMD's mobile cards that simply isn't the case.

They wanna push their APUs? Sure. I'm fine with it, let them go right ahead. But if they don't fix their stuff properly and then get backlash for it, they asked for it. -

To all you doubters who doubted me when I said Haswell would match or even exceed Richland:

OVER 2x the performance of Ivy Bridge, and 2.5x the performance of Ivy Bridge in 3DMark11. That means it will beat Richland for sure. I know it's just marketing from Intel, but hey, they state it's based on graphics performance rather than combined performance, so it does look promising.

![[IMG]](images/storyImages/Screen%20Shot%202013-05-01%20at%205.37.22%20PM_575px.png)

Source: AnandTech | Intel Iris & Iris Pro Graphics: Haswell GT3/GT3e Gets a Brand -

But if only GT3e were present in mobile chips... it'd actually make sense as a way to combat discrete graphics. And the cooling would be fantastic too: no GPU to cool means more cooling headroom for the CPU alone, even if the TDP is slightly higher. The best thing for them to do is to put the highest-end GPUs on the midrange CPUs, while the highest-end CPUs should have no integrated graphics at all, to allow for better OCing and more performance gains in the desktop market. NOBODY who buys the highest-end CPUs is planning to use the iGPU, but people who buy midrange prebuilt desktops, or who just want a machine for really light gaming, won't have to buy video cards if the iGPU in their dual-core Haswell is good enough to run anything on at least medium.

-

Fat Dragon Just this guy, you know?

While I agree that mid-range mobile CPUs are the ideal place for high-powered iGPUs, cutting the iGPU off the chip entirely doesn't make any sense in a laptop, since switching allows for long battery life even in brain-hemorrhagingly powerful DTRs, and there would probably be a big uproar in certain desktop communities if it were cut from any desktop CPUs without equivalent iGPU-enabled versions remaining available. -

Some of the 55W BGA mobile Haswells feature Iris Pro (GT3e), which does have dedicated RAM according to various sources (thought to be 64 to 128MB), backed up by photos; the DRAM (or eDRAM) acts as an L4 cache. It will apparently double the performance of the HD 4000 (which I can sensibly see being realistic with dedicated RAM).

I love the hype, I really do, and I see GT3e potentially having a big place in the market, but it's not going to be cheap, and surely that's the whole point... the end of mid-range dGPUs will only come about when Intel can offer that level of basic but true gaming performance across the board.

My sub-£500 W110ER came with a GT 650M with a whopping 2.0GB of GDDR3, a 2.5GHz Sandy Bridge, 4GB of RAM and a 320GB HDD... for under £500! I doubt any 55W GT3e- or even GT3-wielding notebook can boast that price tag.

It's got to be performance brought to the masses dirt cheap; that's the midrange market... -

Oh, I don't want them cut from ANY mobile chip; I simply meant the higher-end desktop chips, like the replacements for the i5-3570K and upward (3770K, 3960X, etc.). Even if they just gave the non-K chips an iGPU and left it off the K chips, that'd do, since the K chips are only $10 or so more than the non-K chips and almost nobody buys the non-K chips anyway; an unlocked multiplier is just flat-out worth the ~$10.

All I was saying is that nobody buys the highest-end DESKTOP chips to use the iGPU. I know about switchable graphics and its benefits for mobile, don't worry =3.

It still stands to reason, though, that lower-end mobile chips should have the good iGPU in 'em. -

That's something Intel is doing with Haswell. The CPUs with GT3e are clocked 400MHz lower than the CPUs with GT2. They can't remove the IGP completely, even though some people don't use it, because a lot of people need it so the powerful discrete GPU can be shut down when they're not gaming.

As for desktops, yeah, the cooling there is so good they wouldn't mind the GPU running at all times, even when not gaming. But there are CPUs without IGPs, several of them from Ivy Bridge. They're also clocked higher than the ones with an IGP, if I remember correctly. -

See my above clarification post XD

-

Now, your W110 comes with the lowest-end i5 at that price. Yes, the GPU is better, but a 55W or 47W i7 will be much better than your CPU. If I'm not mistaken, that 11.6-inch is one of a kind, too, offering big performance in a pretty small notebook, so I think most notebooks at that size will come with U-series CPUs, without GT3e. If one is ever made with GT3e, I couldn't see much of a problem with it having the same price as yours, $780, since it's so small. But in the end, yeah, your notebook will be faster in most games.

-

Karamazovmm Overthinking? Always!

There are no 55W TDP GT3e CPUs. The 55W TDP figure comes from the fact that Intel CPUs now have three TDP states: cTDP low, TDP and cTDP high.

No one said cheap. It's simple: how much does a quad core cost? OEMs have no need to apple here. The 3740QM and 3840QM are expensive as hell.

It depends on how much you save on the overall package. We know the CPU isn't going to be cheap, but without a dGPU the overall package might get a little cheaper. -

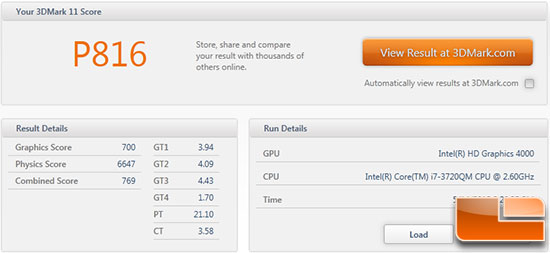

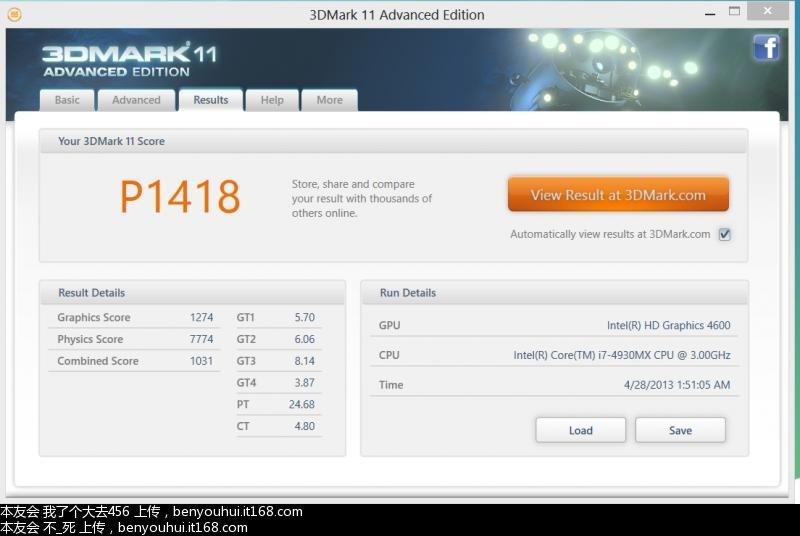

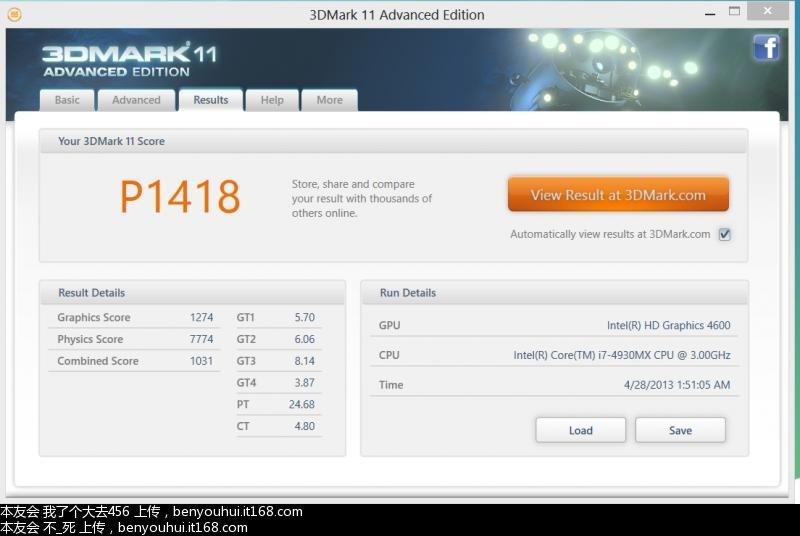

Intel Haswell with GT2 (20 EUs) has been tested: almost 1300 graphics points in 3DMark 11.

Source: http://benyouhui.it168.com/thread-3998249-1-1.html

Can't wait until GT3e with 40 EUs is tested.
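For a rough sense of what doubling the EUs could mean, here is a naive back-of-the-envelope sketch. It assumes (our assumption, not Intel's) that the 3DMark 11 graphics score scales linearly with EU count, ignoring clock speed, memory bandwidth and the eDRAM, none of which scale that cleanly in practice:

```python
# Naive linear-EU-scaling estimate for GT3e from the GT2 result quoted above.
# Assumption (not from Intel): graphics score scales linearly with EU count,
# ignoring clock speed, memory bandwidth and the eDRAM cache.
GT2_EUS = 20
GT3E_EUS = 40
GT2_SCORE = 1300  # "almost 1300" 3DMark 11 graphics points, as posted


def scale_by_eus(score: float, from_eus: int, to_eus: int) -> float:
    """Scale a benchmark score proportionally to the number of execution units."""
    return score * to_eus / from_eus


estimate = scale_by_eus(GT2_SCORE, GT2_EUS, GT3E_EUS)
print(f"Naive GT3e estimate: ~{estimate:.0f} points")  # Naive GT3e estimate: ~2600 points
```

Treat ~2600 as an optimistic back-of-the-envelope figure rather than a prediction; real GPUs rarely scale linearly with unit count.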

-

Karamazovmm Overthinking? Always!

So let's say it's 1100 for the HD 4600 on quads and duals (they're hardly different in score, as long as cooling and power aren't a problem).

I can actually see the 1600-1800 3DMark11 score that a lot of people guessed for the 5200 being true.

One thing I find troubling: let's say Intel's marketing graphs are correct. Putting the HD 4000 at 700-800, that gives 700-800 × 2.5 = 1750-2000, and combined with eDRAM this is going to be a blast. If we put DDR4 + eDRAM + a new architecture together with Broadwell, this is going to kill the mid range. -
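The projection in the post above is simple multiplication; this sketch just applies Intel's "2.5x" marketing claim to the assumed 700-800 HD 4000 range. Both inputs come from the thread, and whether the 2.5x holds uniformly across that range is an assumption:

```python
# Projection from the post above: Intel's "2.5x HD 4000" marketing claim
# applied to an assumed HD 4000 3DMark 11 graphics score of 700-800.
SPEEDUP = 2.5
hd4000_range = (700, 800)

projected = tuple(score * SPEEDUP for score in hd4000_range)
print(f"Projected GT3e range: {projected[0]:.0f}-{projected[1]:.0f}")
# Projected GT3e range: 1750-2000
```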

You do realize that by the time there's DDR4 + eDRAM + Broadwell, the GTX 680M will be the new low mid-range, right? So unless they can achieve 6000+ 3DMark 11-equivalent performance, it's highly unlikely to kill mid-range GPUs. Plus, this is OK for 720p gaming, but there's still the issue of higher resolutions, something only higher-bandwidth RAM and a high core count can fix. Dedicated graphics double in performance approximately every two years. IGP performance is growing more quickly, but only because it was never a priority for Intel before. Now they're just starting to address it, but they'll hit a wall quickly, and I doubt the next gen will double.
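The "doubles roughly every two years" rule of thumb can be written as score × 2^(years / 2). This is a minimal sketch of that rule, not a forecast; the 2012 baseline is the GTX 680M's 3DMark 11 score of 6158 quoted elsewhere in the thread, and the two-year doubling period is the poster's approximation:

```python
def project_score(score: float, years: float, doubling_years: float = 2.0) -> float:
    """Project a score forward assuming it doubles every `doubling_years` years."""
    return score * 2 ** (years / doubling_years)


# GTX 680M 3DMark 11 score as quoted in the thread; treated as a 2012 baseline.
GTX_680M_2012 = 6158
for years in (1, 2, 3):
    print(2012 + years, round(project_score(GTX_680M_2012, years)))
```

Under this rule a 680M-class score doubles to about 12,300 by 2014, which is the kind of gap an IGP would have to close.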

IGPs will only ever be good for low-resolution gaming. With 4K screens on the verge of being introduced, they're going to have to do a lot more than deliver 30fps at 720p with low to medium detail. -

GTX 680M in the low mid-range? Lol, what a joke. Maybe in 3-4 years, when we see 14nm from TSMC.

GTX 680M = 1344 shaders

GTX 650M = 384 shaders

The GTX 650M is considered an upper-midrange mobile GPU. A jump to GTX 680M performance for low-midrange models is impossible even with the next 20nm shrink. You need a reality check. -

Reality Check.

9800M GTX (July 2008): 3DMark Vantage = 4743

GTX 460M (Sep 2010): 3DMark Vantage = 7617, 3DMark 11 = 1814

GTX 480M (May 2010): 3DMark Vantage = 8872, 3DMark 11 = 2387

GTX 650M (March 2012): 3DMark Vantage = 9470, 3DMark 11 = 2300

GTX 680M (June 2012): 3DMark Vantage = 21163, 3DMark 11 = 6158

See a pattern there?

Hmm, the mid-range 460M nearly doubles the performance of the "top end" 9800M GTX from 2008, the mid-range 650M bests the "top end" GTX 480M from 2010, and the mid-range Maxwell 850M(?) will best the "top end" GTX 680M from 2012... and to be honest, I wouldn't be surprised if the Maxwell 880M had nearly 3x the performance of the 680M.
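To put numbers on the generational comparisons, this sketch divides the scores from the GPU history list above. The only inputs are the posted scores; the dictionary keys are just labels:

```python
# 3DMark scores as posted in the GPU history list above.
vantage = {
    "9800M GTX": 4743,
    "GTX 460M": 7617,
    "GTX 480M": 8872,
    "GTX 650M": 9470,
    "GTX 680M": 21163,
}
mark11 = {"GTX 460M": 1814, "GTX 480M": 2387, "GTX 650M": 2300, "GTX 680M": 6158}


def ratio(scores: dict[str, int], newer: str, older: str) -> float:
    """How many times faster `newer` is than `older` in the given benchmark."""
    return scores[newer] / scores[older]


comparisons = [
    ("Vantage", vantage, "GTX 460M", "9800M GTX"),
    ("Vantage", vantage, "GTX 650M", "GTX 480M"),
    ("3DMark 11", mark11, "GTX 650M", "GTX 480M"),
]
for bench, scores, newer, older in comparisons:
    print(f"{newer} vs {older} ({bench}): {ratio(scores, newer, older):.2f}x")
```

On these numbers the 460M comes out at about 1.6x the 9800M GTX in Vantage, and the 650M edges out the 480M in Vantage while sitting slightly below it in 3DMark 11.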

The 650M is a mid-range GPU, not upper mid-range. The 660M is the start of upper mid, and the 640M is lower mid. The 620M-630M are low end. The 670MX is really upper mid-range, and the 675MX is the bottom of the upper range...

610M - 630M = low

640M - 660M = mid

670MX = upper mid/low high

675MX = lower high

680M = high -

Karamazovmm Overthinking? Always!

What Im actually counting on is maxwell.

The main problem right now is the gap between mid range and high end. While you consider the 660M mid range, it barely scores 3k, while the 680M scores more than 2x that. And the difference in TDP is not only terrible, it's lackluster.

650M: 43W

680M: 100W

While the TDP difference is significant and approaches the difference in performance, we can count on our fingers how many notebooks have the 680M, or the 670MX or 675MX, and with the move to smaller and lighter notebooks, this is going to kill the mid range. It has already effectively wiped out the low range; mid is next.
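To check the claim that the TDP gap roughly tracks the performance gap, this sketch computes points per watt. It combines the TDPs from the post above with the 3DMark 11 scores from the GPU history list earlier in the thread, and it treats TDP as a stand-in for actual power draw, which is only a rough assumption:

```python
# TDPs from the post above; 3DMark 11 scores from the GPU history list earlier in the thread.
# Assumption: TDP is used as a rough proxy for real power draw.
gpus = {
    "GTX 650M": {"tdp_w": 43, "mark11": 2300},
    "GTX 680M": {"tdp_w": 100, "mark11": 6158},
}
for name, spec in gpus.items():
    print(f"{name}: {spec['mark11'] / spec['tdp_w']:.1f} points/W")

tdp_ratio = gpus["GTX 680M"]["tdp_w"] / gpus["GTX 650M"]["tdp_w"]
perf_ratio = gpus["GTX 680M"]["mark11"] / gpus["GTX 650M"]["mark11"]
print(f"TDP ratio {tdp_ratio:.2f}x, performance ratio {perf_ratio:.2f}x")
```

On these figures the 680M draws about 2.33x the TDP for about 2.68x the score, so it is actually slightly ahead on points per watt.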

What I basically see is the growth of smaller form factors and high-end GPUs becoming even more niche.

And let's face it: high-end cards double in performance, while mid range has been only incremental for a long time. For example, the 335M to the 540M is not impressive at all. There was a bigger jump when Kepler arrived, but really, 30% after three generations? -
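A 30% total gain spread over three generations compounds, so the average per-generation gain is the cube root of 1.3, minus one. This sketch takes the 30% and the three generations straight from the post:

```python
def per_generation_gain(total_gain: float, generations: int) -> float:
    """Average per-generation gain that compounds to `total_gain` (0.30 = 30%)."""
    return (1 + total_gain) ** (1 / generations) - 1


gain = per_generation_gain(0.30, 3)
print(f"{gain:.1%} per generation")
```

That works out to roughly 9% per generation.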

I agree, and I've noticed that too. They go from 128-bit GPUs to 256-bit GPUs with only a single 192-bit one. If Meaker is right, the mid range up to the 765M will be 128-bit GPUs. The 770M will likely be 192-bit, and again only a single 256-bit GPU with the 780M. I never saw much point in low-end dedicated GPUs anyway, since they were usually too underpowered to really play anything and usually coupled with weak CPUs. With the reduction in power and heat, though, mid-range dedicated may just become the minimum for a dedicated card anyway. If anything, maybe this will push nVidia and AMD to offer 192-bit GPUs as the mid range. I'd be fine with that.
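Bus width matters because peak memory bandwidth is bytes per transfer (bus width / 8) times the effective transfer rate. This sketch assumes the same hypothetical 4000 MT/s effective GDDR5 rate for all three bus widths; real cards ship with different memory clocks:

```python
def bandwidth_gbps(bus_width_bits: int, data_rate_mtps: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * data_rate_mtps / 1000


ASSUMED_RATE = 4000  # MT/s, hypothetical effective GDDR5 rate used for all three
for bus in (128, 192, 256):
    print(f"{bus}-bit: {bandwidth_gbps(bus, ASSUMED_RATE):.0f} GB/s")
```

At equal memory clocks, a 192-bit part gets 1.5x the bandwidth of a 128-bit one, which is why it would make a meaningful mid-range step.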

-

Karamazovmm Overthinking? Always!

Both need a kick to get their act together. iGPUs are meant to be low level; they should never have left the mid range in the shape it's in now.

The low range was there to please people stuck with Intel's notoriously bad iGPUs of the past, those focused on multi-monitor setups, and "premium" machines (because those GPUs could be used in entry-level multimedia notebooks). AMD had much better entry-level solutions with their iGPUs. AMD continued down their path, Intel saw that things were getting smaller, and boom, the low range is basically gone.

The mid range used to be multimedia: basically low-level gaming plus the capability to watch movies. The mid range has changed; now we want decent gaming, and we can have what used to be mid range on the iGPUs. If you wanna fire up a low-level game, you can; wanna catch a popcorn session, bam, done.

They cut costs by developing mobile and desktop together; Nvidia goes and cuts here and there to get their GPUs into the entry high end, a clear example being the 670MX or the 570M. This lowers R&D costs, but the wattage drops really only started with Kepler for Nvidia; AMD has been on this path for longer, and with Fermi's heat it was complicated to develop something that was still good enough for the mid range.

One thing that leaves me baffled is that while Nvidia and AMD know the big bucks are in mobile and professional, they don't allocate enough resources there, especially Nvidia. AMD has their CPU line, so they're content with the new DDR4, eDRAM, unified memory controllers and shared caches; they can profit either way, and without the trouble of the not-so-reliable TSMC. Nvidia? They're in deep with TSMC, and their only other option is their SoC line, which isn't enough to enter the notebook market, and in the end Intel and AMD are in deep in that market as well.

With this last sidestep, they need to get the 880M into the 100W range, 150W tops; then their mid range will not only provide satisfactory gaming but actually deliver 680M power. They focus on the flagship and cut down/rearrange to reach all the other ranges, so the focus is getting those ladies model-style anorexic in power consumption and dissipation. -

Fat Dragon Just this guy, you know?

Wasn't it just a year ago people were singing the praises of the 650m for being a legitimate mid-range gaming chip? It's the perfect fit for that segment - relatively inexpensive and power efficient, it can fit into a relatively small machine and can still play any game that has come out in the year since it was released, albeit the more demanding games at lower settings. With the widespread adoption of the 650m in dozens of models, it seems to be pretty much the epitome of a successful mid-range dGPU.

There's no real motivation for Nvidia or AMD to design an inexpensive 50W notebook GPU with 680m power (even if it's possible) because they would be shooting themselves in the foot by making their flagship GPUs redundant. Who's going to pay 3x the price for a huge, hot, heavy GPU when you can max out game settings on a cheap, efficient midrange part? With the new demands of next-gen consoles on the horizon, IGPUs are going to look like chopped liver for quite a while when compared to Nvidia and AMD's mid-range mobile parts. They might catch up with them within the lifespan of the upcoming consoles, but even that's questionable. -

Actually, I want to correct you on an earlier point, HTWingnut. The 480M was a terrible failure as far as high-end releases go. They made a mistake and translated Fermi badly to mobile, and the cut-down 470M beat it. Then the 485M was the true flagship, unlike the difference between the 280M and 285M in the past. I also find it worth noting that the 580M and the 560M were close enough in power to have a midrange/high-end separation without making the midrange totally obsolete. My friend with a 560M can crank up the graphics in a lot of current games and get playable frame rates at 1080p. The problem is that nVidia's entire product line was built on their midrange core, the GK104. Their desktop line struggles to find a midrange card, and their laptop line gets the worse end of it. Hell, look at the GTX 670 versus the 660Ti. They're THE SAME CARD, except the 660Ti has a 192-bit memory bus instead of 256-bit. They had no idea how to make affordable-yet-strong cards without flat-out watering them down. The desktops didn't get much watering down; the laptops did. Even the 675MX is only, what, 2/3 as strong as a 680M?

Anyway, it'll still be very, very interesting when the next gen of cards comes out, though I don't believe there'll be a MASSIVE change like Fermi --> Kepler was. I'd say flagship Kepler --> entry-level high-end Maxwell, unlike how a 660Ti compares to a GTX 590 or something >_<. -

Karamazovmm Overthinking? Always!

Not necessarily. I actually mentioned Kepler as the saving grace in my other post, and in this one as well; still, going from the 300M to the 500M series was very pathetic, and if you count the 4670M it was even worse.

So your point is that the mid range will die, and only the high end will keep moving? Or that they'll both develop something for the mid range that's on par with iGPUs? That would inherently kill the mid range as well. Or that they'd try to compete on price because Intel CPUs are expensive, while offering close performance? That won't end well either.

In the end, they need to push more performance; that's the whole point of their existence. If your raison d'être is taken from you, you're gone.

It's only logical to concede the low range to iGPUs and invest in reconquering the mid range. The problem here is resolution: 720p was OK for mid range before Kepler, now 1080p is; high-end desktop has moved beyond that to quality multi-monitor gaming, or 1440p/1600p gaming. -
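The resolution problem is easiest to see in raw pixel counts. This sketch compares each resolution mentioned in the thread against 720p, assuming (as a first-order simplification) that GPU load scales with pixels per frame:

```python
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "1600p": (2560, 1600),
    "4K UHD": (3840, 2160),
}

base = 1280 * 720  # 720p pixel count used as the reference
for name, (w, h) in RESOLUTIONS.items():
    pixels = w * h
    print(f"{name}: {pixels:,} pixels, {pixels / base:.2f}x 720p")
```

1080p is 2.25x the pixels of 720p, 1440p is 4x, and 4K is 9x, so an IGP that is fine at 720p has a long way to go.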

I do agree with this. We're still struggling to max games at 1080p 60fps, when 120Hz and 144Hz 1080p screens are out and 1440p is now emerging in the 60Hz realm. I place a lot of blame on video game developers for not optimizing their stuff, but I do admit some things should require lots of power. As much as I rag on Crysis, AND AS MUCH AS THEY CAN IMPROVE THEIR ENGINE'S OPTIMIZATION, Crysis 3 still blew people's minds. Even in livestreams it looks amazing. Hell, I was watching a stream thinking "well huh, the game does look pretty," then I'm told that was on low settings. Like.... *kapush* went my mind.

I think there needs to be a clear-cut high end, and midrange needs to be a good step back: 50%-66% of the power of the flagship or so. 70%-85% could be the entry-level high end and onward, with 100% being the flagship. The low end needs to step its game up, is what. See that 660M we've got? That kind of gaming power needs to be low end in the next couple of years. Try running any game from 2014 onward on that at 1080p or, god forbid, 1440p and see how high you can turn up the settings. If Intel can provide proper low-end performance and take that share of the market, and nVidia's and AMD's low-end cards end up being just a bit better, or there for people who only need CUDA/DirectCompute performance (e.g. someone wants to run Photoshop a ton but doesn't want to game, so they buy an nVidia card for CUDA), then we'll end up perfectly fine, methinks. When I got my HP in 2008 with an Intel X3100 I could run a LOT of stuff. New games like DMC4 were unplayable, yeah, but I could play a lot of PS2-era games, UT 2004, etc. on that thing. It wasn't so bad. But now the gap is so huge it's terrible.

I just realized I'm going on a long-winded post now and I've possibly lost my intent, so I'll stop. =D. -

Karamazovmm Overthinking? Always!

Mid range is usually 10% or less per step, high end is 20%, and then we get to the flagship, which is absurdly more powerful than the last bracket.

I prefer 20% brackets: each model number up gives you 20% more. Simple and easy.

So that means 840M +20% --> 850M +20% --> 860M +20% --> 870M +20% --> 880M. -
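Because the 20% steps compound, the top of that proposed lineup ends up at 1.2^4 times the bottom. The model names below are the hypothetical ones from the post, and the uniform 20% step is the poster's proposal, not an announced spec:

```python
STEP = 1.20  # the proposed 20% gain per model number
models = ["840M", "850M", "860M", "870M", "880M"]  # hypothetical lineup from the post

relative = {m: STEP ** i for i, m in enumerate(models)}
for m, r in relative.items():
    print(f"{m}: {r:.2f}x the 840M")
```

Four compounded 20% steps put the 880M at about 2.07x the 840M, so the flagship would still be roughly double the entry part.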

Broadwell will bring a new iGPU architecture (probably another doubling of performance), but in turn it will compete against Maxwell.

Chances are Intel will, from now on, be lighting a fire under AMD's and Nvidia's asses when it comes to low- to mid-range GPUs.

As long as Intel's iGPUs keep pushing for more performance year after year, I'm OK with it.

Hopefully competition translates into better products at lower prices, and consumers win.

-

So since GT3e/Iris Pro quads won't be released before Q3 2013, only a few months before Kaveri's launch, in June we'll probably only have these 28W Iris/GT3 parts against the 35W A10-5750M Richland. While GT3e will obviously beat Richland, I'm not sure classic GT3 will. They should be on par in benchmarks according to early numbers. Richland is claimed to be "up to 80% faster than HD 4000", while Intel's graphs show GT3 at 120% faster than HD 4000 in 3DMark11. Slight advantage to Intel?
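If both marketing claims used the same HD 4000 baseline and the same benchmark (a big assumption, since AMD's figure is an "up to" number and Intel's is 3DMark11-specific), the implied GT3 lead over Richland is the ratio of the two multipliers:

```python
richland_vs_hd4000 = 1.80  # "up to 80% faster than HD 4000" (AMD claim)
gt3_vs_hd4000 = 2.20       # "120% faster than HD 4000" in 3DMark11 (Intel slide)

# Assumes both claims share the same HD 4000 baseline, which is not guaranteed.
gt3_vs_richland = gt3_vs_hd4000 / richland_vs_hd4000
print(f"GT3 vs Richland: {gt3_vs_richland:.2f}x")
```

That comes to roughly a 22% edge for GT3, consistent with the "slight advantage" guess.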

Edit: I've just seen the P1400 screenshot for GT2 on the previous page. Obviously, Intel will win this round. -

It will be, easily. Heck, the 730M/735M are basically the 640M/650M and are the "low end" of their lineup. If you look at my previous post showing performance history over time, that's the way the trend is going. Heck, even the Llano IGP from Jan 2011 is stronger than the 9800M "flagship" GPU released less than a couple of years prior, and Trinity is on par with the current 630M. I think people don't realize how quickly GPU tech actually evolves, and how quickly this stuff gets outdated. That's why anyone who wants to "futureproof" their laptop, intends to keep it for four years, and buys a mid-range GPU ends up wanting a lot more in two years' time.

And by the time GT3e is finally released (which won't be June 3; indications show "Q3 2013"), the nVidia 700M series and Radeon 8000M series will be in full force and make it look quite weak. nVidia's "low end" 730M will likely be better by at least 30-40%. And that's without an architecture change. Wait until Maxwell. Intel and AMD will both have a LOT of catching up to do. It will make the 680M look like weak sauce.

IGPs replacing dedicated GPUs has always been Intel's mantra, and people followed that drumbeat like it was fact. No way can a single 45W TDP CPU+IGP replace a dedicated GPU with its own 45W TDP. Just not gonna happen. Where Intel is going with GT3e is where they should have been two years ago. And it takes a high-end chip sacrificing CPU performance to get there, and it's still barely competing with AMD's older tech on a larger 32nm fab process, at 35W no less. It's just funny how Intel is bringing in an IGP in 2013 with a 3DMark11 score of 1300-1400 and everyone is all pomp and circumstance, when AMD did that a year ago with Trinity, and at 35W as well. When AMD sacrificed CPU performance to get there, people slammed them. Intel does it (dropping 400-500MHz to add the dedicated vRAM) and it's going to "crush the low end market". Whatever. -

Don't expect Kaveri mobile before mid-2014.

-

Based on what source?

-

Based on AMD's claim and some logical thinking.

shows and expos Reviews | PC Perspective

What does that mean? Richland shipments started late in 2012. Do you see Richland notebooks available? I guess you don't. From the time mobile CPUs ship, it takes 3-6 months until we see notebooks in stores. Kaveri mobile shipping sometime in 2014 means we won't see Kaveri notebooks in stores before mid-2014. Also keep in mind Kaveri introduces some new stuff into the APU, which makes it prone to delays: 28nm, GCN, Steamroller. Of course, desktop Kaveri should come way sooner if there's no additional delay. -

If it comes out in 2014 then they will fall behind Intel again.

Since Haswell GT3e is looking to beat Richland by a large margin, pushing Kaveri out to 2014 means it will have to compete almost directly against Broadwell. So not only will Kaveri have to make up ground lost to Haswell, it will also have to fight Intel's Broadwell, which will have DDR4 and a brand-new architecture. Not to mention it will be Broadwell at 22nm vs. Kaveri at 28nm.

So they'd better release Kaveri this year, or else it won't be pretty. -

I do have to agree with you on this point. And AMD's problematic driver issues are a lot less likely to happen with fully-AMD machines, as bad as that may sound. Only their fundamental driver issues (such as random CFx stuttering, which wouldn't even apply to most of those machines) would be present, and that isn't much to worry about on its own.

I do feel that sacrificing CPU power for the iGPU is annoying when people are looking to move forward and advances are stagnating due to the mixing of two technologies that nobody (that I know of, at least) asked to have mixed. APUs, I suppose, aren't meant to be high-end CPUs, so I'm fine with that. Being bottlenecked by one of them is common if you throw a high-end GPU in there, sure, but if you think about it, for light gaming/media it's perfectly fine. Intel is sacrificing their highest-end CPU advancement and OC potential (even almost completely wasting a die shrink with Ivy Bridge desktop) to bring in iGPUs. And then the best iGPU only existed on the highest-end CPUs, with the lower-end ones (which are the most likely to be used standalone) left with weaksauce. And they're doing it again with Haswell. Not to mention that there were only two chipsets when it came out, and one of them allowed you to SLI/CFx while the other disallowed SLI/CFx in favour of letting you use the iGPU. Which nobody used. I don't know what Intel's doing, but they need a solid shake-up from AMD, and it'd better happen soon. I think AMD kind of faltered with its 7000 series Radeons and its Bulldozer CPUs, and nVidia and Intel kind of got lax as a result. At least their FX8350 outshines Intel's i7s for gaming above 1080p and livestreaming; they went on the right track with that one, even if it draws more power and produces more heat.

I want to see some proper competition again. I just wish AMD would step their game up. I hold no particular allegiance to any one company; I'm going to get what I feel is best. Right now, nVidia's stability, driver attitude and treatment of the mobile market make them worth the money to me. Intel's doing right by the mobile market too, and AMD's not putting out anything for us (at least not high end, anyway), so Intel + nVidia are my logical choices. But if I hear someone wants to build a PC to stream with, right away I tell them FX8350. I wanna see some good AMD competition. We gotta force the companies to give us their best at competitive prices. nVidia's planned midrange GPU core ending up as their flagship because of lackluster performance from the competition just can't happen again. -

I don't understand AMD's strategy; they could have made a splash by releasing Kaveri this year. Instead, they'll be eclipsed by Broadwell. Same story again and again, and it's really getting old. Those who were waiting for Kaveri will buy Intel.

-

Actually, going by Intel's current tick-tock roadmap/timeline, Haswell should be the refined 22nm process (as Ivy Bridge was the pioneer based on their Sandy Bridge 32nm successes), so Broadwell should be the pioneer for the next die shrink, and whatever's coming after Broadwell (Skylake I believe?) will be the refined architecture for that die shrink.

-

Broadwell is supposed to be 14nm, I think. 14nm vs 28nm, haha.

Temash/Kabini are already produced at 28nm and are supposed to hit the market in June, so why can't Kaveri be released earlier? It's like AMD doesn't care about mainstream notebooks/desktops anymore, only about tablets, netbooks... and the PS4 lol. -

For most of its lifespan, Kaveri mobile will be a Broadwell competitor, that's for sure. Worrying for AMD, since Broadwell is supposed to bring bigger GPU gains than Haswell, according to an engineer from Intel.

jecb comments on IAmA CPU Architect and Designer at Intel, AMA.

Broadwell is a 14nm product by the way. -

You guys are right, sorry, I was wrong: Broadwell will be 14nm. Ouch, that's 14nm Intel against 28nm Kaveri.

Can you imagine the transistor difference between 14nm and 28nm? That's not even fair. -
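In the idealized textbook case, transistor density scales with the square of the linear shrink, so halving the feature size quadruples density. This sketch assumes node names are true feature sizes, which they increasingly are not, and node names from different foundries aren't directly comparable, so the real gap can be quite different:

```python
def ideal_density_gain(old_nm: float, new_nm: float) -> float:
    """Idealized area scaling: transistor density grows with the square of the linear shrink."""
    return (old_nm / new_nm) ** 2


print(f"28nm -> 14nm: {ideal_density_gain(28, 14):.1f}x")
print(f"28nm -> 22nm: {ideal_density_gain(28, 22):.2f}x")
```

On paper that's up to 4x the density at 14nm versus 28nm, and about 1.6x at 22nm.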

But manuf. process reduction is not everything.

AMD's 28nm, for example, will be more densely packed (more efficient in its use of available space) than Intel on 22nm or even 14nm.

Granted, 14nm is still a sizeable gain in manuf. process reduction.

Ultimately, we don't know how things will unfold just yet.

With AMD gaining ground on consoles, HSA, etc., it's possible this could give them the leverage they need with manufacturers to release Kaveri on mobile faster (and with decent offerings), and subsequently just skip 22nm and introduce 14nm.

Having said that... in the desktop area, AMD's offerings are relatively close to Intel's (especially on price/performance) as things stand, even with the big difference in manuf. process. -

Iris Pro Graphics (GT3e) has been listed at Notebookcheck. Do you guys agree with where they've put it? Just below the GT 555M? Or should it be higher?

Intel Iris Pro Graphics 5200 - Notebookcheck.com Technik/FAQ -

I'd say that's about fair.

-

Karamazovmm Overthinking? Always!

Depends. The 555M is basically the 630M or 635M; we'll have to see the fluctuations in games due to memory constraints.

The problem with Trinity was the CPU power, and as you know, HT, people tend to prefer Intel. In most cases there's no technical reason; the explanation is marketing, or AMD's complete lack thereof. For me even the FX line doesn't cut it for the games I play; their minimum fps are too low. I sheepishly installed Shogun 2: TW on my new server.

I have 2 Titans in there, with 2 SB-E CPUs. Now that's gaming with overkill. -

Yes, this is true; however, more closely packed = more heat. The CPU could get hotter as it's packed in tighter while the GPU takes up more room. Sometimes leaving space open is what's necessary to get the best out of a chip in terms of its performance/chill-factor ratio.

-

Possible, but AMD will make Kaveri/Steamroller on a 28nm process, probably offsetting potential additional heat (still, regardless of that, a smaller manuf. process can easily mean more heat as well... the more transistors one puts in, the more heat is generated). That, coupled with the plenty of optimizations/fixes they've said we'll see, means it's possible they can drop raw GHz while still coming up equal to Intel's offerings.

We know that IPC enhancements, L1 cache sizes, decoder optimizations, shorter pipelines and more FPUs individually don't mean much, but a combination of all of those 'might' (I repeat, 'might') allow for sufficient headroom for AMD to catch up to Intel in CPU performance at lower clocks than now, while at the same time surpassing them in GPU performance.
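The "drop GHz but stay equal" idea follows from the simple single-thread model performance ≈ IPC × clock. The numbers below are hypothetical, chosen only to illustrate the arithmetic; the model also ignores memory latency, turbo behaviour and multithreading:

```python
def clock_for_parity(target_perf: float, ipc: float) -> float:
    """Clock (GHz) needed to match `target_perf`, using the simple model perf = IPC * clock."""
    return target_perf / ipc


# Hypothetical numbers for illustration only (not measured figures).
baseline_ipc, baseline_clock = 1.0, 4.0  # arbitrary IPC units, GHz
target = baseline_ipc * baseline_clock   # performance to match
improved_ipc = baseline_ipc * 1.25       # assume a 25% IPC uplift

print(f"Clock needed: {clock_for_parity(target, improved_ipc):.2f} GHz")
```

In this toy model a 25% IPC gain would let a 4.0GHz design drop to 3.2GHz at equal performance.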

Right now, we are still fairly limited in how much we know or what will happen, so the only reasonable thing to do right now is to wait and see. -

Karamazovmm Overthinking? Always!

Hoping for AMD to catch up with Intel's quads is a stretch; it needs such a jump in efficiency that a design like that would leapfrog products that have been in their pipeline for a few years.

-

What do you base that on? Have you looked at Intel's drawings and specifications for Broadwell (or even Kaveri)? How do you know AMD's 28nm will have more transistors than Broadwell at 14nm? I call shenanigans.

I've looked at the GPUs listed above. The GT 555M actually scores the same in 3DMark11 as the GT2 (HD 4600) result I posted earlier. It's just a benchmark, though. I wonder how they compare when real games are tested, at 1080p.

--------------------------------------------------------------------------------------------------------------------

AMD Richland vs

Intel Haswell with GT2

-

That Richland result is unlikely to be a real score. At least once drivers are released for it, it will likely score much better. Intel iGPUs are being manufactured right now, so I'd hope that score is more representative of day-1 performance. The Trinity 7660G can match that Richland score.

And the GT 555M with mature drivers scores well over 1500, but that's really splitting hairs. The 555M is really the 630M/635M, which is low end. -

In 3DMark11 the A10-4600M scores 50% higher than the HD 4000, but in games it's only 20-30% higher. 3DMark can't give us the whole picture.
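One way to quantify that gap is to ask how much of the synthetic lead survives in real games. This sketch uses only the percentages quoted in the post; "share of the lead" is simply the game lead divided by the 3DMark11 lead:

```python
synthetic_lead = 0.50           # A10-4600M over HD 4000 in 3DMark11, as quoted
game_lead_range = (0.20, 0.30)  # A10-4600M over HD 4000 in games, as quoted

for lead in game_lead_range:
    share = lead / synthetic_lead
    print(f"{lead:.0%} in games = {share:.0%} of the 3DMark11 lead")
```

So only about 40-60% of the 3DMark11 advantage shows up in games on these figures.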

-

Why would it be fake? You can see it's a screen dump from an MSI notebook. Maybe you're right about the 555M scores, but according to NBC, the GT 555M scores from 1000 to 1318 points, exactly what the HD 4600 does. But I'm still not sure how it would play out in games. I think the 555M will come out victorious there.

Haswell gt3e to crush Nvidia and Amd low end market gpus ?

Discussion in 'Gaming (Software and Graphics Cards)' started by fantabulicius, Apr 11, 2013.