I just tried it with my Radeon 8750M and it is mining at 102 kHash/sec. Unfortunately I don't have my power meter here, but I assume power consumption for the whole laptop is no more than 50W, or maybe even under 40W. Hope you will try too, I'm curious how good the speed is on the 7730M...

Update: I found a mining speed database, and according to it my little Radeon is close to the GeForce 680M's speed.

-

Which one did you try, Litecoin?

-

Wait, what? Didn't you buy the 8970 because of price/performance? Seems it is getting them sales.

-

Yes, Litecoin. I'm running it for a day, curious how much money it can earn...

-

It does, but still not as much as nVidia... simply because most people want ultimate power.

Or maybe some people just have too much money.

-

Is your 8750M stock or overclocked?

Edit: And also are you using GUIminer or CGMiner? -

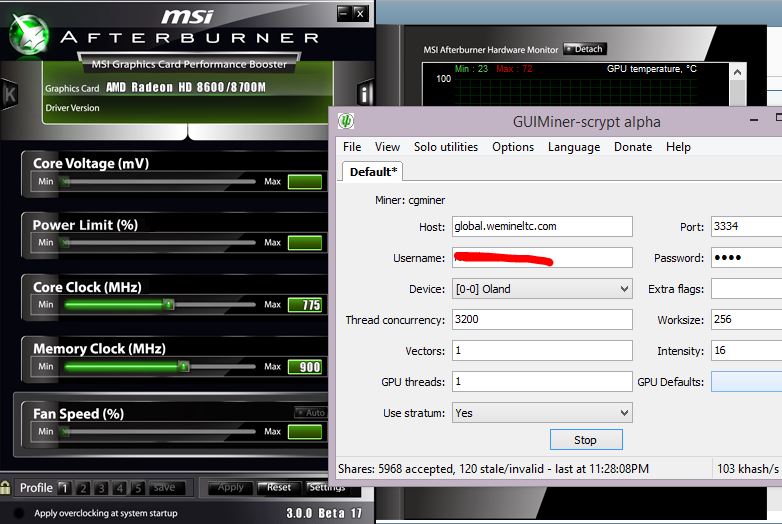

GUIminer Alpha is what I am using. What is interesting is that I cannot overclock at all, and even my stock 825MHz GPU frequency falls back to 775MHz. I think GUIminer hits the GPU very hard, much harder than a game.

-

I get about 96 kHash/sec from GUIminer, but different numbers, from 85 all the way to 120, on the Litecoin website. I had AMD System Monitor running and my GPU usage was 99% at 575MHz (supposedly what it's clocked at, though some variants of the 7730M are clocked at 675MHz, so maybe I should overclock it). Unlike in gaming, the laptop stays cooler and quieter, so maybe the 25W TDP I saw somewhere online is accurate. Probably what's holding it back is the 128-bit 900MHz/1800MT/s DDR3 RAM, while the 7750M has 128-bit 4000MT/s GDDR5; the difference in memory bandwidth is 28.8GB/s vs 64GB/s.

Also, what server are you using? Mine is US server 3334. -
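For anyone wondering where the 28.8GB/s and 64GB/s figures come from, here is a minimal sketch of the usual peak-bandwidth arithmetic (bus width in bytes times transfers per second). The function name is made up for illustration, and these are theoretical peaks, not measured throughput.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, transfer_rate_mt_s: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8          # 128-bit bus -> 16 bytes per transfer
    transfers_per_second = transfer_rate_mt_s * 1e6  # MT/s -> transfers per second
    return bytes_per_transfer * transfers_per_second / 1e9


# Figures quoted in the post above: both cards use a 128-bit bus.
ddr3 = memory_bandwidth_gb_s(128, 1800)   # DDR3 at 1800 MT/s
gddr5 = memory_bandwidth_gb_s(128, 4000)  # GDDR5 at 4000 MT/s

print(f"DDR3:  {ddr3:.1f} GB/s")
print(f"GDDR5: {gddr5:.1f} GB/s")
print(f"Ratio: {gddr5 / ddr3:.2f}x")
```

So at the same bus width the GDDR5 card has a bit more than twice the peak bandwidth, which matters because Litecoin's scrypt hashing is memory-heavy.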

Haha, I wish that was a problem of mine...one can dream

-

Here are my exact settings:

I do not know if these are the ideal settings, but raising the intensity above 16 did not really help to gain more performance. I guess this is my Radeon's best...

I think GUIminer reports the correct kH/sec; the Litecoin website shows me different numbers as well, sometimes even 150 or just 80. -

I experimented with the intensity a bit and found that 15 generated shares the quickest and hash rate was still maxed. Also wow 6000 shares, did you have it running for 40 hours straight?

-

Yeah, I have let it run for quite a while. It did not generate much extra heat or noise, and the laptop did not become slower because the CPU stayed free. I think it is OK when the GPU is working in the background... I'll try intensity 15 as well..

Update: OK, with intensity 15 I got back my overclocking ability, and with 1000/1125MHz I got 131 kH/sec. However, I do not want to run at this speed; 100 is what is comfortable for this chip. -

Guys, here come the new Maxwell GeForce cards:

GeForce GTX 880M 8 GB / N15E-GX-A2

GeForce GTX 870M 6 GB / N15P-GT

GeForce GTX 860M 4 GB / N15P-GX

GeForce GTX 860M 2 GB GDDR5 / N15P-GX

GeForce GTX 850M 2 GB DDR3 / N15P-GT

GeForce GTX 840M 2 GB DDR3 / N15S-GT1

Very excited, fingers crossed. Wow, 8GB, NVidia knows something! -

8GB??

Is that really necessary lol -

No.

Actually 8GB is system RAM not VRAM according to that WCCFtech article. -

N15E-GX-A2 is a higher clocked K5100M so what exactly is the excitement?

-

Nice re-brands. I wonder why these cards are generating so much excitement? Then again, maybe I just cannot understand, because I have not been excited about Nvidia GPUs since the end of 2000.

-

Nor have I been excited about an AMD CPU since the Athlon 64 ^^

-

He's talking about GPU's not CPU's. But I agree. Athlon 64 X2 was the last AMD CPU I owned. I've been in the Intel camp ever since.

-

I guess that makes a lot more sense, not really seen system RAM specification on a GPU before.

+1

AMD CPUs are, and have been, in the shadow of Intel's power for a long, long time. -

First working game engine based on Mantle, live presentation (starts at 25:40):

Oxide Games AMD Mantle Presentation and Demo - YouTube

Source:

AMD Mantle Demo - Game still GPU-bound with CPU cut in half to 2GHz

Looks VERY promising IMHO.

-

Pfft on the demo. Run 64-player multiplayer BF4 on a GX60/GX70, then there is something to discuss.

-

Not sure why you're being dismissive of the demo, as there's probably an order of magnitude more draw calls in there than at any point in BF4. The Mantle patch for BF4 should be dropping real soon, so we'll definitely be hearing from the MSI GX60/70 owners in short order.

-

This is not system's but GPU's memory. It is for vRAM and caching.

-

It looks and runs great, yada yada, but there is no control to compare it to and it is hardly real-world (practical).

-

That's not the point. It's a Nitrous Engine tech demo and Mantle proof-of-concept. Did you miss the Mantle vs. D3D comparisons later in the video?

"Hardly real-world and practical"? Give me a break. People are developing upcoming AAA games using the technology shown here. You must be new to this thread. -

X2 3800+ myself, still going strong, but the need for a laptop made me go into Intel's territory.

-

The point is that it really shows nothing that makes gaming better. Lower CPU use? Great, but the CPU really doesn't play much of a role in today's AAA games (unless it's an APU setup). What is an upcoming AAA game going to do with even more CPU headroom?

So excited. -

AAA's care about graphics only, so, nothing.

-

I think games such as BF4 will see a significant performance increase from this technology. Battlefield is probably just as CPU-dependent as it is GPU-dependent.

-

You see the tens of thousands of objects on-screen during that demo? That's not possible in any current game today because of D3D's small batch problem. I don't care if you have an i9-9999XXX or whatever, that number of draw calls would cripple any CPU and cause a severe bottleneck due to the API overhead. So while your quad-SLI GTX Titans may be perfectly capable of rendering all that, the CPU chokes on the amount of draw calls and simply can't tell the GPU about what to draw fast enough, bottlenecking it and causing low FPS. So in the end, it isn't about the graphics card; it is about the CPU cost of performing draw calls.
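To make the bottleneck argument concrete, here is a toy model, a sketch only: the frame takes as long as the slower of "CPU time spent submitting draw calls" and "GPU time spent rendering". Every number below (10,000 draws, the per-draw costs, the 16 ms GPU time) is a made-up illustration, not a measurement of D3D, Mantle, or any real hardware.

```python
def frame_time_ms(draw_calls: int, cpu_us_per_draw: float, gpu_ms: float):
    """Return (frame time in ms, limiting side) for a simple two-stage model."""
    # CPU cost grows linearly with the number of draw calls submitted per frame.
    cpu_ms = draw_calls * cpu_us_per_draw / 1000.0
    if cpu_ms > gpu_ms:
        return cpu_ms, "CPU-bound"
    return gpu_ms, "GPU-bound"


DRAWS = 10_000   # hypothetical draw calls per frame
GPU_MS = 16.0    # hypothetical GPU render time per frame

# Hypothetical per-draw CPU costs in microseconds.
high_overhead = frame_time_ms(DRAWS, 10.0, GPU_MS)      # expensive API path
low_overhead = frame_time_ms(DRAWS, 0.5, GPU_MS)        # thin API path
low_overhead_half_clock = frame_time_ms(DRAWS, 1.0, GPU_MS)  # same, CPU at half speed

for name, result in [
    ("high overhead", high_overhead),
    ("low overhead", low_overhead),
    ("low overhead, half clock", low_overhead_half_clock),
]:
    print(f"{name}: {result[0]:.1f} ms per frame, {result[1]}")
```

In this model, once the per-draw cost is small enough, even doubling it (a rough stand-in for halving the CPU clock) leaves the frame GPU-bound, which is the behavior the demo claims to show.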

Mantle gets much closer to the metal, removing that overhead and allowing almost an order of magnitude more draw calls per second. That means not just higher FPS but more things on-screen at the same time, more subtle details, and overall much higher quality. With Mantle and our über PC hardware we could potentially have many more unique meshes in a typical game scene instead of endlessly duplicated trees, grass, and buildings, which is what we get today because geometry instancing is one of the most common optimizations for reducing draw calls.
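A rough way to see why instancing pushes scenes toward duplicated assets: in the simplified sketch below, one draw call is issued per object without instancing, and one per unique mesh with it. The scene contents and function names are invented for illustration, and the model assumes objects sharing a mesh also share material and render state, which real engines cannot always guarantee.

```python
def draw_calls_without_instancing(scene: list[str]) -> int:
    """One draw call per object in the scene."""
    return len(scene)


def draw_calls_with_instancing(scene: list[str]) -> int:
    """One draw call per unique mesh; all copies are drawn as instances."""
    return len(set(scene))


# Hypothetical scene built from a handful of repeated meshes.
scene = ["tree"] * 500 + ["grass"] * 2000 + ["building"] * 40

naive = draw_calls_without_instancing(scene)
instanced = draw_calls_with_instancing(scene)

print(f"Without instancing: {naive} draw calls")
print(f"With instancing:    {instanced} draw calls")
```

The saving only exists because the meshes repeat. A scene with thousands of unique meshes gets no help from instancing, so cheaper draw calls are what would make that kind of variety affordable.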

What's amazing about that demo is that even with all that stuff happening, underclocking the FX-8350 to 2 GHz resulted in no loss in frame rate, and the demo was still GPU-bound. This speaks to Mantle's efficiency, as removing the driver thread required by DX and its associated overhead frees up so much CPU time that even cutting the CPU frequency in half did not impact the game whatsoever. The implications of this could be huge, as the developer could utilize all that unused CPU time to improve their game in areas besides rendering, such as more advanced AI, physics, and audio. Or they could lower the CPU requirement. -

The high overhead of draw calls and resulting CPU bottlenecks are things we see in PC games all the time. What do games such as PlanetSide 2, Battlefield 3/4, Rising Storm/Red Orchestra 2, Crysis 3, StarCraft II, and Arma II/III have in common? Performance in all of them is very much bottlenecked by the CPU. Why? Because we either have an incredible amount of stuff on-screen at the same time, e.g. Battlefield/Red Orchestra 64-player multiplayer and PS2/SCII Zergs. Or incredibly complex assets, e.g. Crysis 3. All of which substantially increase the number of draw calls.

Because draw calls are so expensive, PC game developers have been reducing graphical quality for years as an "optimization" to mitigate CPU bottlenecks:

But despite the developers' best efforts, some games such as PlanetSide 2 and Rising Storm continue to suffer from horrendous performance issues directly related to this. -

Why don't today's games look much better than Crysis 1, if at all? GPU's have improved by leaps and bounds since 2007 but improvements in graphics haven't kept pace at all because GPU advancements have been outpacing CPU advancements for years, and that CPU draw call ceiling continues to hold back graphical complexity even as our GPU's become increasingly powerful.

It's pretty sad that even previous generation consoles such as the PS3 and Xbox 360 regularly push many times more draws than the typical high-end PC. Our obviously much faster hardware covers a multitude of sins, so we've been able to stay competitive by "brute-forcing" it, but it's about time games started actually taking advantage of our vastly superior hardware instead of fighting against it.

It's a very good thing that the PS4 and XBONE turned out to be as slow as they are. Had this console generation been a repeat of the last one with console hardware vastly outclassing PC hardware of the time, the "next-gen" consoles would've, if not outright killed, left PC gaming for anything outside of MMO, RTS, TBS, and "Facebook games" on life support. When the consoles are blowing you out of the water draw call wise, meaning the PC can't match a console in "amount of stuff on-screen," plus they're easier to develop for, have better toolsets, generate more profit, and suffer from none of the problems plaguing PC such as moving hardware targets, high draw call overhead, driver problems, and scalable requirements, why would any game developer in its right mind make games for PC? Thank goodness for Mantle.

A few years ago when PC gaming was at its lowest point, Microsoft and IHV's were playing the blame game and there were rumors of ditching DX altogether and going "to the metal." It seems likely that Mantle is what eventually came of it, as AMD remains adamant that Mantle exists not because of its dual console wins but because game developers have been asking for a low-level API for years. -

Only way to know for certain is to render both side by side. It's easy to show a tech demo and say "ooh ahh" but unless you throw up another machine using current tech, it means nothing. I would have been more impressed if they showed this demo and then said now here's the same demo sent to a third party to optimize for Direct3D running on the same hardware and there was a noticeable difference. And there are different techniques to achieve the same or similar result. So until we get actual games or playable demos to trial ourselves, it's still just a pipe dream. I'm a big advocate for AMD, and want this to succeed, but like anything else, they have to prove it.

-

Usually, yes, but not this time. We've never heard of the batch rate in any implementation for any purpose.

-

Meh, wake me up when they showcase a game that doesn't look like it's from the 90s. I'm pretty sure I have seen games similar to that run on a server, those multiplayer games you just log in to a website to play.

All those units on screen at the same time don't impress me. Planetside is an example of a game that features thousands of gamers battling on a single server, with graphics way better than that little Mantle tech demo. It runs on DX and doesn't require a beast of a machine to play.

I'm with HTWingNut. No point in showing a demo if they can't compare live. I'm pretty sure they could have easily had that game running on DX and done a split screen with Mantle to show FPS, CPU utilization and such, to give us a better idea of what the ramifications of Mantle really are. -

That demo reminded me of Homeworld 2:

<iframe width='480' height="360" src="//www.youtube.com/embed/AbrFcTfNHEI" frameborder='0' allowfullscreen></iframe>

<iframe width='480' height="360" src="//www.youtube.com/embed/YpcfbRXjrhk" frameborder='0' allowfullscreen></iframe>

-

That was spot on lol

-

ROFL you gotta be joking right? Have you even played PlanetSide 2? It's one of the worst optimized games ever. It very much requires a beast machine to play without massive FPS drops when things get busy. More specifically, a beast CPU like an i5/i7 @ 4.5-5 GHz because the game only cares about single-threaded CPU performance and is almost never GPU-bound.

Yeah 2000 players per continent and 6000 per server sounds impressive, but hitting the continent pop cap almost never happens outside of a select few servers during prime time, to say nothing of the server pop cap. And there are never thousands of players on-screen at the same time. Anyone who says that hasn't played the game. Vehicle and infantry render distance (not to be confused with terrain draw distance which can be altered) is capped at a few hundred meters. Anything further than that and they simply disappear. Most fights are 48 vs. 48 CQC inside bases or at most a few hundred infantry, armor, and air Zerging outside a Bio Lab or Tech Plant.

Plus with O:MFG the devs have been deliberately removing objects from the game and dumbing down the graphics, e.g. removing PhysX and toning down ambient occlusion. Objects and players disappear and pop-in even in CQC because of the overaggressive occlusion culling. But despite all these optimizations to reduce draw calls, the game still suffers from pisspoor performance and CPU bottlenecks. SLI/CrossFire don't work and the game is still essentially single-threaded because the D3D9 renderer limits draw calls to one CPU core. Even WoW moved to DX11 a few years back for CPU optimization reasons but PS2 has yet to get with the times.

It's ironic you should mention PS2 in a Mantle thread, because Mantle would solve most of this game's evils as they relate to performance and allow it to truly take off.

EDIT: One last thing I should mention is that completely turning off the UI (made using that POS called Scaleform AKA Adobe Flash) still gives me an extra 20-30 FPS even after O:MFG supposedly "optimized" it by dumbing it down and making it look butt-ugly. This game is just a laughingstock of poor development and optimization. They expect me to make the game unplayable (turning off the UI) to make it "playable" (higher FPS).

-

Yes, Planetside 2 does enjoy fast CPUs, no doubt about that. But it seems to run fine on an i3, and looking at the GPU chart, it also clearly uses the GPU.

I have never played the game, just seen videos. Although it probably has its limitations on player numbers to preserve resource usage, and it has poor optimization like you explain, you can't disagree that it looks a ton better than that tech demo, right? Like HT showed, it's just like those simple massively multiplayer games that you play through the browser. Therefore, the fact that they could cut the CPU clock down to 2GHz without having issues doesn't exactly amaze me.

"because Mantle would solve most of this game's evils as they relate to performance and allow it to truly take off."

As much as I'd love a better API, I want to see that in FPS and in comparison with other DX systems first before jumping up and down in joy. They had a chance to do that in the presentation to amaze us as well as the press, but chose not to for some reason.

-

Not a browser game, but close enough. Homeworld and Homeworld 2 were from 1999 and 2003, and had hundreds of units fighting independently.

-

PS2 has no built-in benchmark, and being that it's an MMO, it's impossible to do consistent and repeatable tests within some reasonable margin of error. And then there's the whole network issue, as desync between server and client frame rates can further skew data. Just way too many variables. I call BS on those benchmarks.

Of course I think the Starswarm demo is more impressive than PS2. PlanetSide can't come close to matching it in amount of stuff on-screen, hardware utilization, and performance. PlanetSide 2 may have impressed you more through those videos you watched, but you've never actually played the game, so you're only qualified as an armchair expert at this point. I've experienced it firsthand, tweaked the game endlessly on my PC's, and have been following its development for a while.

And where was browser MMO mentioned? Homeworld 2 is an RTS. I hope nobody is dumb enough to compare a tech demo like Starswarm run on a local machine to a browser game whose processing is all done remotely.

Can you say Battlefield 4? -

I was speaking generally. That tech demo looked like a browser game, and I don't care that it's processed by a server. Those games are so graphically garbage that you can run hundreds of clients on a simple server.

.....which has never been showcased. No FPS data has been released and they still haven't put out the update that enables it. Almost 2 months have passed, and their claim that Mantle is easy to incorporate into games loses value with each day that passes.

Meh, we will talk when they show us real demos and benchmarks. Right now it's just words. -

I think the BF4 devs have a few other higher priority issues on their hands than bringing out mantle for a small number of users.

-

Like fixing their game

they say it's crashing like hell !

-

When it comes to Mantle, I have to ask, "Where's the beef?"

-

Mantle is so awesome with Battlefield 4. Like OMG

<iframe width='853' height="480" src="//www.youtube.com/embed/mHaYjVJaQqE" frameborder='0' allowfullscreen></iframe>

-

Cheers Cloudfire, I just wasted 10 mins of my life working out whether or not that was fake! ;-)

-

I know that a recorded and uploaded video cannot show exactly what the gamer actually experiences, but IMO those 75 FPS with Mantle OFF look much more constant and fluid than the jerkiness with Mantle ON. It reminds me of FEAR 3, where Fraps shows me 60 FPS with sync enabled while I can clearly see that the real frame rate often drops to 15 whenever I turn the main hero to look 90 degrees right or left.

At this time I don't buy it. -

Pssst video is a fake and a troll. Mantle isn't even out yet. You obviously didn't get the joke. All that other BS he said in the video should've been a clue, huh? Then again you could've just read the comments.

Maxwell or Mantle makes you more excited?

Discussion in 'Gaming (Software and Graphics Cards)' started by JKnows, Nov 15, 2013.