R3d and Cloudfire, no matter which of you is more accurate, I just admire the bright minds we have on here. Great analysis both ways. Does anyone know if the Alienware 14 is going to be offered with an i7 and an 860M on Amazon with Windows 7? That could be priced at $1300, right? I could buy that, no problem. What do you guys think?

-

-

@R3d:

There are noticeable differences between the vBIOSes shown below:

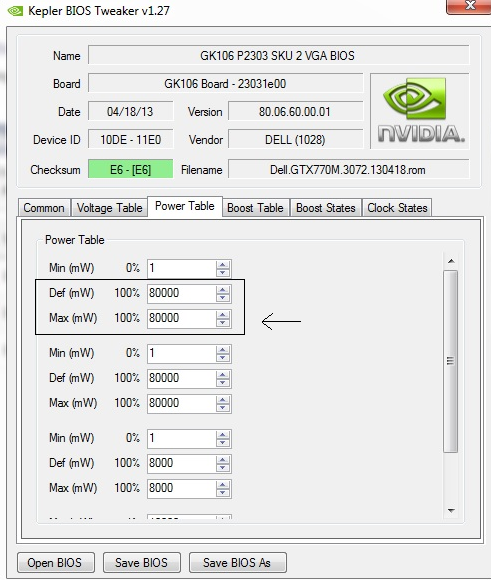

Here is GTX 770M. Notice that it only list 0% and 100%?

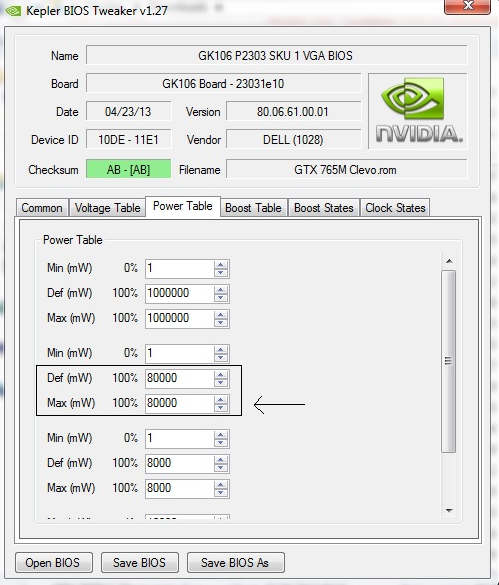

Here is Clevo GTX 765M. Notice that it too list 0% and 100%?

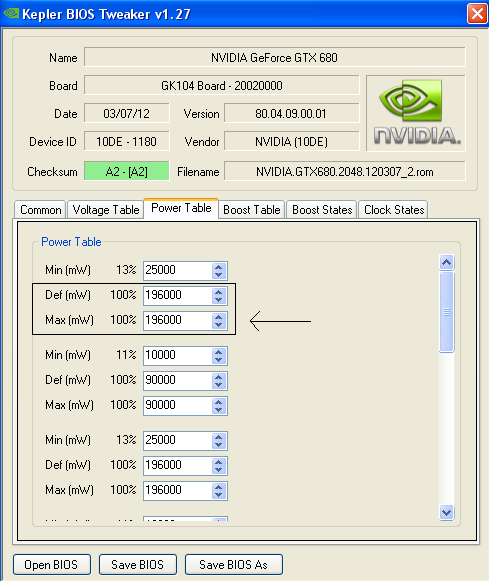

Here is GTX 680. It have 11% and 13% as minimum, but like the two above, it only list 100% as max. Which match its TDP, 195W.

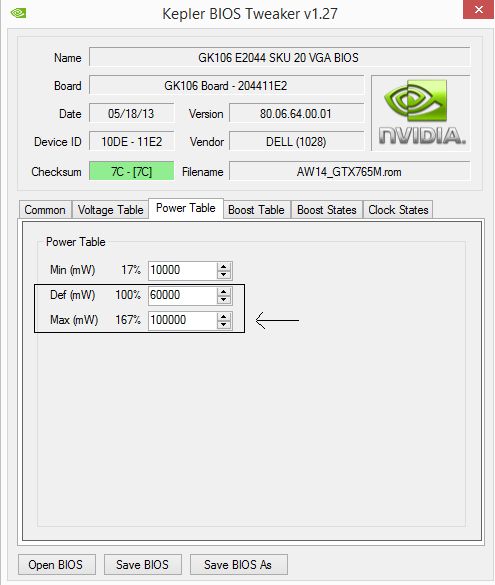

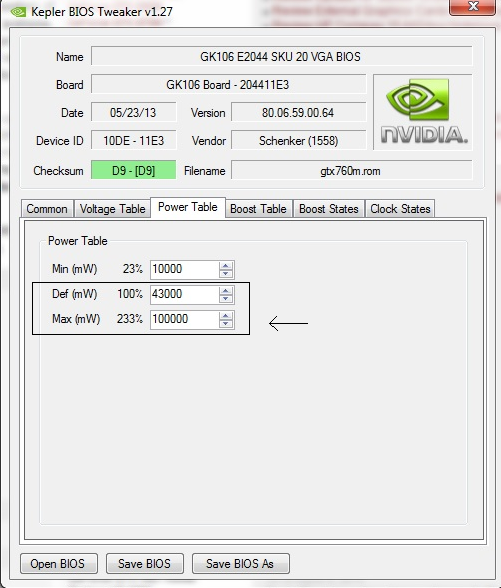

Now here comes your vBIOS dump. Unlike the above, it lists 100% but also goes all the way up to 100W as max (167%).

The same can be seen with the GTX 760M. It lists 100W as max.

From your own post which quotes Nvidia:

"Max Power Limit - The maximum value in watts that power limit can be set to. Only on supported devices from Kepler family."

This means to me that the GTX 765M in the Alienware 14 can go up to 100W, while Clevo has capped its 765M at 80W max. To me it makes sense that the normal power draw is around 60W but that it can actually go higher on some occasions. And since TDP is the worst-case scenario, it may be closer to the max power draw that was listed for the Clevo GTX 765M.

Here is a tidbit from Anandtech that supports this theory. I think it's about how the OEMs make the vBIOS. The ones with only 100% as max are closer to the real TDP, while the vBIOSes that list 233% and such as max are more obscure, and there the TDP is somewhere between 100% and 233%.

http://www.anandtech.com/show/6276/nvidia-geforce-gtx-660-review-gk106-rounds-out-the-kepler-family

"2. I can very well back up the "magical binning and mature 28nm process". But don't listen to me, listen to Nvidia. From Anandtech: "

You are quoting an Anandtech article that describes Maxwell on 28nm, not Kepler. You are nuts if you think they can lower the TDP with 2x as many cores on the same architecture. That is something they can barely do with a new architecture.

Nvidia managed to shrink the TDP from 75W to 50W with GT 640. But if you look at the details, one is running GK107 and the other one is running GK208. Two entirely different chips, different ROP counts.

The GTX 765M is still running the old GK106 chip you find in the GTX 650 Ti. There is no freaking way they are able to cut the TDP in half (from 110W down to 55W) when the GTX 765M is running clocks close to the GTX 650 Ti.

gust0007750, ThePerfectStorm and hailgod like this. -

If you examine the unlocked 780M vBIOS with KBT, you'll find values of 110W and 161W (roughly, for the latter value) corresponding to Def power and Max power (146%). Apparently that percentage for max power comes from dividing it by the default power.
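To make that percentage arithmetic concrete, here is a minimal sketch. The function name is made up for illustration and is not part of KBT or Nvidia Inspector; it only assumes the percentage is max power divided by default power, rounded to a whole number.

```python
def max_power_percent(default_w: float, max_w: float) -> int:
    """Max power limit expressed as a percentage of the default power limit.

    Hypothetical helper: assumes the tool simply divides max by default
    and rounds to the nearest whole percent.
    """
    return round(max_w / default_w * 100)


# Unlocked 780M vBIOS values quoted in this post: 110W default, ~161W max
print(max_power_percent(110, 161))  # -> 146

# 760M vBIOS discussed in this thread: 43W default, 100W max
print(max_power_percent(43, 100))   # -> 233
```

The same division reproduces the percentages that show up elsewhere in the thread, which suggests they all come from the same two table entries.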

In any case, if you used that vBIOS and opened up nvInspector, you'll find that the power limit is initially at 100% but is adjustable to 146%. However without manually setting the power limit to above 100%, the card will not go to 146% by itself. Now this is with a boost-disabled vBIOS and maybe with boost the card can autonomously raise the power limit. However it is unlikely that a 43W 760M would allow itself to ever go up to 100W. To me that 100W in the 760M vBIOS looks like what we've been doing in our XTU--setting the CPU power to something like 999W. They can leave arbitrarily large values in that field if they know that given the voltage and core clock limits there is no danger of power draw being an issue.

Robbo99999 likes this. -

Yeah, I don't think the GTX 760M will go up to 100W at all. But I also think it's much higher than the 43W which is listed as 100%. To me it seems that one OEM put a power cap of 100W as max, which is more like the TDP, while another OEM just slaps on a 1000W cap as max and allows the card to do whatever it wants unhindered.

But of course that is self-regulated based on the number of cores and the clocks. And the user can't fry the cards that have 1000W as max with unhindered overclocking either, because things like increased voltage are needed, plus the overclock is locked at +135MHz on the cards. Not to mention the temperature sensor on the cards.

Or am I in deep water here? To me it makes sense.

Robbo99999 and hailgod like this. -

First of all, power target and power limit are two different things. You are conflating the two.

Power target is what GPU boost will boost up to. If a card is running at 150W and the power target is set to 170W, it will boost the clocks such that it takes up the extra 20W of headroom if it can. With GPU Boost 2.0, temperature is also taken into account and it may go even higher. Power limit is the maximum power that the GPU will reach before it starts throttling, as defined by the Nvidia documentation I linked. A corollary of this is that the TDP (if defined as the maximum power that a card can use) will never be higher than the power limit. Sometimes power limit and power target will have different values (e.g. the GTX 680 as you linked) and sometimes they will have the same values (e.g. the Titan).
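As a rough illustration of the distinction, here is a toy model. Both function names are hypothetical, and this is not how the driver actually implements GPU Boost; it only encodes the two rules described above.

```python
def boost_headroom_w(draw_w: float, power_target_w: float) -> float:
    """Watts the boost logic may still use before reaching the power target."""
    return max(0.0, power_target_w - draw_w)


def must_throttle(draw_w: float, power_limit_w: float) -> bool:
    """True once the card's draw goes past the power limit."""
    return draw_w > power_limit_w


# The example from the post: running at 150W with a 170W power target
print(boost_headroom_w(150, 170))  # -> 20.0

# A card drawing 180W against a 170W power limit has to back off
print(must_throttle(180, 170))     # -> True
```

In this simplified picture the target is something boost climbs toward, while the limit is a ceiling that forces clocks down.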

Second of all, the max power shown in Kepler VBIOS tweaker is not something the card will reach by itself. It is simply the highest amount that the user can set the power limit to. So having a 200% max or a 0% max makes no difference. The only thing that matters for determining TDP is the default power. One example of a way the power settings can be adjusted is through Nvidia Inspector.

My OC'd 770m has the same power limit and power target, so adjusting the power target at the driver level may also adjust the power limit...

Power target at 100%:

The power target/limit percents are read correctly by Nvidia Inspector. Note that the GPU isn't running at full boost since it's near the TDP limit.

Power target at 50%:

Yep, the power usage only maxes out at around 50% now. Which is to say, the card now does not exceed 50% of the max TDP, i.e. 40W. This is equivalent to flashing my card with a VBIOS that has a 40W power limit and is proof that you can artificially limit the TDP of a card by adjusting the power settings.
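The numbers in that test can be sketched as follows. The 80W default is an assumption inferred from the "50% = 40W" statement above, and the helper name is made up for illustration.

```python
def power_cap_w(default_limit_w: float, target_percent: float) -> float:
    """Effective power cap in watts for a given power-target percentage."""
    return default_limit_w * target_percent / 100


# Assumed 80W default limit (implied by 50% -> 40W in the post)
print(power_cap_w(80, 100))  # -> 80.0
print(power_cap_w(80, 50))   # -> 40.0
```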

Which brings us to the 760m. There is only one set of settings in the power table and the power limit must always be one of the entries in the VBIOS power table since there is no way for the GPU's power management algorithm to know what the power limit is unless it's defined somewhere. Which means that the default power limit of the 760m is 43W, which in turn means that the TDP will be no higher than 43W.

I'm not nuts and lowering the TDP is exactly what they did, assuming you're even right about the 660m TDP in the first place (I have my doubts). It's clear as day in the VBIOS dump. Your assertion that it is impossible because there are too many cores at too high of a clock speed is irrelevant, because the card will throttle to meet the TDP if necessary.

It's not like 4-5 years ago when all Nvidia did was run their GPUs through stress tests like Furmark and OCCT and say, "this is the highest power use the card reached, so that is the TDP". No, now they can just say "we need a card at X TDP, so we'll just set that as the power limit and let the power management algorithm do the rest". This is why trying to determine TDP by looking at the number of cores and clock speeds is flawed.

Also, if you don't believe me about a process getting more mature over time, go look it up on the internet. My point stands that it's misleading to attribute the efficiency advantage that GM107 has over GK107 to the architecture alone, since GM107 also has a process advantage.

octiceps likes this. -

SinOfLiberty Notebook Evangelist

Does not matter now!

I have got some EXCITING news for geeks!

M$ is gonna present DX12 on March 20. Which means FORBES is right with their predictions.

Guess what equipment MS needs for the DX12 demo, besides an OS that supports it? YES, you are right, IT IS A GPU. The first ever GPU to support DX12.

From what I have gathered, it is not a low-end part and is the first sign of the 800 series! -

And Maxwell won't support DX12 since it already doesn't support 11.1 and 11.2.

Also there are already two separate threads about this. -

Ah, Maxwell doesn't support DX 11.1 and 11.2?

that's bad.. -

SinOfLiberty Notebook Evangelist

Is there an OFFICIAL word about it from Nvidia and such? Exactly, there is none.

Your posts are misleading and should not be taken into consideration.

Until then, out. -

Whatever. You must be brainwashed to think that Maxwell will support DX12, and that M$ will include DX12 in anything prior to Windows 9.

-

ThePerfectStorm Notebook Deity

octiceps, there is no need to be rude. I too believe that it is very unlikely that Maxwell will support DX12, but you seem to be a real AMD/ATI fanboy, with the way all that you say is anti-nVidia. Maybe get a balanced perspective before any more fanboy-style posting.

Cloudfire likes this. -

I am not a fanboy and I can't imagine being one as I despise them. I am simply an eternal skeptic and critic of all. But I am open-minded and try to see the big picture. That is the perspective an informed consumer should have. I have used or owned PC hardware and software from most major companies and every one of them releases both good products and bad products. That is indisputable. I am just as likely to rip on AMD as I am on Intel and Nvidia, and in fact I have done so on many occasions.

sangemaru likes this. -

ThePerfectStorm Notebook Deity

Fair enough. The position of eternal cynic is one I share as well, though not as strongly as you. I'm a bit easier to impress than you are, though. However, as you can see in my sig, I AM an AMD customer, and I think very highly of ASUS' HD 7970 DCU II TOP. So I too believe I have a balanced perspective. However, the kinds of improvements the 860M has showed so far are really, really good for someone like me who might buy a multimedia-gaming 15.6" relatively thin and light laptop in the next few months.

-

It's simple.

Either the 45W TDP of the GTX 660M is wrong, or the 43W TDP of the GTX 760M is wrong. They can't both be right; that is impossible. Or we are comparing an MXM GTX 660M with a soldered GTX 760M. If the soldered GTX 760M is 43W, then a soldered GTX 660M will be much lower.

Why has Clevo listed the GTX 765M at 80W while Dell has listed it at 60W? I'm more inclined to believe it is 70-75W and that Clevo reports the correct value.

That would also make sense because the GTX 760M is 60-65W. The vBIOS of the GTX 760M I found was from Clevo's 13" notebook and it showed 43W as 100%. Maybe some OEMs lock down the GPUs in some models?

Both that Clevo 13" with the GTX 760M and the AW14 with the GTX 765M use soldered versions, so does that remove some of the TDP? The Clevo with the GTX 765M is a 15" with the MXM version. That could explain the TDP differences. But I still don't understand what exactly it is that makes the soldered versions have a lower TDP.

It also means that the TDP of the GTX 860M would be very low, since it's speculated to only come soldered.

In the end, it all boils down to Maxwell being much more efficient than any Kepler chip anyway, which was my point in this discussion. -

I'm very sure the GTX 760M has a way higher TDP than 43W. It has 768 CUDA cores. The GT 755M is the rebrand of the 660M and has a TDP of less than 50W. That means that the 660M likely has a 45W TDP.

Cloudfire likes this. -

How can Maxwell not support 11.1? I believe that 20nm Maxwell has a high chance of supporting DX12.

-

Yeah, I think Nvidia will support DX12 with Maxwell. It's not like they can't support DX12 just because they don't support DX11.x, like someone here cries about.

SinOfLiberty shared some news that Microsoft will showcase DX12 running on Nvidia Maxwell.

Great news Liberty

Is this the first sneak peek of 20nm Maxwell? That would be awesome

hailgod and SinOfLiberty like this. -

14" MSI barebone with GTX 850M and i5 processor listed.

Only $689. Pretty cheap.

MSI NB Barebone 937-149231-028 14.0 HSW Ci7 i5 GTX850M W8.1 6Cell Black RTL. MSI 937-149231-028 -

SinOfLiberty Notebook Evangelist

I know this will sound strange but, apart from Nvidia taking the AMD route and releasing GPUs from bottom to top, there is something else!

After the 750 Ti, next up is a mid-range Maxwell GPU, the successor of the GTX 760/670.

This card (let's say 860) can still be made on 28nm, with the 860 Ti and later cards kicking off 20nm.

The 790 plays a big role here as well. Pushing 20nm prior to the 790 seems very unlikely, and the production is just not there yet.

GTX 750Ti, as talked about in OC3D review is clearly a cut down card, from something bigger and stronger and still 28nm. It all starts to make more and more sense. Anyway, skipping the background story, here is what is gonna happen:

March-860(28nm)

July/Late June- 870/880-90% certain (20nm).- 880 will almost certainly be a big die, GK 200. Unlike it was with 680 being on gk 104 instead of GK 110. Not sure of 870 as of yet.

Later: 860Ti(20nm)

Good news for everyone, D&M! -

According to a driver leak, the next chips will be GM206 and GM204. So if mid-range Maxwells are coming, they should be 2nd generation Maxwell (GM206), which is said to be on 20nm, but that is not confirmed.

It would be cool to see a GTX 870MX releasing in April/May

-

Cloudfire, forgive this silly question, but if I buy a laptop with a 1366x768 screen and connect it to a 1080p monitor, would I see things in 1080p, or does my laptop screen have to be 1080p?

-

No, you don't have to get a 1080p laptop to use a high-res external monitor with it.

-

So I can run an external 1080p TV/monitor at 1080p even though my laptop screen is 768p?

-

You can definitely do it at 1080p if you are only using your TV. As for running your laptop at 768p while having the TV at 1080p, that is also something I would like to know.

-

Since you clearly didn't read my post, I'll say it again.

All Kepler chips will stay under a predefined power limit. The power limit defined in the VBIOS you posted is 43W. Ergo, a 760m which uses the VBIOS you posted will keep power usage under 43W. Therefore the max power usage, i.e. TDP, will be no higher than 43W. I don't know how much more clear it can be.

You keep bringing up the 660m, which is a complete red herring. It doesn't matter what the TDP of the 660m is. Nvidia could release a 40W 780ti if they wanted to, the card will just throttle to meet the TDP limit. This is also what my 770m did when I halved the TDP to 40W and this is what the 760m will do if necessary to reach 43W. What it won't do is magically use more power just because it "should" when compared to another card.
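Here is a deliberately simplified sketch of what "throttle to meet the limit" means. Everything in it is an assumption made for illustration: the function name, the 13MHz step, and especially the linear watts-per-MHz power model (real power draw also depends on voltage, load and temperature).

```python
def throttle_to_limit(clock_mhz: int, watts_per_mhz: float,
                      power_limit_w: float, step_mhz: int = 13) -> int:
    """Lower the clock in fixed steps until the estimated draw fits the limit.

    Toy model only: estimated draw = clock_mhz * watts_per_mhz.
    """
    while clock_mhz > 0 and clock_mhz * watts_per_mhz > power_limit_w:
        clock_mhz -= step_mhz
    return max(clock_mhz, 0)


# A hypothetical card that would draw ~80W at 800MHz, capped at 40W:
capped = throttle_to_limit(800, 0.1, 40)
print(capped, "MHz, about", round(capped * 0.1, 1), "W")

# A card already under its limit is left alone:
print(throttle_to_limit(300, 0.1, 40))
```

The point of the sketch is that the core count and stock clocks never appear in the stopping condition. Only the limit does, so the clock is whatever fits under it.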

I'll reiterate what I said in my last post: It's not like 4-5 years ago when all Nvidia did was run their GPUs through stress tests like Furmark and OCCT and say, "the highest power use the card reached is X, so that is the TDP". No, now they can just say "we need a card at X TDP, so we'll just set that as the power limit and let the power management algorithm do the rest".

octiceps likes this. -

R3d, could you please explain TDP to me in simple words and tell me what unit it is measured in (watts?), whether I need a card with a higher or lower one, and how come it's better to have a 4700 with 47W than an Ivy Bridge with 45W?

-

Drat, I was hoping that card would come out in the early summer months...

-

Please ask offtopic questions in private messages or create separate topics.

It is more and more suspicious I would say.

@Cloudfire, I am waiting for the newer laptops with excitement. Perhaps the new GE70 will be my new laptop. Just not sure how well a low-end 4700m will let Maxwell show itself. -

Low-end 4700m? Did I miss something? Since when did an i7 4700MQ become low end?

sasuke256 likes this.

-

Considering that there are some misleading TDPs floating around, I think that defining what TDP actually is is relevant.

There are a bunch of definitions of TDP, which stands for Thermal Design Power, and all of them are measured in watts.

The literal definition is that it is the maximum amount of heat that a processor will generate. This is typically used by engineers who design cooling systems, so they know how much heat the cooling system will have to be able to handle. Note that the maximum heat dissipated by a processor cannot exceed the amount of power that it is using, since that would imply that it is creating energy, which would be impossible.

The Nvidia, AMD, and Intel definitions are different:

- Intel defines TDP as "the maximum power a processor can draw for a thermally significant period while running commercially useful software". Which is pretty much saying it's the highest amount of power a processor will use most of the time while running normal software (i.e. not power viruses like Linpack or IntelBurnTest, etc.). And indeed Intel processors will sometimes exceed TDP when turbo boost is activated, but only if it detects that it has the thermal headroom.

- AMD defines it as the "maximum power for the processor", which probably means the maximum power that the processor will use. Not sure if this is the same for the GPUs, though.

- Couldn't find an official post, but according to bit-tech, Nvidia defines TDP as "a measure of maximum power draw over time in real world applications" which seems like a mixture of AMD's and Intel's definitions and essentially is the maximum power that a chip will draw when not being used with a power virus (for GPUs, an example would be furmark).

One thing to note though, is that Nvidia seems to also include board power in their definition of TDP, which means a GPU with better VRMs, cooling, etc. may end up with a lower TDP than another GPU using the same exact core, but with auxiliary components that are less efficient. This may also be why the TDP for MXM cards appears to be higher than for soldered parts, because the VRMs/VRAM/other board parts are included in the TDP for an MXM card while, for a soldered chip, the same components may be considered part of the motherboard and therefore not included in the TDP for the GPU.

Also, for all three companies, there is some fuzziness going around. TDPs for OEM-only cards may be strange, since they're just to satisfy the needs of an OEM and will never be seen in the market.

As for why 47W Haswell is better than 45W Ivy Bridge, you have to realize that Haswell integrates some components that would otherwise be in the chipset into the CPU (e.g. some of the VRMs). This means that, while the CPU TDP will be higher, the chipset TDP will be lower to compensate, so the overall TDP for both may be the same or even lower.

In addition, Haswell performs better than Ivy Bridge, so while Haswell may have a higher TDP, both will have very similar perf/watt for most applications.

octiceps likes this. -

It didn't. I would say the 4702 might be considered a low-end i7, but it's still not low end. That's reserved for i3 and perhaps i5.

-

Yep I agree. In the grand scheme of things 4702MQ is a high-end not low-end notebook CPU. After all it is only a few hundred MHz slower than the 4700MQ and about as fast as the previous-gen 3630QM. A low-end mobile i7 would be the ULV dual-core ones.

-

As a tech fan, not an expert, TDP troubles me. I see a GTX 680 listed at 100W, which should mean that with proper cooling the card will exceed the expected performance. With a 43W card, yes, not a lot of heat is generated, but the card is not drawing a lot of power either, so it is not reaching its full potential unless the 43W limit is raised by overclocking. Since I am not a vBIOS modder, I stick to stock settings on any card. That's why I am still debating whether to get a 680 with stock settings or an 860, if both were priced similarly.

-

Also, I'm surprised no one has figured out why he said that the 4700m is low end. It's because he got confused between the guy talking about Haswell 4700MQ and Ivy Bridge 3630QM and another guy talking about Maxwell cards. He simply got confused between GPU and CPU, and I was joking, thinking others would realise.

gust0007750 likes this. -

Huh?

This is even more confusing.

Language barrier perhaps? -

Read those 2. -

Cloudfire posted 2 links to MSI GE60 Apaches with Maxwell cards, but they seem to have identical specs except for the processor changing from 4700MQ to HQ and the HDD going from 5400 to 7200 RPM, which seems like very little reason to have 2 different models. Please post any other GE60 models if you can find them, and if you know the difference between the regular and Apache GE60s, please post that too. It's so humiliating not being able to search with my pathetic 2006 laptop with a 1.7GHz Pentium 4. My PC freezes on GenTech's page :S

Thanks -

HIDevolution MSI GE60 Apache Pro 003 15.6"

Check this GE60 with unequal RAM SO-DIMMs:

12GB Dual Channel DDR3L at 1600MHz (1 x 8GB, 1 x 4GB)

Cloudfire likes this. -

I'll leave the TDP discussion for dead. I have spent too much energy on it and we are getting nowhere. Any way you look at it and however you compare, Maxwell is much more efficient.

Period.

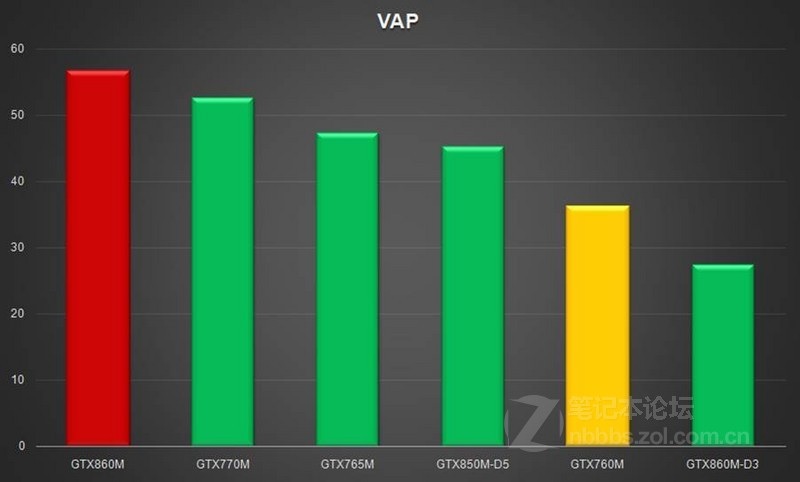

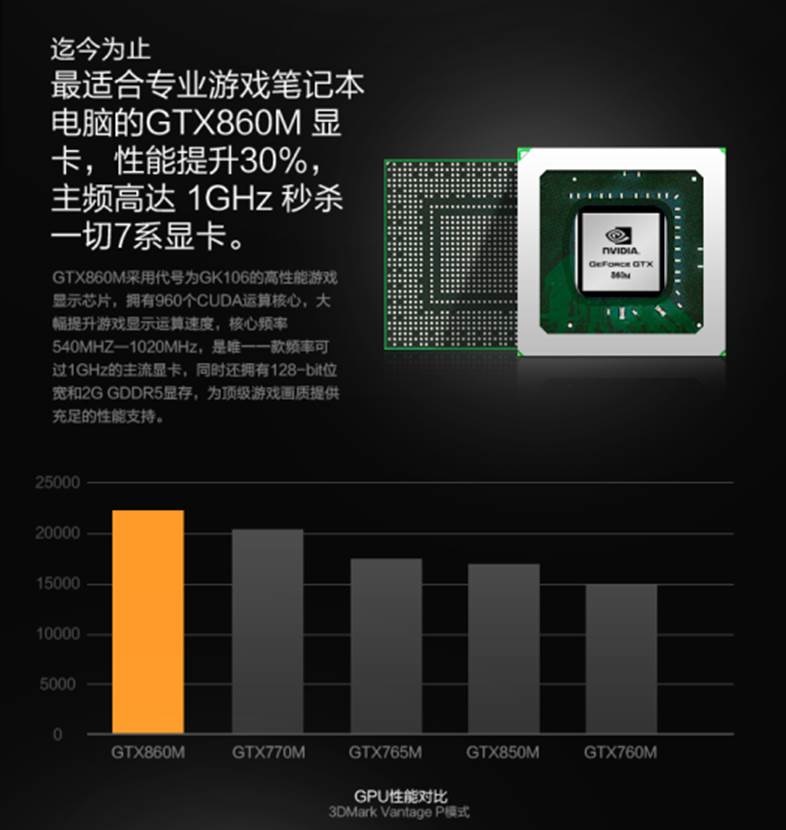

On another note, a Chinese site has put out a teaser on the GTX 860M. VAP means Average Performance.

Talk about smacking Kepler in efficiency. It beats the GTX 770M too

The Chinese site that tested the GTX 860M says it actually has a base clock of 540MHz (as shown in GPU-Z) but can boost all the way up to 1GHz.

HTWingNut, varung356 and ThePerfectStorm like this. -

![[IMG]](images/storyImages/I%27m%20With%20Stupid.jpg)

![[IMG]](images/storyImages/SjgHRxn.jpg)

I'm more confused than I should be, but that's my normal day. -

That one has the GTX 860M, yes, I think. You are not so bad at searching after all

There is also one GE60 that has the GTX 850M if you are interested. I don't think it's been listed yet though.

The GS60 Ghost also has the GTX 860M but it's not cheap

http://www.gentechpc.com/showpages.asp?pid=1351 -

Cloudfire the ones with "Pro" at the end are GTX 860 carriers?

I found 2 versions

Gaming Laptops - GenTech PC - Gaming Notebooks - Custom Laptops - Custom Notebooks - Customize System 8gb

HIDevolution MSI GE60 Apache Pro 003 15.6" 12 gb

Cloud isn't it a little odd to release 2 different models with only mechanical HDDS and ram differences..:S, Where is the SSD? is it going only to be available in the ghost version? -

Yep, I think PRO is with GTX 860M.

Here is the one with GTX 850M I think

MSI GE60 Apache-033 15.6 inch Gaming laptop with Intel Haswell i7-4700HQ - GenTech PC

And here is the one with 860M:

MSI GE60 Apache Pro-003 15.6 inch Gaming laptop with Intel Haswell i7-4700HQ - GenTech PC

I'm not fully certain about the one with the 850M though, since there's only a $50 difference. But I know the GE60 will have a version with the GTX 850M, so I think it's correct.

It's typical for resellers to offer many models where they only change the RAM and such. It's a mess -

Boost to 1GHz? Why not just make that the 3D clock? To make it work when overheating in small barebones?

-

I noticed that HIDevolution MSI GE60 Apache Pro 003 15.6" and Gaming Laptops - GenTech PC - Gaming Notebooks - Custom Laptops - Custom Notebooks - Customize System carry the same name. One of the resellers must have posted the wrong specs. Maybe there is only one Apache Pro with the 860 that has been confirmed.

None of the new laptops were the ideal that I was looking for... 3k display + 880? = 2k? The card that once throttled, and 3k is going to justify it? 870 + the new 4800 processor, is cutting down the 680 going to justify upgrading? The GE60 Pro with the same grim 8GB RAM and mechanical HDD but new Maxwell is an okay deal. Overall a disappointment... -

Another tease about beastly Maxwell.

Disregard the "GK106 - 960 core"; they used an old GPU-Z that can't recognize Maxwell when they made this slide. DUH!

Just like in the previous tease, it is beating the GTX 760M by 50%, the 765M by 30% and the GTX 770M by 10%.
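For anyone who wants those leads as relative scores, here is a quick sketch. It assumes "beating X by N%" means the 860M score is (1 + N/100) times X's score, and it normalizes the 860M to 100. The dictionary and function names are made up for illustration.

```python
# Leads of the GTX 860M over each Kepler card, as quoted from the slide
LEADS_PERCENT = {"GTX 760M": 50, "GTX 765M": 30, "GTX 770M": 10}


def relative_score(lead_percent: float, winner_score: float = 100.0) -> float:
    """Score of the slower card if the winner leads it by lead_percent."""
    return winner_score / (1 + lead_percent / 100)


for card, lead in LEADS_PERCENT.items():
    print(f"{card}: {relative_score(lead):.1f} (GTX 860M = 100)")
```

Note that a 50% lead does not put the 760M at 50: under this reading it lands at roughly two thirds of the 860M's score.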

:thumbsup:

gust0007750 and varung356 like this. -

I'm tired of reading all of these arguments about the 860M, where's the 880MX at?

Killerinstinct and ThePerfectStorm like this. -

Lol Cloud you are the best! You must be fluent in Chinese because you have been getting all this information. Just help me find an 860 with W7 or W8 + SSD in an MSI/Alienware 14/ASUS ROG. Thanks bro

-

I would take that with a grain of salt, because it is 3DMark Vantage, and if synthetics are never 100% reliable, they are even less reliable when testing new hardware with old tests.

Cloudfire likes this.

New details about Nvidia`s Maxwell

Discussion in 'Gaming (Software and Graphics Cards)' started by Cloudfire, Feb 12, 2014.

![[IMG]](images/storyImages/I'm%20With%20Stupid.jpg) I am still confused.