Here is another one of the very informative graphs

According to him, it looks like the 680MX will be miles ahead of the 680M.

The Oracle has spoken. It must be true

![[IMG]](images/storyImages/mpu_PipNews_id1351149335_1.jpg)

-

Looks promising, ha ha. I am very excited about this.

-

Whose graph is that? Nvidia's?

I don't think it's accurate... first of all, the 680M isn't more powerful than the 7970M right now;

the 7970M is (at stock) a little more powerful than the 680M.

As for the 680MX, we will see.

-

No, it's not Nvidia that made it.

You've got some reading to do, kolias:

http://forum.notebookreview.com/gam...s/692713-7970m-hotfix-reviewed-anandtech.html

I made a comparison between 680MX and 680M. Should highlight the differences

![[IMG]](images/storyImages/v9OCh.jpg)

-

Yeah, you're right, but I don't have EM, I have HM. Big difference, mate.

-

I already did the benches at the same bandwidth and tried to simulate same #cores at 719MHz by using the 680M at a higher clock to match the texture fill rate. That showed 13% improvement from 680M stock. Why does that test not work?

-

dang, if this is how good the max card of this gen is going to be, think of how powerful the 780 will be next year!

-

Try looking at the graphs and try again. If you get better than 100% GPU utilization with your HM, you have one magical 7970M. Specifically, compare with the 680M utilization, which is not affected by Optimus slowing it down, do a 2+2, and you should be able to figure it out.

I'm not going to participate in this discussion all over again. The thread is there. Read it.

If you got the same pixel and texture fillrate and the same bandwidth, that should absolutely work.

The 680MX has about 15% more GFLOPS, so that is probably around the boost we should see from it. And since it worked for you, i.e. the MXM slot had no problem feeding it enough juice at the clocks you tested, that pretty much settles the whole "OMG it will consume more than 100W, we will not see it in computers other than the iMac" discussion, doesn't it?
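For anyone who wants to check the ~15% figure, here is a rough sketch of the usual theoretical-peak calculation (2 FLOPs per core per clock). The core counts and the 720 MHz clock are assumed spec-sheet values, not numbers read off the graphs in this thread, and `peak_gflops` is just a name made up for the example.

```python
def peak_gflops(cores: int, clock_mhz: float) -> float:
    """Theoretical single-precision peak, counting one FMA (2 FLOPs) per core per clock."""
    return 2 * cores * clock_mhz / 1000.0


# Assumed spec-sheet values; they are not read off the graphs in this thread.
GTX_680M = {"cores": 1344, "clock_mhz": 720.0}
GTX_680MX = {"cores": 1536, "clock_mhz": 720.0}

gflops_680m = peak_gflops(**GTX_680M)
gflops_680mx = peak_gflops(**GTX_680MX)
uplift_pct = (gflops_680mx / gflops_680m - 1.0) * 100.0

print(f"680M:  {gflops_680m:.0f} GFLOPS")
print(f"680MX: {gflops_680mx:.0f} GFLOPS")
print(f"Uplift: {uplift_pct:.1f}%")
```

At equal clocks the uplift is simply the core-count ratio, a bit over 14%, which is in line with the roughly 15% quoted. Real game performance also depends on memory bandwidth, so treat this as a ballpark only.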

Yep, improved architecture next year with the 700M series. More performance per watt.

-

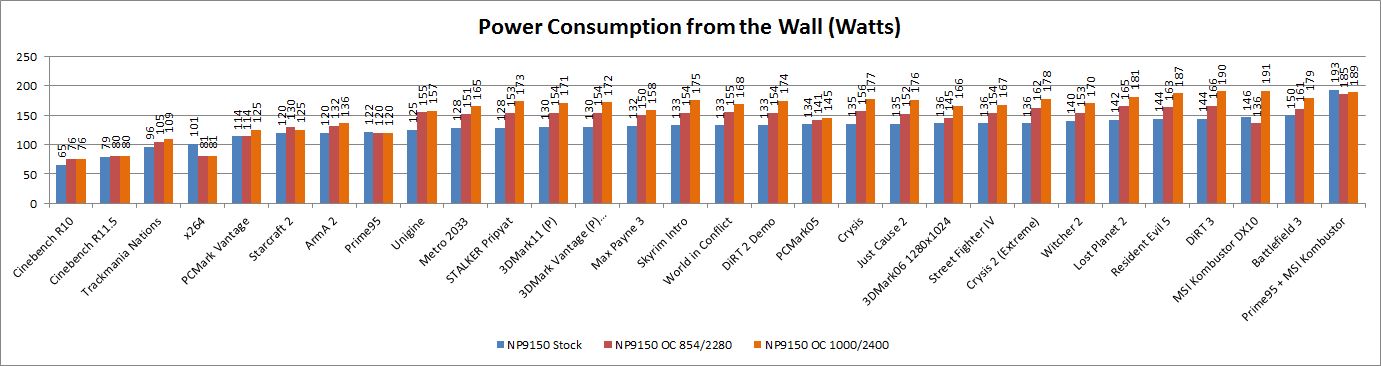

Maybe it's just me but overvolted and clocked at 1050/2400 my GTX 680m 4gb only pulls about 15 watts more from the wall over stock. It's plausible that my Kill-a-watt meter isn't entirely accurate but still. The 680MX should have plenty of headroom power wise.

-

cj_miranda23 Notebook Evangelist

I just got information from Origin PC that this model is OEM-specific to Apple, so this means it's exclusive. I don't like this. Apple is starting to use their pile of cash to lure companies into making exclusive products for them, which IMO has no benefit for Nvidia. I don't understand; maybe Apple offered them a great amount of money, but they're limiting themselves to a different type of customer. How many people will buy an iMac compared to people buying this inside a Windows-based laptop or an all-in-one system? I believe the ratio will still be 10:2 in favor of Windows-based systems. Now if they made this compatible with both Macs and Windows, the benefit for Nvidia would be much greater. What is happening here? Is there something I'm missing?

-

Probably not. If I'm not all wrong, the 680MX is an OC'd 680M... that's what Apple got. People are basically looking forward to an OC'd 680M, something they can do themselves.

although I hope I'm wrong -

If that is true, this has got to be the first time in history that a company has ever gotten an exclusive product from Nvidia.

Well, don't get me wrong, they make customized chips for big companies all the time, but this is the first time I have ever seen one released on Nvidia's own homepage, where they say it's a mobile GPU, and it's not going inside any mobile devices.

I still don't believe it -

They are wrong.

This is 100% "laptop" GPU, and i said "laptop" not just "mobile" so i know what I'm talking about

-

Maybe Apple has a "timed exclusive" deal with Nvidia. 1 month or something, then the rest follows?

Or maybe the other companies will wait for the 700M series, with its improved architecture and less heat, before going to the 1536-core model? -

nVIDIA is not retarded, so timed exclusive or just rumor exclusive

when THIS goes live, so does the GPU, as always

P.S. Yes thank you the link doesn't work yet ofc, but when it does

-

We will see

I just finished reading 75 pages (PDF file) of the MXM 3.0 revision 1.0 spec, trying to find any mention of power requirements. I found none. All that was mentioned was the card layout, where the connector goes, where the GPU, RAM and power supply go, plus a good deal about signal output, the pin layout and some mention of temperature limits.

No max power draw mentioned. So where do the resellers saying max 100W get their specifications from?

The PDF file I read is what the OEMs get from MXM-SIG, which decides the baselines for MXM -

Meaker@Sager Company Representative

Manufacturers' limits come from the power circuitry and cooling capacity.

-

There must be a reason that it's above the 100W limit this time. What we don't know is whether it'll be limited once it goes into laptops or not.

As far as I can see it's going to be 122W w/ a bandwidth of 160.0 GB/s. -

That might mean that it'll require redesigned motherboards to support the higher power draw and thus it won't be safely usable in older laptops at full power..

-

Where did you guys get 122W? I read the last 3 pages and didn't see any mention of such requirements...

-

That and/or they'll wait until Haswell releases? I'm confused

My bad, provided no source...

NVIDIA GeForce GTX 680MX | techPowerUp GPU Database

NVIDIA GeForce GTX 680MX - Notebookcheck.net Tech -

I know what a limit comes from, but MXM is a standard which most follow, and they have guidelines which are required to follow, while others are optional from one OEM to another.

My question, which you didn't answer (and nobody else has), is what limit the MXM power circuitry has. According to Wikipedia and dozens of other sites, including resellers here, that limit is 100W for MXM-B.

Where does this limit originate from? Who made it? Is it MXM-SIG? If yes, then why isn't it mentioned in the PDF file I just read? All the other specifications (heat, signal, layout etc.) are there, but nothing about power.

Then we have the whole GTX 580M deal. Already at stock it uses a pretty decent amount of watts. Yet people still manage to overclock it. Same with the 680M. It can be overclocked waaay beyond stock and still gets enough juice from the MXM slot.

It gets you thinking -

Considering the limits tend to be by TDP, it's a matter of temperature/heatsink design. A power limit would be caused by the manufacturer's choice of components, as well as any particular limit they implement themselves on the mainboard for the module.

The figure of 75/100 or any wattage shown is probably an estimate rather than a rule, since in the end any manufacturer can make their own machines with better/sufficient cooling. Otherwise every machine would perform to a certain standard with all the same temps, power consumption etc.

In the end, it is the power dissipated that you must deal with. -

Either way, that means a 22% improvement in TDP efficiency for the cooling system. That would likely require a new heatsink fan. I still think it will be too much for the 15" Clevo's to power since an OC'd/OV'd 680m easily pushes the limits of the 180W brick.
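The 22% here is just the quoted 122W against the 100W mark the coolers are said to be built for. A quick sketch of that arithmetic, plus what a 180W brick would have left over; the 122W figure is the unconfirmed one quoted in this thread, and `percent_over` is a made-up helper name.

```python
def percent_over(value_w: float, baseline_w: float) -> float:
    """How far value_w sits above baseline_w, in percent."""
    return (value_w - baseline_w) / baseline_w * 100.0


QUOTED_TDP_W = 122.0  # unconfirmed figure quoted in this thread
DESIGN_TDP_W = 100.0  # TDP mark the thread assumes current coolers target
BRICK_W = 180.0       # power brick rating mentioned for the 15" Clevo

extra_heat_pct = percent_over(QUOTED_TDP_W, DESIGN_TDP_W)
left_for_system_w = BRICK_W - QUOTED_TDP_W

print(f"Extra heat to dissipate: {extra_heat_pct:.0f}%")
print(f"Brick budget left for CPU, screen, etc.: {left_for_system_w:.0f} W")
```

This ignores conversion losses and treats TDP as if it were power draw, which it strictly is not, so it only shows the rough size of the gap.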

-

But you can find the power draw from this right?

So you are saying that there isn't any power limit with MXM, as long as you have an extremely good cooling system that can deal with the power dissipated? There were some temperature limits in the specifications, but as long as you avoid going that high with a good cooling system, you are good to go? That would explain why people could overclock the 580M pretty high. They were all using a dual fan system (Alienware, Clevo).

Wikipedia says "max power" btw. I reckon they talk about power draw, and not heat.

So confusing. -

TDP isn't power consumed but heat dissipation energy. In any case I'm sure power supplied through MXM slot has some sort of limit.

-

Meaker@Sager Company Representative

Yes but it's likely along the same lines that a "75W" 6 pin pci express could probably deliver 300W without failing, it just would not be optimal.

-

For MXM 3.0, here's the max power:

The max is 20V @ 10 amps. That's a max of 200 watts for the 3.0 spec.

(volts * amps = watts)

So: max voltage 20, max amps 10, max watts 200.

TDP is not the power consumed. (If it were, then MXM would have a TDP of 200, and there would be no discussion.)

TDP is a representation of what the cooling system needs to deal with.

Also note that within the 3.0 spec, there is no limit on TDP described within the spec itself. (Remember, TDP, as defined for the MXM 3.0 standard, is related to the cooling system, NOT the power consumption.) HOWEVER, the thermal environment is discussed in other documents (including a dedicated one).

If you have a mobo that supports the 3.0 spec [read: MXM 3.0 certified], you will be able to use a TDP 125 card, or a TDP 23904823472 card (granted, half the world would be vaporized), so long as it meets the MXM 3.0 specs.

The plug on any MXM 3.0 certified system WILL support the voltage (it's part of the spec; head to MXM's site to read more).

The cooling system is another subject. All of the 'current' high-end systems were designed around the 100W TDP mark, so a 125W card would create some nasty heat issues (an extra 25 watts of heat is A LOT).

The 100W TDP mark is the assumed max TDP for a 200W card. It's important to note that the spec DOES give liberty in regards to extra power connections, so it IS possible that it uses an extra connector somewhere/somehow (it could be an actual plug, a wire, or just a custom plug in the Apple computer, etc.).

It's extremely important to note, that different types of components and manufacturers use various definitions of TDP, erroneously, and that just compounds the problem of people not understanding what it means.

As a side note for the peeps who are confused about 'but physical action must take place to use energy [electricity]':

Modern MP3 players have no moving parts (flash memory etc.). According to that statement, they would never run out of battery power and would require only a single charge.

This is simply not true. Power IS consumed, even in a digital process (i.e. transistors consume power, and that's 7,100,000,000 transistors on a GK110 chip), and it is also lost due to MANY factors.

Here's a simpler explanation to follow:

Watts, heat and Light: Measuring the Heat Output of Different Lamps

JAE develops MM70 series - MXM3.0 Compatible Notebook PC Internal Graphics Board Connector with Industry's Highest Class of Contact Stability

[info on a plug from a supplier, note the specs for it]

If by 'not be optimal' you mean 'would burst into flames before it made it to 200 watts', then you would be correct.

It's a 12V (x3 = 36V) line, with 2.08 amps max. You could very likely push it to 13 volts (x3 = 39) and maybe 2.15 amps, but beyond that it would simply burn up from heat.

Heat in such applications doesn't slowly ramp up, it's near instant.

NBL!

-

How long do we need to wait for this? ._.

-

No one knows.

We aren't even sure if it's Mac exclusive or not, let alone power requirement capability, etc. -

Meaker@Sager Company Representative

150W 8-pin connectors are the same gauge yet have an extra 2 ground pins that do not help carry supply. Cables and connectors are usually vastly over-specced to reduce voltage drop and losses.

I would ask that you research such topics before posting advice on them.

A cable engineer who specced to within 20% of death (in flames no less) would not last an hour at a proper company. -

No need for the childish posturing or attempts at insults, bud.

I suppose I should have been clearer and read your post in more detail. You apparently were referring to just a 2 foot stretch of 18 AWG wire (average quality for a PSU, good for 90C or so), while I was thinking of the unit as a whole (PSU etc.) attached to said wire.

No worries. -

Input Voltages

MXM version 3.0 requires the following voltages:

* 7-20V (battery) up to 10A (depending on system capability and charge status)
* 5V 2.5A
* 3.3V 1A

It says 7-20V "up to" 10A. You are assuming that it can run 10A @ 20V, which is likely not the case. -
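To put numbers on the "up to" wording, here is a small sketch that multiplies out the three rails quoted from the spec above (volts * amps = watts). The rail values are the ones listed in this thread; the `RAILS` table and `watts` helper are only illustrative names.

```python
def watts(volts: float, amps: float) -> float:
    """Electrical power in watts for a given voltage and current."""
    return volts * amps


# (rail name, min volts, max volts, max amps), as quoted in the thread
RAILS = [
    ("battery", 7.0, 20.0, 10.0),
    ("5V", 5.0, 5.0, 2.5),
    ("3.3V", 3.3, 3.3, 1.0),
]

for name, v_min, v_max, a_max in RAILS:
    low = watts(v_min, a_max)
    high = watts(v_max, a_max)
    print(f"{name}: {low:.1f} W to {high:.1f} W at up to {a_max} A")

battery_min_w = watts(7.0, 10.0)
battery_max_w = watts(20.0, 10.0)
```

So the main rail alone spans anything from 70W to 200W at the full 10A, depending on where in the 7-20V range a given system sits, and less again if the system cannot supply the full 10A.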

According to NBR, the 680MX consumes 122w. o.o Speculation or legit?

NVIDIA GeForce GTX 680MX - Notebookcheck.net Tech -

Meaker@Sager Company Representative

A quality PSU would likely have a single rail and be able to deliver most of that power over the cable unless there were safety features built in as it's an abnormal loading condition.

So I still don't understand what you are going on about.

I was not being childish, just simply pointing out your lack of clarity and incorrect information which could lead to me having to correct several people down the line who read your post. It has happened before so I try to step in early to prevent having to repeat myself several times.

HTWingnut, it's saying that if the designers fix it at 20V and put in power circuitry able to deliver 10A at the 20V, it's still within spec.

I believe they put a range in to allow for dropping battery voltages.

They are saying that the connector if implemented properly should take 20V at 10amps without issue.

However designing it to only deliver 5 amps, is still in spec too, just you now can only use cards up to that power level.

So the apple computer could have a 20V supply capable of delivering 6.5A, use an MXM connector and a standard MXM layout and expect it to work. -
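As a sanity check on the 5A and 6.5A examples, a minimal sketch comparing what a 20V supply could deliver against the 122W figure quoted earlier in the thread. That figure is unconfirmed, the function names are made up, and conversion losses and the smaller 5V/3.3V rails are ignored.

```python
def supply_limit_w(volts: float, amps: float) -> float:
    """Maximum power a supply rail can deliver, ignoring losses."""
    return volts * amps


def can_power(card_w: float, volts: float, amps: float) -> bool:
    """True if the rail's limit covers the card's power draw."""
    return card_w <= supply_limit_w(volts, amps)


CARD_W = 122.0  # unconfirmed figure quoted earlier in the thread

for amps in (5.0, 6.5, 10.0):
    limit = supply_limit_w(20.0, amps)
    verdict = "enough" if can_power(CARD_W, 20.0, amps) else "not enough"
    print(f"20 V x {amps} A = {limit:.0f} W -> {verdict} for a {CARD_W:.0f} W card")
```

Interestingly, 20V at 5A works out to exactly 100W, which lines up with the limit resellers keep quoting, while 6.5A gives 130W and would cover a 122W card.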

Cable ampacity has to be calculated not only from the wire gauge, but also from its coating and the materials in general. The limits typically involve physical limitations due to temperature, voltage drop etc.

Regardless of how you design your board, cable/wiring already has limits of its own. A small caliber like 18 AWG could probably take around 7 amps constantly without much issue.

Back on topic: for now it doesn't matter if it's exclusive to Apple. The GPU won't be anything that laptops can't handle with a proper heatsink upgrade etc. Plus both the 680M and the MX will have a similar performance ceiling, considering the physical thermal limits of our laptops. So regardless of which version you get, you will obtain the same potential performance, just a different stock one. -

Meaker@Sager Company Representative

Except the 680mx has full voltage memory which could reach 1500-1600mhz which is a huge bandwidth advantage.
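To show how big that bandwidth gap could be, here is a rough sketch of the standard GDDR5 bandwidth formula. It assumes a 256-bit bus on both cards and stock memory clocks of 900 MHz (680M) and 1250 MHz (680MX); those are assumed spec-sheet values rather than anything stated in this thread, and the function name is made up.

```python
def gddr5_bandwidth_gbs(mem_clock_mhz: float, bus_width_bits: int = 256) -> float:
    """Peak bandwidth in GB/s; GDDR5 moves 4 bits per pin per memory clock."""
    transfers_per_sec_millions = mem_clock_mhz * 4
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_sec_millions * bytes_per_transfer / 1000.0


# Assumed stock clocks, plus the 1500-1600 MHz overclock range mentioned above.
clocks_mhz = {
    "680M stock (assumed 900 MHz)": 900.0,
    "680MX stock (assumed 1250 MHz)": 1250.0,
    "680MX at 1500 MHz": 1500.0,
    "680MX at 1600 MHz": 1600.0,
}

for label, clock in clocks_mhz.items():
    print(f"{label}: {gddr5_bandwidth_gbs(clock):.1f} GB/s")
```

With these assumptions the stock 680MX lands on the 160 GB/s quoted earlier in the thread, and a 1500-1600 MHz memory overclock would push it to roughly 190-205 GB/s.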

-

Has anyone spoken with Dell/Alienware reps about this GPU and its expected availability date? I was going to, but I thought I'd ask here first.

-

No one has any idea..... you can ask for the fun of it though

-

BOOM

![[IMG]](images/storyImages/HJzW7.jpg)

-

Interesting.

-

I have to hold back again /:

-

Yeah and Dell support also said there would be a 680m in the M14x too.

-

cj_miranda23 Notebook Evangelist

Sir, this is a st....pid question, but when they stated that this will be available for computers, they're talking about laptops, right?

Btw, I guess Origin PC got wrong information about the availability of the 680MX on other systems. I can't believe a company as big as them would not have correct information on this matter, or maybe they're just lying in order for their 680M stock to sell. -

That might be true, as the new iMac with the 680MX is scheduled to be released in December as well. Good news! Thanks.

-

Meaker@Sager Company Representative

This is unlikely to get a non-limited bios and +135mhz before starting to clock bounce.

-

SOURCE: | Description | GeForce

Seems like the 680MX isn't as strong as I would have imagined nVIDIA would have advertised it... only 60% faster than the 580M? huh and ~30% faster than the 7970M... ok I rest my case -

Wait I don't get it. Was that sarcasm?

-

Sweet! Just in time for my birthday...

Thanks for checking, bro.

Nvidia GTX 680MX?

Discussion in 'Gaming (Software and Graphics Cards)' started by Tyranids, Oct 23, 2012.