I blindly believe that it will fit into Clevo P170EM without a modified BIOS...

Santa, please, please, please!

-

-

Nvidia and AMD 'smash' each other all the time (if you can call it that).

It's a marketing game at best.

There is no 'Nvidia hate' here... at least, I haven't observed any.

My comment simply reflects the reports released so far, which indicate that AMD will have GPUs with HBM out in early 2015... while Nvidia's stacked-memory GPUs have been reported not to arrive until 2016.

Also, if various reports on HBM capabilities/performance pan out as accurate (and thus far, AMD has been more or less on the mark with their claims for their products), Nvidia would struggle to even match, let alone surpass, that kind of performance even with a fully unlocked Maxwell until it releases its own stacked memory - and even then, it's a question of implementation (though, to be entirely fair here... it's also a question of how well AMD will implement HBM and how this will translate to performance gains - though according to this: AMD 20nm R9 390X Features HBM, 9X Faster Than GDDR5 - it's encouraging). -

3 questions:

Why exactly would Apple have M295X for itself while Windows based laptops would be left out?

How do 'high power requirements' come into play here?

Considering how Apple is not exactly known for its cooling efficiencies... why would they integrate a 'power hungry' mobile gpu into their system to begin with?

It just doesn't track well that AMD would release a new mobile chip which seemingly rivals current high end Maxwell in performance and is far more power hungry (it is accurate that we have no confirmation it is 100W for this chip, and yet we have no data indicating otherwise either), and then limit it to Apple based products. -

If AMD launches the 20nm M390X sometime next year, it will probably be based on the desktop 380X.

With up to 1024bit 8GB HBM, 4.5x faster than GDDR5.
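One plausible way to arrive at a figure like "4.5x" is to compare peak bandwidth per memory device: one first-generation HBM stack with a 1024-bit interface versus one 32-bit GDDR5 chip. This sketch assumes 1 Gbps per pin for HBM and 7 Gbps per pin for GDDR5 (typical figures, not confirmed specs for any M390X); peak bandwidth is bus width × per-pin data rate ÷ 8.

```python
def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s = bus width (bits) * per-pin rate (Gbps) / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8

# Assumed figures: one 1024-bit HBM stack at 1 Gbps/pin,
# one 32-bit GDDR5 chip at 7 Gbps/pin.
hbm_stack = peak_bandwidth_gbs(1024, 1.0)   # 128 GB/s
gddr5_chip = peak_bandwidth_gbs(32, 7.0)    # 28 GB/s

ratio = hbm_stack / gddr5_chip
print(f"HBM stack: {hbm_stack:.0f} GB/s, GDDR5 chip: {gddr5_chip:.0f} GB/s, ratio: {ratio:.2f}x")
```

This is a per-device comparison; a whole card's bandwidth depends on how many stacks or chips share the bus, so the card-level gain could be quite different.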

-

Meaker@Sager Company Representative

AMD worked with them to get a 5K panel working over current DisplayPort tech on a single link. That's why it's in this machine.
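A rough pixel-rate estimate shows why 5K over a single link needed special work. This sketch assumes 5120×2880 at 60Hz with 24 bits per pixel, ignores blanking intervals (which would only raise the requirement), and compares against DisplayPort 1.2's four HBR2 lanes at 5.4 Gbps each with 8b/10b encoding, i.e. about 17.28 Gbps usable.

```python
# Assumed display parameters: 5K panel, 60Hz, 8 bits per color channel
width, height, refresh_hz, bits_per_pixel = 5120, 2880, 60, 24

# Raw pixel data rate in Gbps, ignoring blanking intervals
required_gbps = width * height * refresh_hz * bits_per_pixel / 1e9

# DisplayPort 1.2: 4 lanes x 5.4 Gbps (HBR2); 8b/10b encoding leaves 80% for data
dp12_usable_gbps = 4 * 5.4 * 0.8

print(f"required: {required_gbps:.2f} Gbps, DP 1.2 usable: {dp12_usable_gbps:.2f} Gbps")
print("fits in one standard link:", required_gbps <= dp12_usable_gbps)
```

By this estimate 5K at 60Hz needs roughly 21.2 Gbps, more than a standard DP 1.2 link carries, which is consistent with it needing custom work; the sketch says nothing about how AMD and Apple actually solved it.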

-

1. Why exactly would Apple have the majority of TSMC's 20nm yields?

2. Mind explaining to me how you can fit a 110W+ card inside a notebook?

3. Why would Apple want to pay $800 for a 980M instead of perhaps half the price for an M295X, when the latter supports 5K and the former doesn't? -

I have two things to say: 780M and 880M.

-

IIRC the 680MX was an Apple exclusive, so it's not entirely unfathomable if AMD also made an Apple exclusive GPU.

As for 110W+ cards inside laptops, we already have those; they're called the 780M and 880M. -

I don't know, maybe the same reason why they make GPUs for consoles?

-

To bring 20nm and stacked DRAM to market before Nvidia would be a big comeback for the red team.

-

Even if it is Apple exclusive, can you blame them? As I said before, R&D costs silly money, and what better way to get some than to secure some exclusive contracts? At the end of the day it's not like you were going to buy AMD anyway, and this is precisely the reason: they can't count on a huge base with deep pockets, like nVIDIA did, does and will. It almost seems like nothing nVIDIA does could push most of their buyers in the other direction.

-

Ain't buying until 20nm Maxwell, though. It's silly paying for the same core configuration when I can just OC, which I rarely do on a GPU anyway.

-

There's no way you're OCing a 780M or 880M to match a single 980M in any capacity. It *IS* a gimped card compared to what nVidia is CAPABLE of releasing, don't mistake this, but it is NOT a "non-upgrade" like the 880M was to many (assuming it worked).

-

He means his 7970M CrossFire (better than or similar to a single 980M anyway). Yeah, if I had a Kepler SLI or GCN CrossFire machine, I would just keep it until 20nm; otherwise it's a pure waste of money, considering the upcoming games will run just fine on your machine.

-

I still think that this GPU is exclusive to Apple, because AMD hasn't announced anything; instead an OEM was the first to talk about it, which is unheard of.

Secondly, unless it's a new architecture, we are looking at power and cooling requirements that notebooks can't meet but the iMac can, since it uses desktop parts, including a power supply that exceeds what MXM can deliver.

Third, no other OEM has announced the chip. There are no leaks anywhere except ozone3d.net, which may have been an iMac running the chip.

That website quoting 100W probably made that figure up. I think the R9 M390X is the only AMD GPU capable of going inside a notebook, and something tells me it won't come out until Q1 next year. -

That the GTX 980M will most likely beat his TWO 7970Ms in a lot of games while putting out so much less heat speaks volumes about how far ahead Nvidia is compared to AMD. It's like watching Intel and AMD with CPUs.

-

But Cloud, would you drop 3-4k on a computer every single year? Forget about the kids' college in that case.

I think 1 year stellar 1 year ok is a good plan!

-

I'm more in the $2-3k every year, but I sell my notebooks and that covers the majority of the cost.

Yes, I do lose some money, but I'm willing to pay, say, $500 per year if it means I get a fresh new notebook with the newest hardware.

Nothing wrong with sticking to the same notebook for 2-3 years either. We all are different.

7970m CF certainly isn't a weak setup -

If that's the case, though, compare 2x GPU to 2x GPU... the 980M SLI will make his 7970M CrossFire look like a joke. A single 980M is indeed stronger than two stock 7970Ms, though. OC'd, the 7970Ms may win, but then you could OC a 980M too.

-

what?! 7970m can last longer than a year?!!!

It's not even the power requirements; Apple probably just bought all of AMD's supply, like they did with TSMC's 20nm chips. So far no one can compete with how much supply Apple can buy, so chances are that even if it can fit in a notebook, there won't be enough to go around. So rather than deal with the low-volume supply of a card that's far more troublesome than the 900 series, OEMs would just ditch it. -

COOL, and now add to comparison:

- year of manufacture

- current market price

- Results in games and benchmarks

And especially the price/performance ratio between 7970M CF and a single 980M.

Let's say 7970M CF will get 140fps and 980M SLI will get 300fps; what will be the noticeable difference for the player on a 60/120Hz screen???

$2k for 160fps more on a 120Hz display, yeah right...
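The refresh-rate point can be made concrete with a simplified model: a fixed-refresh panel shows at most one new frame per refresh, so frames shown per second = min(rendered fps, refresh rate), as with V-Sync on. This sketch ignores tearing, input latency and frame-time consistency, which can still differ between setups; the 140 and 300 fps figures are the hypothetical ones from the post.

```python
def frames_shown(rendered_fps: float, refresh_hz: float) -> float:
    """Simplified model: a fixed-refresh panel displays at most refresh_hz new frames per second."""
    return min(rendered_fps, refresh_hz)

# Hypothetical frame rates from the post
setups = {"7970M CrossFire": 140, "980M SLI": 300}

for refresh in (60, 120):
    for name, fps in setups.items():
        print(f"{refresh}Hz, {name}: {frames_shown(fps, refresh):.0f} frames shown per second")
```

Under this model both setups show 60 frames per second on a 60Hz panel and 120 on a 120Hz panel; the extra headroom only matters when a game drops below the refresh rate.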

I am a noob, so could you please explain the difference to me?

And besides that, this topic is about the R9 M295X, not Nvidia products. :[ -

Nope, there won't be a difference in gaming (in games that support CrossFire and SLI). Though we have hardcore benchmarkers in the forum who want the utmost number in 3DMark 11 & Fire Strike. For me there's no difference; that's why I said wait until the 20nm release.

-

Maxheap, could you show the results of the 970M in the Middle-earth: Shadow of Mordor benchmark @ 1080p on Very High, or send them to me by PM to avoid going off-topic?

-

Sadly I canceled my laptop order and won't be getting it for another 2-3 weeks, heck, maybe longer, as I might go for the 4K display. Sad stuff.

-

That is an explanation too.

Apple got the whole industry on its knees, so I bet they get to announce chips like the M295X first. That's what happened with the GTX 680MX, which was also an Apple exclusive. -

First benchmarks of R9 M295X.

Look at 05:47 in this video:

http://m.youtube.com/watch?v=oM2CdF4cc0o

Compare with the GTX 970M found here (the 980M hasn't been benched yet):

NVIDIA GeForce GTX 970M performance in GFXBench - unified graphics benchmark based on DXBenchmark (DirectX) and GLBenchmark (OpenGL ES)

Methinks the M295X is running very low clocks to keep from getting too hot in the iMac, because the 970M solidly beats it (in the OpenCL 3D graphics test). -

Or, as we know, the iMac is throttling like crazy, because what can you expect from Apple?

-

Actually, I just saw their new server in the store, the cylindrical thing; its cooling looked pretty

-

The trash-can aesthetic may not be for everyone (I personally think it looks really sleek), but the Mac Pro is an amazing feat of engineering given that it runs cool and quiet with that amount of horsepower crammed inside a tiny enclosure. It doesn't even need mobile GPUs, as it can house two full-blown desktop FirePro cards (2048 cores each) alongside a 12C/24T Xeon.

-

You can beef it up seriously, and the package is so small! At some point I even thought, heck, buy this and you don't need a cluster anymore (it's better than a single node of any cluster I've used so far; a few of these and you've got yourself a cluster). Btw, Alienware's new desktop also looks to have an interesting structure.

-

ThePerfectStorm Notebook Deity

The iMac's obvious lack of cooling means that the M295X must be clocked low to avoid frying itself.

-

I don't think that's the case; I think the difference comes from the OS or from the benchmark being bad. If OpenGL ES is being used on the iMac, that's the biggest issue: a phone API compared to DirectX is night and day. Even if both use regular OpenGL, Windows may still be superior, and drivers may affect things too.

PassMark is another very bad benchmark, and it doesn't even read clock speeds, so average scores are skewed by all the overclocked GPU results. Yet the R9 M295X (still in the iMac, but running Windows this time) scores 4977 compared to 5164 for the R9 280X. Again, the 280X's average is probably higher than what it scores at stock, since some people score 6000+ with 20%+ overclocks.
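The PassMark comparison can be expressed as a ratio, and the overclocking point checked roughly: a 6000 score reached with a 20% overclock implies about 5000 at stock, assuming the score scales linearly with clock (a simplification).

```python
m295x, r9_280x = 4977, 5164  # PassMark averages quoted in the post

print(f"M295X reaches {m295x / r9_280x:.1%} of the R9 280X average")

# Rough check of the overclocking point, assuming score scales linearly with clock
oc_score, oc_gain = 6000, 0.20
print(f"Implied stock score: {oc_score / (1 + oc_gain):.0f}")
```

An implied stock score near 5000 sits below the 5164 average, which fits the idea that overclocked submissions pull the 280X's average up.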

PassMark Software - Video Card Benchmarks - Video Card Look Up -

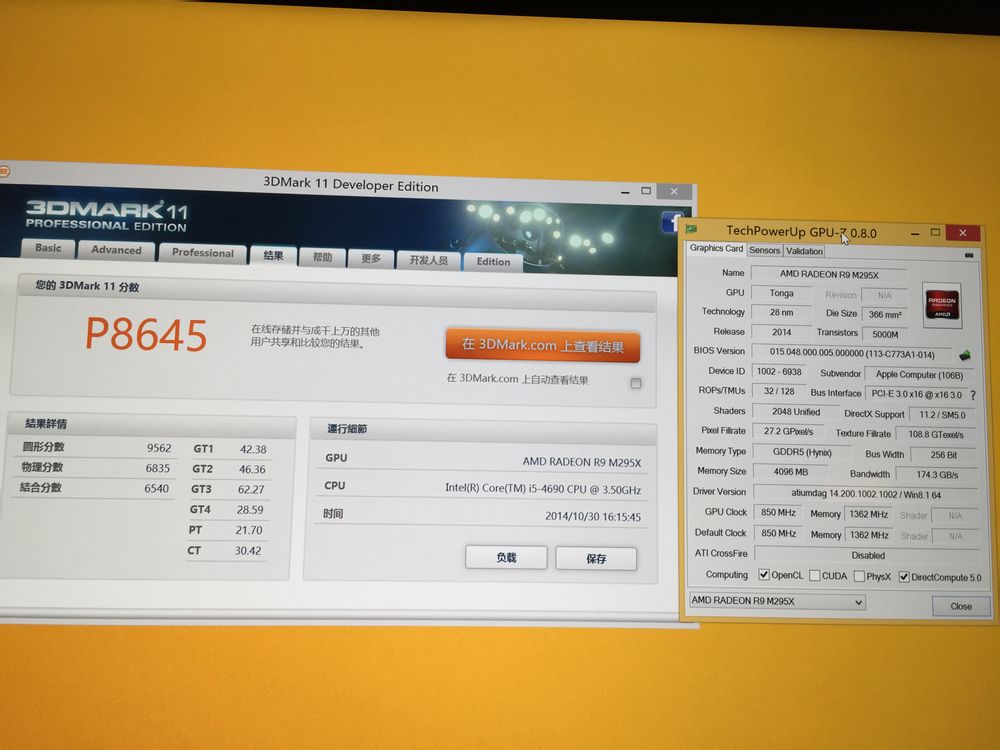

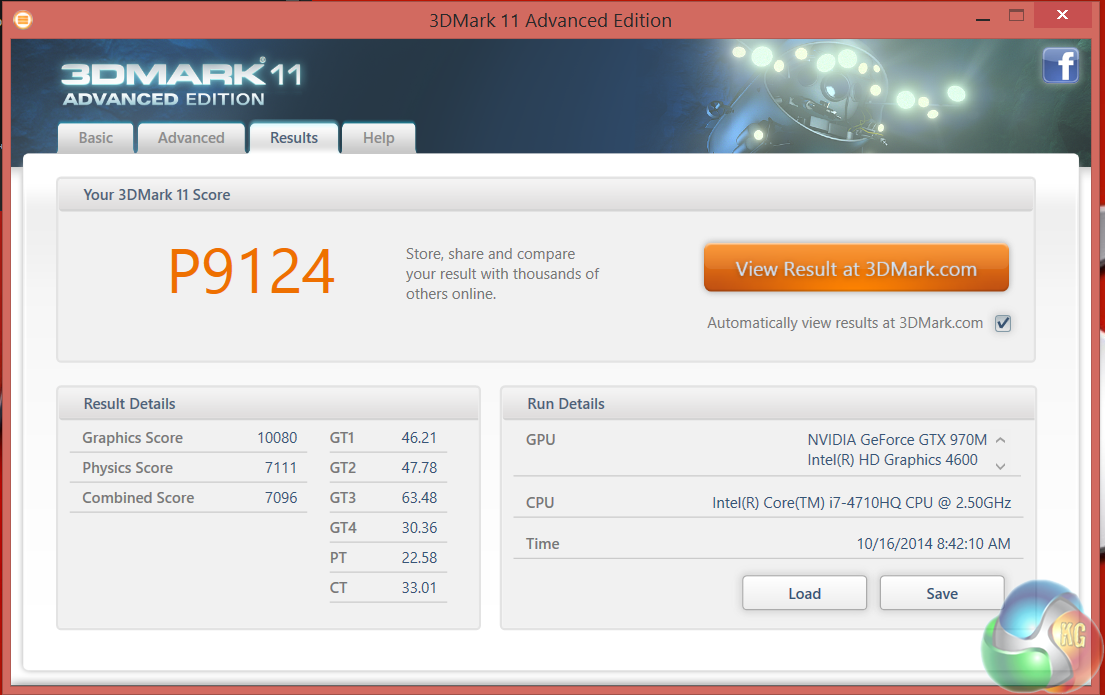

3DMark11

As always, keep an eye on the "Graphics Score"!

R9 M295X (9562)

GTX 970M (10080, +5%)

GTX 980M (12111, +27%)

Note that the M295X's 3DMark11 score comes from the Apple iMac, which is currently the only computer using the M295X, most likely due to its high heat output and power consumption.

But there you have it. -

-

So they are really comparable, up to the 970M. That is in line with what we had anticipated, because AMD doesn't have a next-gen GCN architecture yet. Their best bet would be something near an R9 280X, but that's it.

They are not in a bad position, especially if they price it suitably cheaper than the 970M. -

That's *IF* they are willing to price their flagship cheaper than nVidia's second-best chip. This has never happened before, and I think AMD wouldn't expect it to work. It also needs to be a decent bit cheaper, not just $50, because their mobile drivers are still a mess.

-

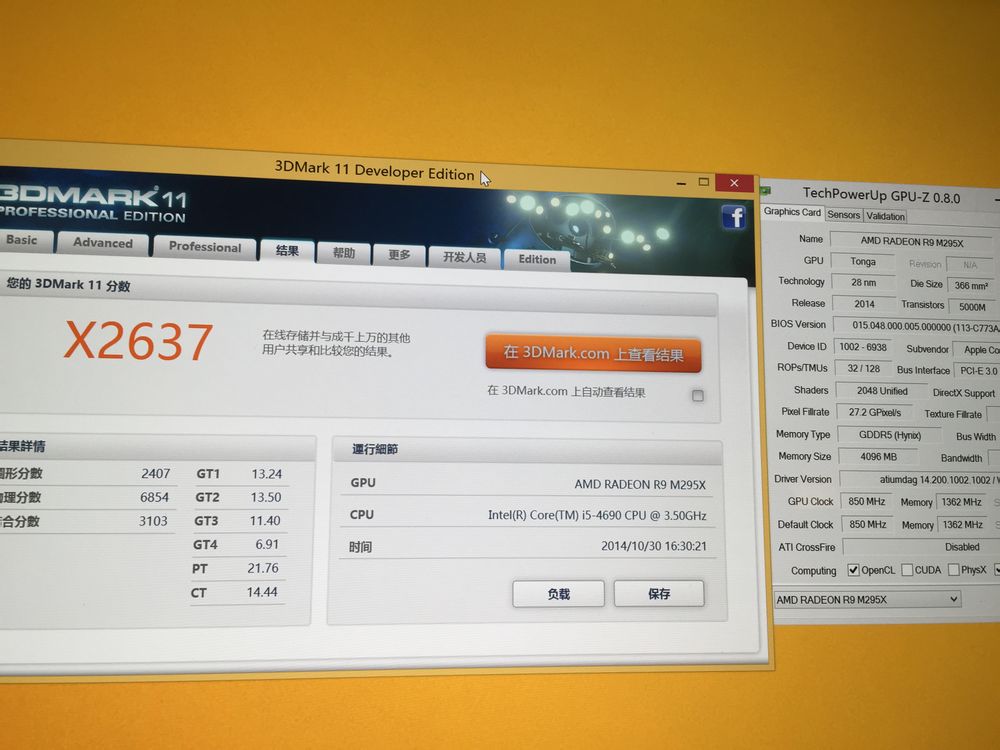

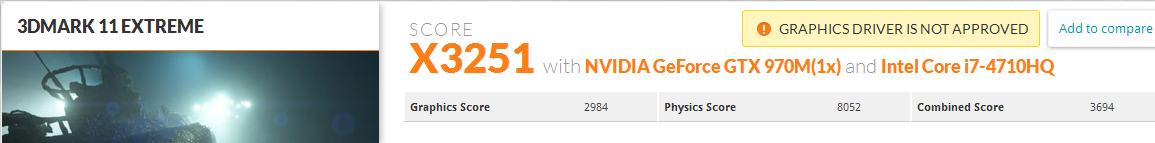

Let's compare them on the 1080p (Extreme preset) benchmark, which is more relevant for us gamers than the Performance preset (720p).

First up,

R9 M295X (2407)

GTX 970M (2984, +24%)

GTX 980M (3856, +60%)
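Putting both presets side by side shows the gap widening at 1080p. A minimal sketch using the Graphics Scores posted in this thread (Performance preset figures from the earlier 3DMark11 post, 1080p figures above), rounded to the nearest percent:

```python
def lead_percent(score: int, baseline: int) -> float:
    """Percent by which `score` exceeds `baseline`."""
    return (score / baseline - 1) * 100

# Graphics Scores quoted in this thread: (Performance preset, 1080p)
scores = {
    "R9 M295X": (9562, 2407),
    "GTX 970M": (10080, 2984),
    "GTX 980M": (12111, 3856),
}

base_perf, base_1080 = scores["R9 M295X"]
for name in ("GTX 970M", "GTX 980M"):
    perf, full_hd = scores[name]
    print(f"{name}: +{lead_percent(perf, base_perf):.0f}% (Performance) "
          f"vs +{lead_percent(full_hd, base_1080):.0f}% (1080p)")
```

Whether the wider gap comes from the GPU itself or from the iMac's cooling or OS is exactly what the rest of the thread debates.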

I'd say with thermals, power and performance put together, Maxwell is leaps ahead of AMD. Note that the R9 M295X may never make it into notebooks due to its high TDP. -

I firmly believe that AMD will hold until 20nm & stacked ram release (which might be around the corner).

-

I am thinking the opposite: iMacs are very thin and use only one fan to cool both the CPU and GPU, if I am right. Maybe they are underclocked... Also, can someone do a comparison between Windows and Mac on the same or nearly the same config to see whether 3DMark runs well on Mac?

-

By the way, I would like to point out that the desktop system has a 3.9GHz quad-core, which can help the GPU more than the 3.3GHz quad-core in the mobile system, Hyperthreading be damned. There's likely a bit more of a performance gap on similar hardware; the best way to test would be to take a 4910MQ + 980M, OC the 4910MQ to 3.9GHz while disabling Hyperthreading, then run the test again and see if the graphics score increases.

-

Still, unless they manage to get over 1GHz on that chip, it won't touch Maxwell. The HD 7000 series is far too old now, and Tonga is nothing more than a TDP tweak. They need a new architecture to compete with Maxwell.

-

Look at the Physics Score results. The mobile CPU scores better than the desktop CPU. Awkward, if you ask me. The iMac MUST be throttling a lot, on both CPU and GPU.

-

And that very same desktop chip puts out quite a bit of heat (which in a single-fan iMac is quite the issue, just like in most notebooks). Call me when it's in MXM-B format and, if possible, tested in the same system as the 970M/980M; then I'll tell you it's solarium equipment.

Till then, I blame the iMac.

-

You do realize that a faster CPU improves the Physics score, yet the laptops have higher Physics scores than a desktop Core i5, which is a bit weird to me. The same issue also seems to be dragging the GPU score down, especially the Extreme one. Whether this is because of the operating system or the iMac's lack of cooling, the M295X should really outperform a desktop R9 285 and perform closer to a 7970/280X, just like in the PassMark score where the iMac was actually running Windows.

-

The 4690 scores more or less what it's supposed to; it's missing maybe 8% in CPU score. Despite this, it should be more than powerful enough not to bottleneck the GPU in a simple 3DMark11 benchmark.

-

It's possible the Physics score is Hyperthreading-dependent (which means that the i5 would need to clock at 4.2GHz or better to match that mobile chip), but that doesn't mean the CPU-side work of preparing frames is also Hyperthreading-dependent.

I could be wrong about that, but I always like to point out CPU differences in scores.

Also, you could be right about the OS it was running on. -

(2048*850MHz)/(1792*918MHz) ≈ 1.058, i.e. about 6% more shader throughput

(M295X: 2048 shaders, 850MHz)

(R9 285: 1792 shaders, 918MHz)

R9 285: 2600 in 3DMark11

R9 M295X: 2400 in 3DMark11

Ideally the M295X should score about 2600 * 1.058 ≈ 2750
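A minimal sketch of this estimate, assuming performance scales linearly with shader count × core clock (a crude proxy that ignores memory bandwidth, ROPs and thermal throttling):

```python
def shader_throughput(shaders: int, clock_mhz: float) -> float:
    """Crude proxy for GPU throughput: shader count x core clock."""
    return shaders * clock_mhz

m295x = shader_throughput(2048, 850)   # R9 M295X
r9_285 = shader_throughput(1792, 918)  # desktop R9 285

ratio = m295x / r9_285
expected_m295x = 2600 * ratio  # scale the R9 285's 3DMark11 score by the ratio

print(f"throughput ratio: {ratio:.3f}, expected M295X score: {expected_m295x:.0f}")
```

By this crude measure, the M295X's actual 2400 falls roughly 13% short of the ~2751 expectation.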

But like D2 says, it could be because of the OS.

But does it really matter? It's still below the GTX 970M (3000) and way below the GTX 980M (3980). -

I finally found a fair comparison between the 2014 and 2013 iMacs running Windows 8.1 (the same chassis, the only difference besides the electronic components being the screen). In 3DMark Fire Strike the M295X scores almost 50% higher than the 780M, and this is in the full test; I expect the difference between the GPU scores to be even higher (high Physics scores won't be helping the 780M anymore). What is important here is that the 780M only scores 4131 overall, yet on notebookcheck you can see that with more recent drivers the 780M easily scores over 5000 points in the same test, so the M295X is most likely also being held back by the iMac's insufficient cooling. It looks like under the same thermal limitations the M295X is 50% more efficient than the 780M, which is really impressive since we are talking about a GCN chip that isn't much different from Tahiti.

Also, to those who claim that Tonga is nothing more than a Tahiti with lower power consumption, think again. Tonga has a few significant improvements besides the much better memory bandwidth efficiency. Its tessellation performance is significantly higher, over double that of Tahiti and even better than Hawaii (desktop 290X). It also has better ROP performance (probably 40% higher than Tahiti at the same clock), although most likely not as much as Hawaii, because Hawaii has double the ROPs. It should also have better double-precision compute performance, but just like Hawaii's, it is most likely cut down in anything besides FirePros.
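The claim that the 2013 iMac holds back its 780M can be quantified from the two figures in the post: 4131 in the iMac versus "easily over 5000" on notebookcheck. Treating 5000 as a lower bound for a notebook 780M, a sketch:

```python
imac_780m = 4131       # 780M overall Fire Strike score in the 2013 iMac (from the post)
notebook_780m = 5000   # "easily over 5000" with newer drivers; used here as a lower bound

shortfall = 1 - imac_780m / notebook_780m
print(f"iMac 780M scores at least {shortfall:.0%} below a typical notebook 780M")
```

Part of that 17%+ gap may be drivers rather than cooling, as the post notes; assuming the M295X in the 2014 chassis loses a similar fraction is a guess, since the GPUs and drivers differ.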

http://arstechnica.com/apple/2014/10/the-retina-imac-and-its-5k-display-as-a-gaming-machine/

Edit: The Fire Strike Ultra GPU score is exactly the same as the best 970M scores, and I am sure the M295X would do better if it had decent cooling. -

The 4690 is about on par with or a bit better than a 4710HQ, but even if it throttled a bit it shouldn't affect the graphics score; CPU throttling is not the problem here.

-

Radeon R9-M295X

Discussion in 'Gaming (Software and Graphics Cards)' started by Tsubasa, Mar 15, 2014.