Well, that's my biggest concern... I dunno how AMD's new architecture is going to be.

And if I buy an Asus G751 I can't change the card, so I'm stuck. Will the 980M be good enough to game on max or nearly max settings for at least two years?

-

-

If AMD releases Tonga on a mobile platform, it 'might' come close to competing with existing Nvidia cards.

On the other hand, AMD will release GPUs with HBM memory in about 5 months from now - THAT is what I'm personally waiting for, because it seems to be the biggest transition from 'standard' technology to something new. -

@Cloud - I wouldn't be so sure that the R9-M295X can't fit in notebooks. What about the thread starter - MSI GX? Trust me, those guys have the info at least a year before the release, otherwise they can't design the mobos, MXM/GPU modules, etc. Take a look at a couple of schematics and compare the dates with the product release dates and you'll see what I'm talking about. Also, I kept up with Apple news for quite some time (not lately, very disappointed with where they went with the iPhone), enough to know that they make sure they have TONS of every part they use in their products. I know how I sound (I still wish for the R9-M295X to happen in MXM format), but you should not mark it gone until it is.

And GTX 680mx is essentially GTX 780m, so it happened in notebooks, just different name

-

Might be in February when AMD releases 20nm Desktop GPUs with HBM. You might want to wait because HBM (3D stacked memory) is a great improvement not only in performance but also power efficiency. If you want nVidia with HBM you will have to wait a year longer.

-

I shouldn't really share this with you AMD fanboys (no offence, a joke) but this could be a benchmark from an Alienware notebook with an R9 200 series card.

Notice the low clock (626MHz) which may fit a mobile 2048 core M295X with low clocks.

Generic VGA video card benchmark result - Intel Core i7-4710HQ,Alienware 0146R1

15200 in Graphic score...

GTX 980M only gets 12600 in Graphics score...
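For anyone who wants the gap as a percentage, here is a minimal sketch using only the two Graphics scores quoted in this post. The function name `percent_faster` is made up for illustration, and the numbers are only as reliable as the benchmark entry itself.

```python
def percent_faster(score: float, baseline: float) -> float:
    """Return how much higher `score` is than `baseline`, in percent."""
    return (score / baseline - 1.0) * 100.0


# Scores as quoted in the thread (3DMark Graphics score).
unknown_r9_200_score = 15200  # the unidentified Alienware result
gtx_980m_score = 12600        # the GTX 980M figure it is compared against

lead = percent_faster(unknown_r9_200_score, gtx_980m_score)
print(f"Lead over GTX 980M: {lead:.1f}%")  # prints "Lead over GTX 980M: 20.6%"
```

So if the entry is genuine, that is roughly a one-fifth lead in this one synthetic test, nothing more.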

Please remember that this may be a benchmark from a desktop from Alienware with a desktop R9 200 card and it could be some faulty detection.

If it really is an M295X inside a notebook, it's a new architecture and it will be faaaaaaast.

PS: Benched today -

Looks like it could very well beat the GTX980m.

If they can knock down the powah enough from the R9-285, we could get a 125-130w TDP card. (Only 17" notebooks would be able to have it tho.)

Wasn't the GTX880m 122w TDP? -

Hm... Would be impressive if it's a laptop... Good thing I held off from the rush to get the 980M...

-

Definitely not an R9 M295X; that score is similar to Hawaii performance, and I don't know what is up with that clock speed (seems like throttling to me). So unless this is an R9 M390X, which will probably be based on Fiji (which will also be used in the desktop 380X and is said to be faster than the GTX 980), it is just a Hawaii 290X, and the benchmark read the clock rate only at the instant it throttled, even though it didn't throttle 99% of the time for the score to be this high.

Edit: Did some calculations and by my estimates R9-M295X in iMac should score about 11100 in 3D Mark 11 Graphics score. -

Huh, interesting, but I doubt it is a notebook GPU. That score is similar to an R9 290X. Most likely a new Alienware PC that uses an Intel mobile CPU?

-

The CPU is HQ (soldered mobile) and the hard drive is 2.5"

Different chassis than the 980m test bed.

-

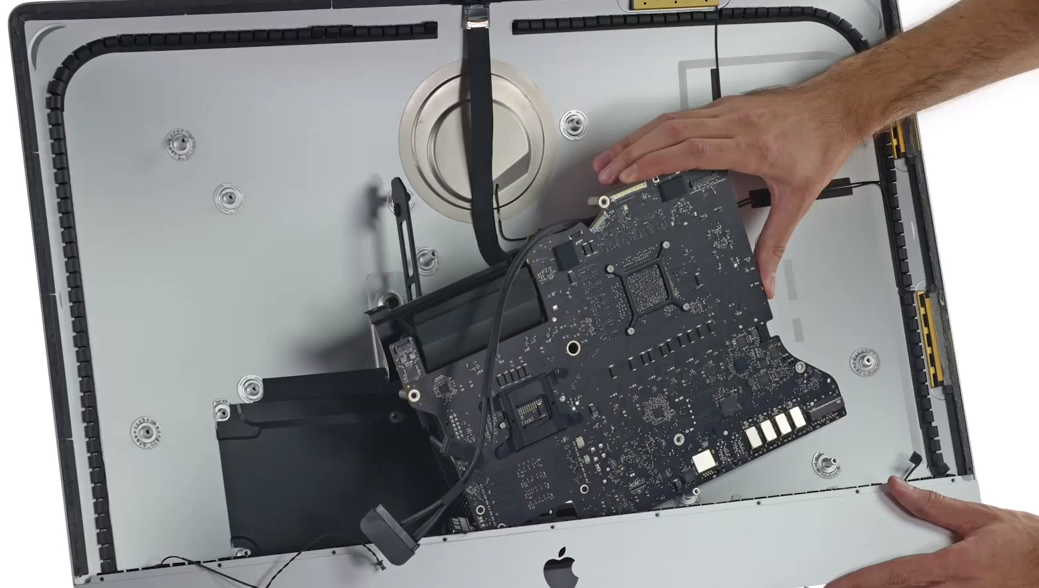

I also think there is no reason why it can't go into a notebook. Just look at how thin the iMac is, and that thing also has to cool a desktop i7 4790K. It's also a large monitor and I think it's only 2.5" at its thickest point (where it connects to the stand), so most of it is probably < 2" thick. I see no reason why a gaming notebook can't handle one, especially since they have larger vents (and possibly denser and taller cooling fin arrays). Older models had a 780M in them, and the dimensions of the newer one haven't changed, yet it can still handle the R9 M295X while keeping a hotter 4790K in check.

-

Here is a tear down of it on iFixit

https://www.ifixit.com/Teardown/iMac+Intel+27-Inch+Retina+5K+Display+Teardown/30260

Sooo... yeah, physically I don't see why that wouldn't also work in a notebook form... doubt the layout would be any different for the R9-M295X. The only catch would be if this was made specifically for Apple, similar to the nVidia 680MX. -

Nice find. Indeed, I doubt the M295X will have a different layout. Let's hope we hear more about this GPU soon.

-

It's precisely what I was looking for yesterday. I thought I'd have to give them a couple of weeks, but they are fast as always.

-

The R9 M295X wasn't made specifically for Apple but they might as well have bought the entire supply of it. We might see it in other products but it may take some time.

-

-

wait a sec bro, explain to me how is the 5750m 30% faster than an i7 4600m when it can barely even beat my old first gen i5 450m?

-

A10 4600M is not the i7 and it is only up to 30% faster thanks to turbo.

-

Considering how the trash can throttles with a Xeon and D500 CrossFire, I wouldn't be surprised if this iMac can't handle Devil's Canyon at all. I think we are overestimating Apple; cooling isn't something they are known to be good at.

-

mind explaining yourself a bit more? 7600p is like 10% faster than 5750m, and 5750m can't even say it can beat a first gen i5 confidently

-

This was my thinking as well. Devil's Canyon is one hot chip. It's not going to turbo much in an iMac.

-

Turbo much? Have you seen what's inside it? I will be surprised if that thing can stay at stock speed without throttling.

-

Nah my 4790K doesn't run hot out of the box. 4770K is easily 10C hotter.

-

'Cause the 4770K uses crappy TIM, whereas Devil's Canyon is soldered to the IHS.

-

Incorrect. Devil's Canyon still uses thermal paste, albeit a supposedly better one, but the main issue before wasn't the quality of the paste but the air gap caused by the glue used to attach the IHS.

-

-

I think the 4790K uses soldered TIM right under the IHS, whereas the 4770K uses crappy paste, no?

-

About time. With that many cores in the config, once it's OC'd a tiny bit maybe performance will skyrocket, who knows. But then again, at this point I consider it all rumor.

-

No solder on Intel chips since Sandy. They are using a "next-generation polymer" instead. Temps are definitely lower though.

-

I think they pulled those specs straight from Wikipedia, and I don't think the boost clock is accurate, at least for the version in the iMac, because it takes 850MHz to come close to 3.5 Teraflops advertised by Apple.
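The arithmetic behind that can be sketched roughly as follows. This assumes the rumored 2048 shader count mentioned elsewhere in this thread and the usual convention of 2 FLOPs per shader per clock (one fused multiply-add); neither figure is confirmed for the iMac part, and the function names are just for illustration.

```python
def peak_tflops(shaders: int, clock_mhz: float, flops_per_clock: int = 2) -> float:
    """Theoretical single-precision peak in TFLOPS.

    Assumes each shader retires `flops_per_clock` FLOPs per cycle
    (2 = one fused multiply-add), which is a convention, not a measurement.
    """
    return shaders * flops_per_clock * clock_mhz * 1e6 / 1e12


def clock_for_tflops(target_tflops: float, shaders: int, flops_per_clock: int = 2) -> float:
    """Clock in MHz needed to reach `target_tflops` under the same assumption."""
    return target_tflops * 1e12 / (shaders * flops_per_clock) / 1e6


RUMORED_SHADERS = 2048  # assumption: shader count rumored in this thread

print(f"{peak_tflops(RUMORED_SHADERS, 850):.2f} TFLOPS at 850 MHz")
print(f"{clock_for_tflops(3.5, RUMORED_SHADERS):.0f} MHz needed for 3.5 TFLOPS")
```

Under those assumptions 850MHz works out to about 3.48 TFLOPS, and hitting exactly 3.5 would take roughly 854MHz, so "about 3.5" and "about 850MHz" are consistent with each other.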

-

So we're looking at GTX 970M levels of performance then from the looks of it.

-

Closer to 980M I would say, if it is clocked at 850MHz (boost) by my calculations the 3DMark 11 score should be about 11100 GPU score which is similar to 980M. 980M does better in Firestrike but then again Maxwell scores are bloated in Firestrike. These are just synthetics though so we should wait for real world tests. 970M on the other hand isn't that fast so M295X shouldn't have any problem outperforming it.

-

2k cores at 850 boost, I would say the initial benchmark by Cloud might be true

Though I still cannot believe they managed it in a 100W TDP package

-

Google Translate

I was just going by the above details. If they say 40% above M290X that puts it on a level of the 970M, or thereabouts. Maybe they have their info wrong. -

It should be 60% faster.

-

If 2k cores, that is just wrong information, I would say it should be better than 7970m xfire (or somewhat equivalent), this is pure speculation though

-

Cool beans. So between a 970M and 980M, realistically speaking? All in 100W TDP. Hmmm, not bad AMD, not bad. Took you long enough to get there though! No doubt will be competitive on price, as always.

-

It'll probably be closer to 980M than 970M.

-

Good news indeed! I am an Nvidia fan by nature (my first GPU was Nvidia and I've loved Nvidia ever since). *HOWEVER*, I am glad that AMD is giving them good competition for a long while (I miss the ATI branding though). I would even be glad if the M295X beats the 980M; that will only make Nvidia work even harder and we will get even better chips next gen! What I love about the 900M series is the power efficiency: the 65W and 85W TDPs of the cards are amazing, and if AMD puts out a 100W TDP beast, that would be the perfect generation, can't get any better than that!

EDIT: Also, I am going into my AMD cycle next gen (as you can see from my sig). So, the 20nm AMD card next gen MUST be amazing.

-

I really hope AMD can deliver some serious competition at a very fair price. It will just force nVidia to release their full GM204 cards that much quicker. Maybe if AMD puts up some decent competition we will see less of these incremental increases and less milking it by nVidia.

-

Well, I am gonna put this out there. Seeing as how the 980M is cool as hell and is apparently an 85W TDP part... a full-core GM204 (whenever they stop being money-grubbing and release it) would be a 100W-110W GPU, which would be both cooler and TWICE as strong as stock 780Ms. Hell, probably twice as strong as stock 880Ms too, if we get a decent core clock mimicking the 980 cards. AMD bringing a 100W chip that's only in the 980M's ballpark still leaves things a bit... lacking. Basically, this is their final-attempt flagship, and I am dead certain it isn't going to be one of those ice cold chips.

In other words, this might kickstart nVidia's full GM204 chip production, but in the same breath it's likely to fall into a 7970M/780M power gap. -

Which many people will be perfectly fine with if it doesn't end up costing an arm and a leg like Nvidia does.

-

Maybe so, but when the GM204 refresh comes in and the current 980M becomes a "975M" or something and comes out at the current 970M's price point, it'll be cheaper than a M295X, except that the M295X has no guarantee that it'll actually be stronger than the current 980M. If the M295X is AMD's best and nVidia's cheaper second best still outperforms it, why would anybody buy a M295X?

Basically, AMD needs to keep top competition with some incentives. M295X can't be their best. -

Ofc not seeing as M295X is still on 28nm. AMD will have 20nm GPU w/HBM or something eventually.

-

GM204 refresh will be called the GTX 1080M. Of that, I'm fairly certain. Or at least a 980MX. They certainly won't rename the 980M to 975M to make room for a 'new 980M' - that's marketing suicide.

AMD has R9 300 series launching sometime next year at 20nm with HBM. That's their best. Will provide fierce competition with GTX 1000 series. -

Good good... COMPETITION. YES. I EAT IT UP LIKE NOM NOM PIZZA SAUCE. YES.

-

Funny thing, my first GPU was an FX5500 which was defective, and I've been rocking ATi ever since.

I do have some experiments with nVIDIA, but not as my main weapon. The competition is always nice and I miss the ATi branding as well.

Those TDP ratings... There's only one proven way to find out which one is cooler - put them in the same machine - end of story.

-

If I'm not mistaken, the R9 300 series on 20nm with HBM should launch sometime in February 2015.

'Fierce competition' is not exactly an accurate description since, according to various reports so far, Nvidia apparently won't have stacked-memory GPUs out until 2016.

In that context, and if what we've seen of HBM so far proves to be accurate... Nvidia could be completely outmatched by several times... at least until they release Pascal (about 10 to 12 months afterwards). -

So much Nvidia hate

What about Nvidia smashing AMD with Maxwell? Both companies are doing a fine job, but they are different companies, they have ups and downs..

-

I still think this M295X is exclusively for Apple due to high power requirements.

I have never heard of the website quoting 100W for the chip, and I find it fishy that they are the only site writing that.

Radeon R9-M295X

Discussion in 'Gaming (Software and Graphics Cards)' started by Tsubasa, Mar 15, 2014.