The majority of apps don't support switching render targets.

Optimus works because apps never change their render target: whether an app uses the iGPU or the dGPU is decided at startup. You can't switch GPUs while the app is already running.

So yes, you could potentially make a freshly started full-screen game run on the external monitor (which would force it onto the dGPU via some kind of control-panel list, like the one Optimus uses). But you can't "seamlessly" switch the whole desktop, with apps already running, from the iGPU to the dGPU when you plug the monitor in without some apps misbehaving.

So strictly speaking, it is possible to implement, but it would be a lot of additional work, and the question is whether the GPU driver makers want to do it. I doubt it; they have enough work with Optimus/Enduro as it is. Also, a properly implemented Optimus shouldn't really cause any overhead, since the copy uses otherwise unused PCI read-back bandwidth. The Optimus 2.0 presentations explained at length why they consider this the best route for continued development and polish.

-

Meaker@Sager Company Representative

There is always an overhead; we have seen 3-5%, and switching your external display to primary ensures no issues.

-

I reiterate: you can't switch GPUs without restarting an app that is already running on one and using its resources. IMO, 3-5% is hardly a significant enough overhead to warrant the hassle.

That's why implementers will be hesitant to spend effort on such a scheme: much of the user base would not even notice they are losing a few percent of performance, but they would start complaining if you told them, for example, that they have to configure which monitor a specific app should run on.

Note that AMD chose to copy the Optimus approach instead of doing something original, even though it meant lagging behind NVidia, simply because it provides too many benefits to ignore.

I am not saying your proposal is pointless, just explaining why it won't really be considered.

Maybe later, when eGPU support brings easier GPU switching/GPU virtualization, they could use the same mechanism to seamlessly juggle internal GPUs. -

Meaker@Sager Company Representative

You seem to be a bit confused. Say you have a program that suffers because Optimus isn't optimized for it or doesn't detect it correctly, or you want to run more than two monitors, or you want to hook up a high-resolution or high-refresh-rate monitor.

You simply plug a monitor in, set it as your primary display and run your application.

This implementation is up and running on our machine.

You can take a 3D window running on the dedicated card and drag it over to an Optimus-powered display, or vice versa, and it will still run.

I have tested this personally. -

What's the expected improvement in battery life in the refreshed Clevo P150EM (Sager NP9150) with Haswell? Will there be a price bump in the new version?

(BTW, this is my first post!!!) -

Meaker@Sager Company Representative

No idea yet, but it's the same process so nothing too huge.

-

Since I already feel set with my Sager NP9150EM for the next few years, the Sager C22T Tablet has me intrigued, especially since I wouldn't mind something more portable than my huge laptop that I could also write notes on. It wouldn't be as bad if I could get 5 hours of battery life instead of 2.5-3. I always have to bring the 180W power brick with me, which makes my backpack noticeably heavier than the laptop alone would.

-

Well, if I have a program that Optimus does not detect, I have a context-menu option or a list in the control panel that tells it to run on the dGPU. You have simply replaced this step with "set primary display, run program there", but it is still an extra step, and I don't see how it makes things better. Also, how do I run an app on the dGPU if I don't actually have an external display?

Also, AFAIK Optimus does not require any special "optimization" from apps. It's really simple: everything is rendered on the dGPU as usual; it's just that on the Present call, finished frames are copied across to the iGPU instead of going straight into the front buffer on the dGPU. This copy step is pretty transparent, and no other special functionality is required from the app. The only place it can break is that it must somehow know which GPU an app should use when you start it. When this detection fails, you get performance problems (or the dGPU is used unnecessarily), which is why the manual override exists.

And dragging windows as you described does not change the GPU the app started on. The app always keeps running on its original GPU; its frame buffer is just copied to whichever GPU drives the display you dragged it onto. So if you started an app on the iGPU and drag its window onto a dGPU display, it will display there just fine, sure, but it will not gain any performance. -
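The behaviour described in the post above (GPU chosen once at launch, frames copied across on Present, dragging a window only changing where frames are shown) can be sketched as a toy Python model. This is a simplified illustration under stated assumptions, not how the NVIDIA driver is actually written: `PROFILES`, `App`, `drag_to` and `present` are all hypothetical names.

```python
# Toy model of a muxless (Optimus-style) laptop. All names here are
# hypothetical illustrations, not a real driver API.

# Hypothetical per-application profile list, standing in for the
# control-panel setting that says which GPU a program should use.
PROFILES = {"game.exe": "dGPU"}


class App:
    def __init__(self, name: str, display_gpu: str = "iGPU") -> None:
        self.name = name
        # The render GPU is picked once, at launch, and never changes.
        self.render_gpu = PROFILES.get(name, "iGPU")
        # The GPU driving the monitor the window is currently on.
        self.display_gpu = display_gpu

    def drag_to(self, display_gpu: str) -> None:
        # Moving the window only changes where finished frames are shown.
        self.display_gpu = display_gpu

    def present(self) -> str:
        # On Present, the frame either goes straight to the render GPU's
        # own front buffer or is copied across to the display GPU.
        if self.render_gpu == self.display_gpu:
            return f"rendered on {self.render_gpu}, shown directly"
        return f"rendered on {self.render_gpu}, copied to {self.display_gpu}"


game = App("game.exe")
print(game.present())   # rendered on dGPU, copied to iGPU

notes = App("notes.exe")
notes.drag_to("dGPU")
print(notes.present())  # rendered on iGPU, copied to dGPU
```

Note that `drag_to` never touches `render_gpu`, which is the point being argued: moving an iGPU app onto a dGPU-driven display changes only the copy destination, not where the rendering work happens.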

Meaker@Sager Company Representative

There are professional applications, along with certain games, that don't like Optimus. There is no "manual override", so if it's broken, it's broken. You can set the dedicated GPU globally, but it's not the same thing.

Plus, those few percent are what some overclockers strive for, so simply ignoring them makes little sense.

You also get things like display refresh-rate overclocking, which you don't get in Optimus setups, so you can get one of the new 1440p displays and OC it to around 120Hz. -

Could you give examples? Like, what app, and how it breaks?

There is a control panel that lets you set per-application profiles specifying which GPU to use, and you only do it once per problem app. Works for me. Manually selecting the primary monitor every time I switch displays is not the same, but it isn't any more convenient either.

It makes perfect sense, simply because there aren't many people throwing tantrums over 3%, and they will just have to get over it.

It will become perfectly possible on Optimus once Intel adds custom video modes to its drivers; it's not a hardware issue. -

Meaker@Sager Company Representative

When you select an application, it still has to be detected, and Optimus still routes output through the Intel chip. If that itself isn't working, you can have issues.

I can't remember which ones specifically, but we have received calls from people about it.

If you don't need that last 3%, that's fine, but some people would like the option, and they now have the display options to get it.

All you have to do is set the external display as primary when you plug it in (and make that the default in future). After that you are good to go. -

Sager Tablet... all right, you have my attention. Got any info on this? This thread is the only place I could find that mentions it.

Edit: Derp, never mind, buried post, found it. Nothing to see here. IGNORE ME! -

Hi guys,

First-time poster, looking forward to the P150SM, mostly for the second mSATA option (with 480GB mSATA drives coming out and hopefully dropping in price over the next few years).

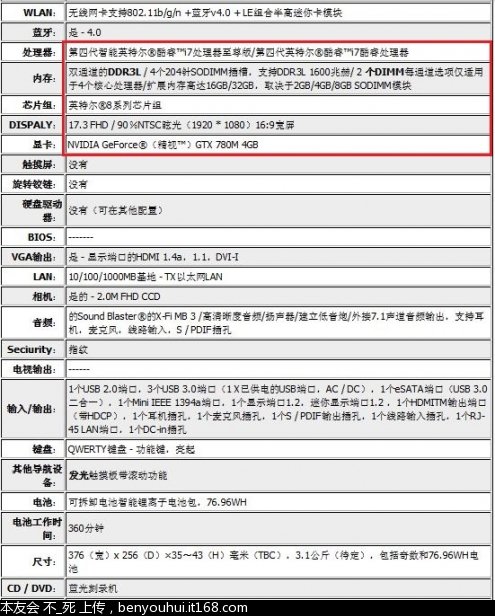

Anyway, sorry, I haven't read through the entire thread, but I came across this P150SM spec sheet:

http://www.computex.com.tw/PhotoPool2/201303/201303201610320728.pdf

In case it hasn't been posted yet.

Cheers, -

Perfect specs... except for the screen ratio/resolution.

In 2013 we need higher resolutions than 1920x1080; hopefully the 17.3-inch model will offer higher-resolution options. -

Some of this stuff looks great.

I'll probably have to try to sell my W150ER and pick up a W230ST if the GPU is GDDR5; if not, I will have to brace my bank account and pick up both a W230ST and a W650.

I just really hope we see Thunderbolt, though. The only reason I got the W150ER was that I needed 1080p to be productive; I would be happier with a smaller size. -

Hm, I wouldn't call those specs perfect...

I mean, what's different aside from the 2x mSATA and the mini DP? I'll wait another year.

-

I liked the post with the specs because it clearly showed me that I should not place any expectations or plans on the next-gen Clevo.

-

Meaker@Sager Company Representative

Well, here we see the fallout of the driver issues: no AMD option, if that slide has all the details.

Two DisplayPorts and HDMI, so it looks like there is multi-monitor support. -

You really think they would cancel the AMD option altogether? That would suck big time...

-

Nah, it's more likely because AMD hasn't given them permission to, in effect, announce the 8900M for them. The same thing happened with the HM series of notebooks, when the GTX 485M was known before the 6970M. I'm pretty sure only Nvidia graphics are mentioned in all of the early PR releases.

So are these using actual Sound Blaster chips? Or is it just software? -

I'd be surprised if it used Creative hardware. Why wouldn't it just use Realtek again? Or what Asus has been using, C-Media's chips; they sound better anyway.

Speaking as someone not satisfied with the 7970M, I'm starting to think maybe they should, and good riddance. AMD had better hope consoles and their ARM server chips save them; they lost 1.8 billion last year. Personally, I won't be buying AMD again, as I'm just not confident this company will be around much longer. They still can't get into the tablet or smartphone market, and PC sales continue to plummet. Yeah... -

There are no "Sound Blaster" chips. Creative buys the DACs/amps from OEMs like everybody else and puts its own software on them (e.g. the X-Fi Go uses a C-Media DAC).

-

I guess I'm just wondering what SOUND BLASTER® X-FI® MB3 actually means for the sound quality we should expect.

-

Meaker@Sager Company Representative

It will likely use a codec, but hopefully they set some minimum requirements for the DAC and output quality.

It would be awesome if, like Asus, it had its own separate region of motherboard space (hell, a daughter board), but that's most likely wishful thinking.

-

The Sound Blaster MB is a set of Sound Blaster features on top of Realtek, but you need a license to use it. I think Asus uses it too. It's BS, is what it is: just effects on top of Realtek's drivers.

-

X-FI® MB3 is just a software "enhancer" like THX TruStudio.

Basically useless, just a way to cash in on the Creative label. "MB3" stands for "for cheapskate hardware vendors". -

I hate that...

-

I have read that the new P1x0SM chassis are due around mid-April.

What is the educated consensus on when actual SM laptops built by resellers/system builders will hit the consumer market and be available to order? -

I doubt it's gonna be before June/July at the earliest, unless some builders decide to offer the new chassis with current-gen GPUs and IB CPUs...

Wait, scratch that: IB and Haswell aren't compatible, so yeah, you'd HAVE to wait until Haswell comes out.

-

Larry@LPC-Digital Company Representative

^^^^^^ Yes, we are going to hear that this or that dealer is posting these new machines for sale from now until Intel's release date for the new platform.

They do this every year... it's just a way to get you to visit their website...

-

Yes. All machines will start shipping in late June at the earliest.

-

Oh dear. What's with these ultra-tacky grilles on the P157SM???

-

Speed holes, they make the laptop go faster.

-

Killerinstinct Notebook Evangelist

The P157SM photos are inconsistent: the top image shows the left side with the USB ports and such, while the photo below it shows the audio ports there.

-

Meaker@Sager Company Representative

The 17-inch is using an image of the 15-inch; they are using mirror-flipped images.

-

They all look a bit chunky, no? Especially the 15-incher...

-

Aren't they adding an additional storage drive slot to both units? That might be why they look a little on the bulky side... gotta make room for it.

-

-

From 本友会, Chinese?

-

Anyhow, the 15-inch model is too small for an additional slot.

-

According to the Mobilator page, before the machine got pulled, both would have 2 mSATA and 2 HDD/SSD slots... given the extra girth, that has some merit. And I know everyone would rather the extra size went to cooling than to storage.

-

One of the three available SM 15-inch models will have two regular storage bays (one for 9.5mm, the other for 7mm drives) plus 2 mSATA slots; that's why it's also slightly thicker and heavier than the other two revisions.

-

They're basically the same as MSI's 15" chassis: thicker and heavier because of the second HDD slot, with a similar "flashier" look. It's clear that Clevo is trying to take a bite out of MSI's customer base, but it's unclear what advantage over MSI it offers to sway them.

-

Meaker@Sager Company Representative

Well, the next MSI Super RAID edition will have 3 mSATA ports lol, but there are plenty of SATA III ports to go round.

Now you can build your massive RAID arrays if you want: 2x 500GB M500 mSATA + 3x 500GB M500 2.5" would give 2.5TB of storage space with a maximum throughput of 2.5GB/sec lol. -
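The 2.5TB / 2.5GB/sec figures in the RAID post above are simple RAID 0 arithmetic. A minimal sketch of that calculation, assuming roughly 500 MB/s sequential per M500 (a ballpark figure, not a measurement) and perfectly linear scaling across drives; `raid0_estimate` is a hypothetical helper name:

```python
# Back-of-the-envelope RAID 0 figures for the array in the post.
# Assumption: each M500 sustains roughly 500 MB/s sequential, and
# throughput scales perfectly with drive count (an ideal upper bound).

DRIVES_GB = [500] * 5        # 2x mSATA + 3x 2.5" M500, 500 GB each
PER_DRIVE_MB_S = 500         # assumed per-drive sequential speed


def raid0_estimate(capacities_gb, per_drive_mb_s):
    """Return (capacity in GB, ideal throughput in MB/s) for RAID 0."""
    n = len(capacities_gb)
    # RAID 0 stripes evenly, so every member contributes only as much
    # space as the smallest drive.
    capacity_gb = min(capacities_gb) * n
    # Best case: all members stream in parallel.
    throughput_mb_s = per_drive_mb_s * n
    return capacity_gb, throughput_mb_s


cap, speed = raid0_estimate(DRIVES_GB, PER_DRIVE_MB_S)
print(f"{cap / 1000} TB, {speed / 1000} GB/s")  # 2.5 TB, 2.5 GB/s
```

This is the "maximum" in the sense of an ideal ceiling; real arrays usually fall short of linear scaling.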

Any info on a W110ER successor? Adding a backlit keyboard would be my wet dream.

-

Meaker@Sager Company Representative

The 11-inch is not being refreshed; you will need to look out for the 13-inch.

-

You do see that the LED indicator/touch display/whatever it is above the keyboard is different on the P157SM and P177SM, right?

Oh yes. I'm really looking forward to the upcoming notebooks, especially the GT70 with Super RAID 2: 3x mSATA SSDs, each hooked up to its own SATA3 port. 1500MB/s, yes thank you.
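The 1500MB/s figure for three mSATA SSDs, each on its own SATA3 port, can be checked against the port ceiling. A minimal sketch, assuming roughly 500 MB/s sequential per SSD (a ballpark, not a measured value); the 6 Gbit/s line rate and 8b/10b encoding are SATA III's, and the helper names are hypothetical:

```python
# Rough ceiling for 3 SSDs, each on its own SATA III port.
# Assumption: ~500 MB/s sequential per mSATA SSD (ballpark, not measured).

LINE_RATE_MBIT_S = 6000       # SATA III signalling rate: 6 Gbit/s
DATA_BITS, LINE_BITS = 8, 10  # 8b/10b encoding: 8 payload bits per 10 sent
SSD_MB_S = 500                # assumed per-SSD sequential speed


def port_ceiling_mb_s() -> float:
    # Payload bandwidth of one port, converted from Mbit/s to MB/s.
    return LINE_RATE_MBIT_S * DATA_BITS / LINE_BITS / 8


def array_mb_s(ssd_count: int) -> float:
    # Each SSD has a dedicated port, so each is limited by whichever is
    # slower (the drive or the port), and the ports add up.
    return float(min(SSD_MB_S, port_ceiling_mb_s()) * ssd_count)


print(port_ceiling_mb_s())  # 600.0
print(array_mb_s(3))        # 1500.0
```

Since each port tops out around 600 MB/s of payload, the drives rather than the ports are the limit here, which is why giving each SSD its own port lets the speeds add up.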

-

According to the Chinese pictures, battery life is 6 hours. Is that credible?

-

On the integrated Intel HD 4600, 6 hours is a safe bet... most likely not so much if you are using the dedicated 780M continuously.

-

I get ~5 hours of normal web surfing on my P151EM, so I would think it could easily be extended to 6 hours by turning the display brightness to minimum and doing pretty much nothing (just to get maximum battery life).

Next Gen Clevo

Discussion in 'Sager and Clevo' started by BBoBBo, Jan 23, 2013.