Dragon Age: Inquisition is pretty well optimized and not extremely buggy. It's not like Skyrim at all.

-

Just ran the game for the first time tonight. I can officially say that the saying "but can it run Crysis?" should be changed to "but can it run Assassin's Creed Unity?" Absolutely gorgeous game, though.

-

Really?

TotalBiscuit says it looks like butts, especially texture-wise, on anything but Ultra High. He has to drop it down to Very High--at the expense of a significant drop-off in visual quality--in order to keep 60 FPS @ 1080p. On his 5930K and 980 SLI.

He mentioned how even supposedly efficient post AA such as FXAA and SMAA have a disproportionately large performance hit, which is simply inexplicable as they are basically free forms of AA in every other game.

This game looks average to below average in videos and doctored screenshots using unplayable levels of SSAA, and definitely does not justify its hardware requirements at all.

-

I notice almost no difference in quality between Ultra and Very High. -

Didn't you also say you notice no difference between 30 and 60 FPS?

-

To be fair, he was using SLI and SMAA T2x, which means it forces SMAA M2GPU (which I could imagine would have a massive performance hit on this already-unoptimized game that maxes 4GB vRAM buffers with multisampling) which he didn't know about. But even with that, the performance is still butts.

Also, it appears SLI does nothing in the game, as PC Gamer said they managed to run the game on Ultra with a 970 and an i5 and get ~50fps or so. So that's a thing... maybe. I wonder if anyone ever checked whether it made use of the second GPU, or how well usage scaled? -

I watched the video when he was flipping thru the options menu, and it looked like SMAA wasn't even available at all due to SLI?

SLI scales fine, but even on a single 980, to have that level of shoddy performance and not look like a game 5 years from the future is ridiculous. Ubi$oft wasn't exaggerating when they said a 680/970M is the minspec, because it really is this time! -

Yeah... I wonder if he was using MSAA flat out and said it was SMAA by mistake? If that's the case, it probably explains the massive performance hit.

But still, if PC Gamer uses an i5 and a single 970 and gets ~50FPS on max at 1080p, and he gets ~60fps or so on two 980s with an OC'd (I think) 5820K/5930K, then... it looks like SLI isn't doing anything. Granted, going from a 970 to a 980 doesn't usually mean +10fps, but who knows with this game.

The best thing would be to ask that user with the 980Ms, whose name starts with a V (I forget the rest), how much vRAM the game uses, too. I wanna see if it hits high levels. -

Yup, he switched the M and the S. At one point he was talking about using "8x SMAA" LOL.

But before that, he said even FXAA had an impact, which is just like wuuttt. That's why he played with AA completely turned off. -

#TBMixups

But yeah, I noticed people complaining about it. Even so though, I find the tiny increase in FPS between a 970 with an i5 and two 980s with an extreme haswell i7 to be... too little. Something isn't right with that SLI scaling. -

What's scary is that Ubisoft isn't fully done slaughtering frame rate yet. They will add tessellation later. I'd imagine the game will be fully unplayable for AMD cards at that point, exactly as Nvidia intended. GameWorks strikes again.

Assassin's Creed Unity Graphics & Performance Guide | GeForce -

Could be the game bottlenecks on one main thread so even with the extra cores and GPU horsepower it doesn't run appreciably faster.

#Ubitimization -

Maybe... TB said he gets ~60 or so, but a user, Very Traumatic, has the same setup except the CPU is a 4.5GHz 4930K, and he gets an average of 70fps on max (I think with FXAA). But I know he'd buy superclocked cards and OC his GPUs, so that may also have an effect... but yeah, somewhere there's a huge bottleneck. SLI obviously isn't doing much of its job in that game, whether because the drivers are broken or because the game is bottlenecking before SLI can do its work.

-

Meh. I don't believe in any GameWorks unoptimization issues coming from Ubisoft. Because Far Cry 3, for a LARGE amount of time, ran so much better on AMD cards that simply using equal-strength cards from nVidia could result in something like 15-20fps less at times. I remember a bunch of benchmarks, even from users on this forum, where the 7970M was tearing 680Ms apart in that game. Now it seems nVidia is tearing AMD cards to shreds until a bunch of driver updates come out and stuff.

I think Ubisoft is just a special case of awful XD.

Either way, the min spec is still too high, because the Xbox One can do it at 900p High at 25-30fps. Min spec is still 720p Low at 30fps. It just shows how much extra unoptimization PC has, needing a GPU over 2x as strong and a CPU about 2x as strong to get a worse experience. What still amazes me is that people are still excited for Far Cry 4 in the midst of all this. -

One thing they did do well is the number of moving objects in the FOV, obviously procedurally generated (see the magical dress lady in TotalBiscuit's video). It's still amazing that they managed it, and it's probably why the game is constantly crunching numbers, likely by brute force, which drags performance down. It really looks gorgeous, though. TotalBiscuit did zoom in on some parts where the textures were not particularly amazing, but IMHO textures aren't the only thing that makes a game look amazing.

-

I mention tessellation because it notoriously performs worse on AMD than on Nvidia. Now whenever I see tessellation being added after release in some Nvidia-aligned title, I automatically think of nefarious cases in the past such as CoD Ghosts' tessellated dog fur and Crysis 2's invisible tessellated water mesh and tessellated concrete blocks. Significant performance hit on both brands, but AMD cards suffer more due to their architecture, which is one way of making Nvidia cards look better.

About Far Cry 3, I'm not sure what you describe was ever the case. Looking at benchmarks, even launch-window ones where any extra AMD optimizations would've shown through, it was actually Nvidia cards that performed noticeably better:

The trend I've noticed over the years is that whenever a game is optimized for AMD (Gaming Evolved), it runs well for everyone. If the game is a GameWorks/TWIMTBP title, it's almost always a stinker performance/optimization-wise. Of course, it doesn't help either when Nvidia aligns itself with companies like Ubisoft...

![[IMG]](images/storyImages/X3rAkzN.jpg)

![[IMG]](images/storyImages/1920_01.png)

Haha, I'm sure the massively jarring pop-ins and LOD transitions, while funny at first, would get annoying fast once they start interfering with gameplay, such as running into NPCs and terrain objects that suddenly appear out of thin air right in front of you.

I've been through this crap with PlanetSide 2. In huge battles, when the server is buckling under the sheer stress of a massive concentration of players in a small area, dynamic culling makes them pop in and out literally 5 meters away. So you'd get killed by enemies you can't see, or accidentally teamkill or run over friendlies you never see or who don't appear until it's too late. -

^^ Yeah, it's annoying when it happens, but I didn't see much of it (during the 2 hours I've played so far).

-

Assassin's Creed Unity Benchmarks - Notebookcheck.com Tests

Those garbage framerates even on Low, haha. This is unbelievable.

-

1024x768 Low...must look like a game from 15 years ago.

-

dumitrumitu24 Notebook Evangelist

I guess that this guy is lying?

https://www.youtube.com/watch?v=r5I5vpfS32s

Config:

1080p
i5-4200M
16GB RAM
Average 40FPS, best case above 50
All Ultra, except Shadows: High and AA: FXAA

Average 40fps on a 770M with an i5 at 1080p? Maybe he means the GTX 770. I'm not even sure a GTX 770 can achieve such a framerate. -

Well, I always take Notebookcheck benchmarks with a grain of salt. They're a good indicator, but in my experience I always manage to get a better framerate than they do with the right tweaks and settings. They use a particular set of settings, I guess.

Maybe they always have V-Sync on, or some other useless monster eye-candy setting you can easily drop.

Damn, I was pretty sure I could throw anything at my 750M for a while as long as I played at 768p Medium (I'm not a graphics nazi). Guess I underestimated Ubisoft. This is ridiculous; any game should run easily at 768p with modest settings. -

What's up with the cutscenes running at only 17 FPS? In every other game, the FPS in cutscenes goes way up.

-

Why no frame rate counter if he has nothing to hide? Because he's lying. 40 FPS isn't his average, it's his max. He's getting about 20-25 FPS on the streets and it looks even laggier because of some microstutter (uneven frame pacing). 30-40 FPS is only when the character is climbing around by himself or indoors.

NBC's tested 20 FPS for the 770M looks about right considering they used 2x MSAA and a more demanding test sequence that was running through the streets the entire time. -
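To illustrate why an "average FPS" figure can hide microstutter, here is a minimal Python sketch using made-up frame times (not measurements from the game): two runs with the same 40 FPS average, one evenly paced and one alternating between fast and slow frames. The function names are hypothetical, and using the slowest frame as a "worst frame FPS" is just one rough way to quantify uneven pacing.

```python
# Hypothetical frame-time samples in milliseconds (illustrative numbers, not game measurements).
smooth = [25.0] * 8          # evenly paced: every frame takes 25 ms
stutter = [15.0, 35.0] * 4   # same total time, but frames alternate between 15 ms and 35 ms


def average_fps(frame_times_ms):
    """Average FPS = frames rendered / seconds elapsed."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)


def worst_frame_fps(frame_times_ms):
    """Instantaneous FPS of the slowest frame, a rough proxy for perceived smoothness."""
    return 1000.0 / max(frame_times_ms)


for name, times in (("smooth", smooth), ("stutter", stutter)):
    print(f"{name}: avg {average_fps(times):.1f} FPS, worst frame {worst_frame_fps(times):.1f} FPS")
```

Both runs report an average of 40.0 FPS, but the stuttering run's slowest frames only manage about 28.6 FPS, which is why uneven frame pacing can feel laggier than the average suggests.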

I have a 770M with everything all the way up except AA, and I'm barely getting 23 to 29 fps. On rare occasions it's above 30. And in cutscenes it's around 14 to 17 fps. Crazy.

-

The cutscene bug seems fixed, no? (There was a ~0.5GB patch yesterday, I think.) I'm not seeing any frame drops anymore. Even with the cinematic experience, I'm liking the game, thanks to the setting.

-

Working as intended. This is why they are called "cinematics."

-

So... the LTT forums have people with loaded meme cannons aimed at Ubisoft. Here we go!

First this post: AC: Unity isn't that bad! - PC Gaming - Linus Tech Tips

And then this picture

![[IMG]](images/storyImages/post-54950-0-71257000-1416055626.jpg)

-

What settings?

-

killkenny1 Too weird to live, too rare to die.

He means it as historical era/city/atmosphere/etc. -

Well, when my GPU actually agrees to stay at 99% load, I get 30-40FPS in Paris, maxed out with FXAA at 1920x1080. Seldom dip below that. Can get higher in the more linear sequences. Minus a certain tower climbing bit. That was way more demanding than it had any right to be.

Cutscenes no longer drop frames as bad as they did at launch and the game looks fantastic. LOD transitions, especially for crowds, are still weird but better than they were. No huge fps drops even in one particular mission that has thousands of NPCs on screen.

Shame it still crashes once every 2 hours or less. Super unstable. Can't change graphical settings mid-game either unless I want a hard lock. -

Wow, that screenie looks good indeed, but Unity's visuals are still much better than Rogue's, IMHO. Cinematic experience aside, the game honestly looks good (it might be a matter of taste, though, as I really like the French Revolution setting).

-

How much vRAM does it use? You're the only one with an 8GB vRAM card who regularly replies in this subforum, so it'd be cool if you could tell us for these unoptimized titles.

If you want, you can install the free version of PlayClaw 5 and use the GPU overlay to see real-time GPU and vRAM usage. -

MSI Afterburner shows vRAM usage; I can just Alt-Tab and take a look at the graph.

-

Okay. Well how much does it use? XD. Everyone with 4GB says the game maxes it. Like every other AAA title since August.

-

Maybe so, but...

![[IMG]](images/storyImages/ubinewvzu16.jpg)

-

killkenny1 Too weird to live, too rare to die.

^^^Wait, I even need GTX980SLI to view this .gif? Nice job Ubi!

-

Oh I love it

-

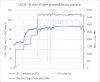

Caveats first: I haven't had a chance to play the game much at all, and I have no idea whether the scene I was running around in is representative of the rest of the game. That said, I dumped the GPU load/temp/memory usage and graphed it. The game was set to Ultra on everything and ran at 1920x1080. I'm not sure you can conclude it won't go above 4GB in other scenes, though. But anyhow, I hope it helps.

Edit 1: Run on P35X CF1

Edit 2: The memory usage metric was taken from GPU-Z and is "Memory Usage (Dedicated)". -

Now THAT'S interesting... the game eats up 4GB vRAM, but doesn't ever go above 4GB... it feels like it's just designed no matter what to eat up 4GB vRAM and what, 2GB system RAM? I don't get why somebody would code that...

-

For the cinematic experience brother

-

Watched Linus and Luke rip apart Ubisoft and AC Unity on last week's WAN Show. They mentioned how even CoD: Advanced Warfare looks better.

-

What is the best AA to use in this game? I mean, what looks good and performs well? I have a 770M with a 4800MQ. There is a lot to choose from, and I really don't know the difference between them. I know maxing everything out is the best experience, but I only have a 770M, so I was wondering which of the lower settings is best for performance and looks.

-

Use FXAA.

-

Thanks, I will try that. I'll have to check when I get home, but I think I've been running it on TXAA or something like that.

-

LOL how do you tolerate the severe blur of TXAA, it's even worse than FXAA. And TXAA performance stinks too, same as 4x MSAA.

I think you will get a nice FPS boost and sharpness increase (never thought I'd say this) with FXAA. :thumbsup: -

moviemarketing Milk Drinker

Interesting comments from someone claiming to be on the Ubisoft development team: Alleged Ex-Ubisoft Employee Talks About His Experience - ASidCast ASidCast

![[IMG]](images/storyImages/10365850_710710982350287_8205349610759450532_n.jpg)

-

No words to describe this.

-

What's the quote from octiceps's signature again?

-

CPU running at 100%

-

I also heard frametimes were a lot better on 900 series nVidia cards and that even older 700 series (even if stronger, like 780Ti vs a 970) would suck. So... no idea what went on there. If nVidia is conspiring to screw over anybody not using their cards, they've gone to new lengths this time because anyone not using their latest is screwed too.

I'm actually starting to have doubts about Ubisoft being this bad on their own now. Man I hate the AAA gaming industry right now.

Assassin's Creed Unity system requirements (Confirmed)

Discussion in 'Gaming (Software and Graphics Cards)' started by jaug1337, Oct 23, 2014.