What we're saying is, if 20nm Maxwell is the 980M and 970M, then it's a MASSIVE disappointment that it doesn't even match 28nm desktop cards... unless the 980M is in fact a 75W card, and 970MX and 980MX cards are going to come out that make the entire thing look like poo splattered everywhere. But in that case, why not release those cards now? Because you don't want desktop to be outstripped by mobile? That's ALSO stupid as hell.

-

-

Killerinstinct Notebook Evangelist

Interesting, you're finally starting to think. The 980M could be at only 75W TDP, and yes, why wouldn't Nvidia release a 980MX and 970MX later on? They did use GK104 across the 600-800 series to make a profit.

What do you mean that I don't want desktop to be outstripped by mobile? I don't care what the hell happens to the desktop portion since they don't have any design constraints like laptops, which makes laptops far more interesting since they have to innovate to get power to a small form factor.

Why did they release the cut down version of the gk104 instead of the full gk104 version when they released gtx 680M? Then they release the gtx 780M the next year.

Nvidia could also release two versions, 28nm and 20nm, for mobile parts as well, to sell their slim laptops at outrageous prices. -

When I said "you" I meant "they" as in "nVidia" as if I was asking them a question.

Also, the 780M required more power than was currently accepted in laptops to run and cool: 120W per card. Laptops needed to be sold with 240W PSUs, and SLI machines had to get 330W PSUs, as opposed to the 220W/300W units that were previously the standard. The 680M was a safe-bet launch and was not a full GK104 either, which is why the 780M beat it so badly.

Basically I am saying that if this lineup is 20nm, then it's a HUGE disappointment, and a disgrace to the flagship name. Why would you do a die shrink + new architecture that doesn't beat the last gen's flagship? When die shrink + arch change came out last time, 1 x new midrange-architecture card (GK104) = 3-way SLI last-gen flagship (GF110). For a GM204 to not even beat GK110 with a die shrink? Much less not beat new arch minus die shrink? It feels like they're wasting potential like crazy. They could probably easily squeeze another 50% out of that card if that's the case... or more. -

Killerinstinct Notebook Evangelist

I think 180W was sufficient for the 780M, since it did run in the P150SM laptops. You just couldn't overclock far with 180 watts.

I'm saying that the GM204 in laptops could be both 20nm and 28nm. Desktop will be 28nm. Also, Cloud did post that the GTX 980 could be 30% faster than the 780 Ti with a TDP around 180W. If that's true on 28nm, then a 980M at 20nm with a TDP of 60-75W and the scores we have been seeing could be true. The whole point of Maxwell, to me, is to create huge headroom for power and TDP. Also, like I stated before, they could have 20nm for thin laptops and 28nm for laptops like the GT72. All we can do is wait till the 17th. And yes, we can most likely overclock the 980M a lot.


EDIT: I think soldered GPUs will be 20nm and MXM will be 28nm for laptops. I think that could be a better way of describing it. -

You're still missing the point... it is stupid of them to artificially limit mobile chips in both clock speeds and core count if it has a die shrink over desktops, just so they don't beat the desktop cards. There IS a limit to overclocking. It's why a single GTX 770 will never beat a 780Ti. The 780Ti has FAR more cores and memory bandwidth etc. You can OC the 770 within an inch of its life, but it simply will not beat a 780Ti because of those reasons.

If they limit the FLAGSHIP MOBILE CARDS at 20nm to being weak as hell the way they currently are COMPARED TO DESKTOP CHIPS, then they have wasted the entire 20nm movement and architecture. Thus it makes absolutely no sense for them to do so. These are similar-to-desktop chips and are sufficiently downclocked/lacking cores from GM204 desktop cards, and thus are 28nm. Even at 75W, for 20nm that is STILL a massive disappointment, because there is nothing better being released. That's the entire point.

I don't know about the P150SM using 180W and 780Ms. I know 680Ms worked with the EM laptops and 180W though. Maybe the SM laptops would downclock if they ran out of power? That's how the stock vBIOS is designed (but not how anyone with a 780M and some sense uses it) -

Killerinstinct Notebook Evangelist

They could always release gm200 @ 20 NM for laptops.

You're missing my point. This is not about maxing out the architecture and getting the maximum power out of the cards.

The important part is getting GM204 into a smaller laptop such as the P35v3 with the same scores as a laptop like the GT72. The only way this is possible is if the P35v3 has a 20nm GM204 to keep the TDP and power consumption low.

But in the gt72 there might be 28nm gm204 since it can handle the higher tdp and power consumption hence why it is slower than the desktop counterpart.

Perf/watt is key here

Also htwingnuts p150sm review:

http://forum.notebookreview.com/sag...wingnut-review-sager-np8268-clevo-p150sm.html

That had an 880M with a 180W PSU

Anyways this is all speculation , we should know facts in 4 days

-

Please somebody tell me why would lower process node alone give better performance on the same architecture, number of modules, cores and same clocks?

Just want to learn something new. -

Lower process means the actual die size is smaller. This means it produces less heat, draws less power, and you can pack more into it. If I made a 20nm Kepler 780Ti and left the rest of the specs EXACTLY the same, it'd perform the same but draw less power and run cooler. That should be about right.

-

I think you are missing the point that D2 Ultima is making. He's saying that the performance improvement for GM204 would be too disappointing IF it has BOTH A PROCESS SHRINK AND A NEW ARCHITECTURE.

In order for it to be 20nm it needs to have bigger performance jumps, and when I say big I mean bigger than the current jump, or else it doesn't make sense.

You are forgetting that a benchmark is different from a stress test.

You can run a benchmark without throttling, but you just don't know the temps; that is different from not throttling during stress tests. You need to know the temps at least to be able to say the P35v3 can cool a 980M like the GT72 can.

running the same benchmark, gt72 could be just in its 70s while p35v3 jumps to 95C

shorter travel distance -

Smaller transistors, much closer together, so they switch faster. Basically like reduced latency.

-

Again. You're looking at the side of the barrel of "WOW WE GET POWER IN THIN FORM FACTORS, WEEE!"

This means NOTHING for us at the high end of the spectrum. It is a waste. If they wanted to go for perf/watt and cram that much into 75W or whatever, they should have called those cards the 960M and 965M instead of the 970M and 980M. If it IS 20nm, then they have PURPOSEFULLY held back the mobile launch in terms of high end cards just so desktops are happy that they aren't beaten by the mobile market.

Further to this, making 28nm and 20nm versions of the same high end mobile cards and selling both in the mobile market cuz larger laptops can "handle" the hotter cards is just boundless stupidity. Why would you make a hotter, larger-power-draining version of a card if a more efficient, cooler version exists already? There is zero reason, at all, ever, for this to happen.

I am not doubting that if 20nm maxwell comes out we will see 20nm maxwell cards in mobile. Hell, mobile'll probably get it first. But if they ARE using 20nm processes and they are using these cards as the flagships for the mobile market, they've entirely wasted the advancements for high end; they could have done far far better cards and put the current cards we're seeing much further down the line. -

First off depending on how you are using performance it doesn't.

It would require less energy to provide the same level of performance, and technically it might have a small difference based on a speed at which the transistors function and actual distance the electricity travels, but that should all be fairly minimal.

However a change in process from say 28nm to 20nm will certainly have other advantages.

First the 20nm will require lower voltage and amperage to power it causing it to have a better performance to wattage ratio.

Second it will take up a smaller portion of the chip meaning either more cores (almost always the case), or an increase in other aspects like L2 cache, or more specialized processing units can be added (often multiple of these at once).

Third, chips actually function more efficiently when they are cooler. The lower power draw, plus (on chips with fewer SMMs active) having the active cores spread more evenly across the die, will normally give a small performance boost. This can also help with overclocking potential.

There are other things that can affect it if you go deeper into the physics but most of them tend to be difficult to explain or just theoretical, and normally have a smaller impact as well.
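To put rough numbers on the voltage point above: switching (dynamic) power in CMOS logic scales approximately with capacitance times voltage squared times clock frequency. The sketch below just applies that textbook relation. The 0.8x capacitance and 0.9x voltage figures are made-up illustration values, not measured 28nm or 20nm data, and leakage power is ignored entirely.

```python
def relative_dynamic_power(cap_scale: float, volt_scale: float, freq_scale: float = 1.0) -> float:
    """Dynamic power relative to a baseline, using the approximation P ~ C * V^2 * f."""
    return cap_scale * volt_scale ** 2 * freq_scale

# Hypothetical shrink: 20% less switched capacitance, 10% lower voltage, same clocks.
# These scale factors are illustrative assumptions, not real process data.
shrunk = relative_dynamic_power(cap_scale=0.8, volt_scale=0.9)
print(f"Same clocks, same core count: {shrunk:.0%} of the original power")
```

Under those assumed factors the same chip at the same clocks would draw roughly two thirds of the power, which is the "same performance, less power" case described earlier in the thread.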

___________________________________________________________________________

So it's not the smaller process making the performance boost; it's the smaller process making more room on the chip and a better performance/watt ratio that is the key. (Of course, then they use that room for more cores to raise the performance.)

Edit: lol, took too long writing. I see multiple people beat me to it with simpler explanations.

Second Edit: Also, many people have the misconception that, for instance, in a 20nm process you would fit 25 (5x5) transistors in a 100nm square. The 20nm or 28nm or 14nm you see is only one of many measurements. For instance, 28nm has a contacted poly pitch of 114nm and a metal pitch of 90nm (amongst many other measurements). 20nm, on the other hand, has a contacted poly pitch of 90nm and a metal pitch of 64nm. So you can't just compare the 20nm and 28nm figures to work out the improvement; it's far more complex. -
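A quick sketch of why the node name alone is misleading, using only the pitch figures quoted in the post above. Treating (contacted poly pitch x metal pitch) as a stand-in for cell area is a rough assumption made here for illustration; real layouts depend on many more design rules.

```python
def area_scale(old_pitches, new_pitches):
    """Relative cell area, approximated as poly pitch * metal pitch (a rough proxy)."""
    old_poly, old_metal = old_pitches
    new_poly, new_metal = new_pitches
    return (new_poly * new_metal) / (old_poly * old_metal)

# Naive estimate from the node names alone: (20 / 28) squared.
naive = (20 / 28) ** 2

# Estimate from the quoted pitches in nm: 28nm = (114, 90), 20nm = (90, 64).
pitch_based = area_scale((114, 90), (90, 64))

print(f"naive area ratio:       {naive:.2f}")
print(f"pitch-based area ratio: {pitch_based:.2f}")
```

The two estimates do not agree (roughly 0.51 versus 0.56 of the original area), which is the point: the marketing number is not a ruler.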

That's essentially what they did to the GTX 860m with one being GK104 and the other a GM107. One has a 45 watt tdp and the other with 75 watt tdp. Both in the 'same' class and with the 'same' performance....or somewhat the same performance. But one is the hotter, larger-power-draining version of a card when there is a more efficient, cooler version that ALREADY exists....the GTX 860m Maxwell chip.

-

While almost as stupid, it was still different. One was Maxwell and the other was Kepler. Yes the GM107 proved to murder a severely cut down GK104 with a gimped memory bandwidth... but that was cross-architecture. I think nVidia probably didn't actually test how good the kepler card did before releasing it or something lol.

-

Thanks. That is what I thought (although still may be wrong). So in this case the argument "it can't be 20nm because performance is lower than Desktop's chips" should not work, right? We don't count watts and temperatures, all we count is 3DMark points.

I am not saying that mobiles chips are 20nm though.

P.S. I still have a small feeling that raw performance does differ with all the same but different node. I have a feeling that I read some article which was saying that lowering node nowadays does not give same performance boost as it was earlier and difference can be zeroed pretty soon (14nm?) but I am not sure about this. -

@ningyo

Nice explanation

A die shrink forces engineers to shift stuff around (internal chip sections, interconnections etc). It's not like reducing a photo to a lower resolution by making it smaller.

There are other factors that influence it too, like leakage current, internal parasitic capacitance, intermodulation between adjacent signal lines, etc. There are a lot of variables in the equation. It would take me 5 of my university's courses to explain everything

@D2Ultima, Killerinstinct

Guys, don't heat things up. It's not worth it. This time around mobile and desktop cards are based on the same core and the same 28nm process. If Nvidia engineers went with 2 different designs it would be a mess, as some chips would be 20nm and others 28nm. It would cause fragmentation, so as I see it, it's improbable (not impossible). Just like Microsoft is doing with Windows 9: same core OS but with some GUI changes based on the platform it's running on (tablet or normal PC). Also, by releasing on 28nm this year and maybe moving to 20nm next, Nvidia can fine-tune its Maxwell architecture better. -

860M Maxwell was soldered, while 860M Kepler was MXM. It was clear they were intended for different market segments -- Maxwell for thin and lights, Kepler for standard >15" laptops.

-

Guys, no need to worry or argue about it. You're hypothesizing about something that's never happened in Nvidia's history and makes zero sense from a business perspective. Desktop and mobile Maxwell will be 28nm at the same time, mark my words.

-

i wish asus would make SLI laptops and make them a bit thinner if maxwell is going to run a lot cooler. this sager laptop is so bulky and loud that i dont even use it as a laptop, just a desktop replacement. hard to relax and get work done

-

If you think that's bulky, you should have seen their old 18.4" models with SLI 280Ms. Or Alienware's M8x & AW18. This thing is light and easily usable considering lol.

-

Guys, I'm attending an ASUS expo right now. Any questions for the ASUS big bosses? I already asked about the new G751/771; they're not sure, but it looks like the date is somewhere in November

Kinda sad as I expected October


-

Tell them to make thinner notebooks.

I'm really excited about having a P34/35 V3 with a 970/980 in it. It needs to hurry up and be released. -

Update: I just talked to the top ASUS boss for Vietnam. The G751/771 is still in the "alpha" stage and isn't going to be released soon. Pretty bad news for me, as I'm ready to click the preorder button.

-

I don't think the guy knows what he's talking about; we've already seen a working G751, so it can't be in the "alpha stage" as he said, eh =(

-

nVidia Maxwell... Speculation since 2013...

-

I'd really like to get a new 13"-15" notebook, preferably thin and light - but not if it's going to be a noisy oven. If 980M tdp is going to be circa 100W, and 970M tdp is going to be circa 75W, then regardless of the GPU performance the notebook is going to need to get rid of a significant amount of heat (unless there's another factor that I'm not appreciating). If manufacturers simply swap an old-gen 100W card for a new gen 100W card and take all the benefit as performance gain, then in my opinion that's missing a trick.

Will be very interesting during Oct/Nov to see what laptops get released and how they get on from that point of view. I've waited a while to upgrade, I can wait a bit longer.... current laptop is 17" Clevo, T7700 CPU and Geforce 8700M and sounds like a vacuum cleaner

-

Killerinstinct Notebook Evangelist

At least it doesn't sound like a jet plane at takeoff

-

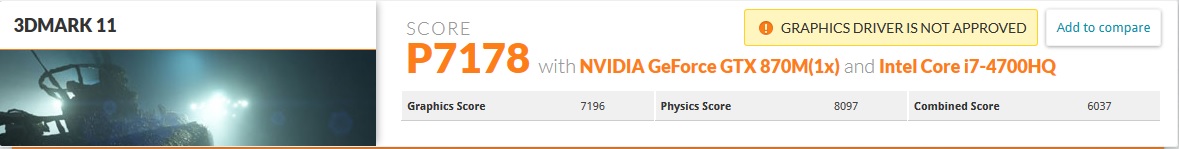

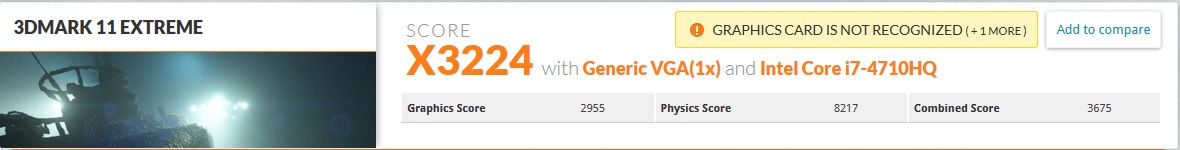

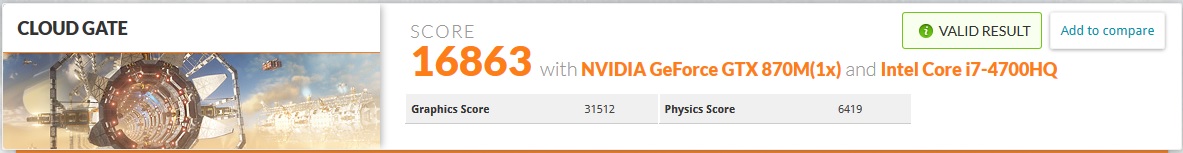

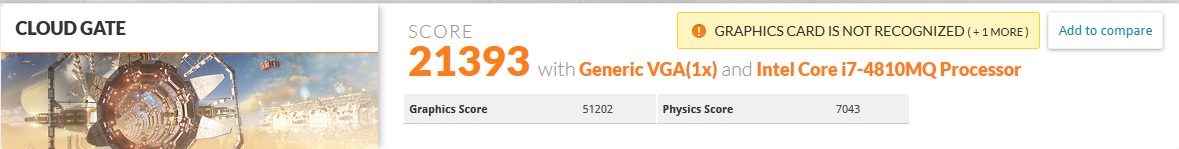

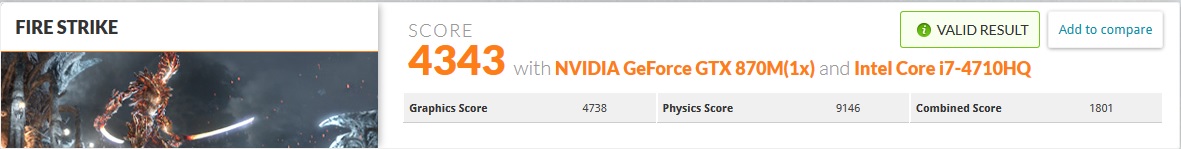

I didnt have the energy to do this yesterday but here they are. A complete synthetic comparison between GTX 870M/880M/970M/980M

Enjoy :thumbsup:

Screenshots for each card (GTX 870M, GTX 880M, GTX 970M, GTX 980M) were posted for these tests:

3DMark11 Entry Preset

3DMark11 Performance Preset

3DMark11 Extreme Preset

3DMark 2013 Cloud Gate

3DMark 2013 Fire Strike

3DMark 2013 Ice Storm

All these benchmarks have been added to the first post in this thread, so if anyone has missed them, you can also find them there.

At last, it is now confirmed that

N16E-GX = GTX 980M

N16E-GT = GTX 970M

Cheers -

so gtx 960m might be a 192 bit part right? and it will beat my gtx 780m...

-

Good stuff. The only thing that really matters out of that is 3DMark 11 X and Fire Strike. Those are 43-56% improvement 980m over 880m. Pretty impressive. The fact that 970m beats the pants off 880m is also great, and probably runs 10C cooler at least and won't throttle (hoping).

No, the 870M is 192-bit; the 960M is likely still 128-bit. -

irfan wikaputra Notebook Consultant

Wooohoooo, I am so lucky guys, just got a buyer for my Clevo P377SM-A for an overvalued price lol. I am ready to buy the upcoming top dog as soon as it releases. Please advise: Clevo or Alienware 18? I've never owned an Alienware 18

-

Yes. "Can you please hire a bios tweaker who can at least be trusted to tell the difference between AND and XOR?".

Seriously, though - ask them why the ram-timing, throttling thresholds and boost policy settings on all their current models are borked.

And ask them why their previous generation laptops were locked to sata 2 when the motherboards were all certified for sata 3. And why it was impossible for Asus to change that via bios update later.

Note that in either case, there's literally no reason of any kind for why Asus would not fix this, other than incompetence.

It certainly doesn't help either that a lot of cooling systems rely on the gpu temp never rising above a fairly low limit, without severely throttling the cpu.

By the way - there is a known issue where the gpu score will be reported wrongly when the cpu throttles in a specific way. The output is completely staccato, but the actual score is higher than it should be. Not saying that's exactly what's going on here. But remember we have one single uncertified score to go on that looks to be within the realm of what's remotely possible at the moment. And that run suffers from some sort of unusually severe throttling problem. -

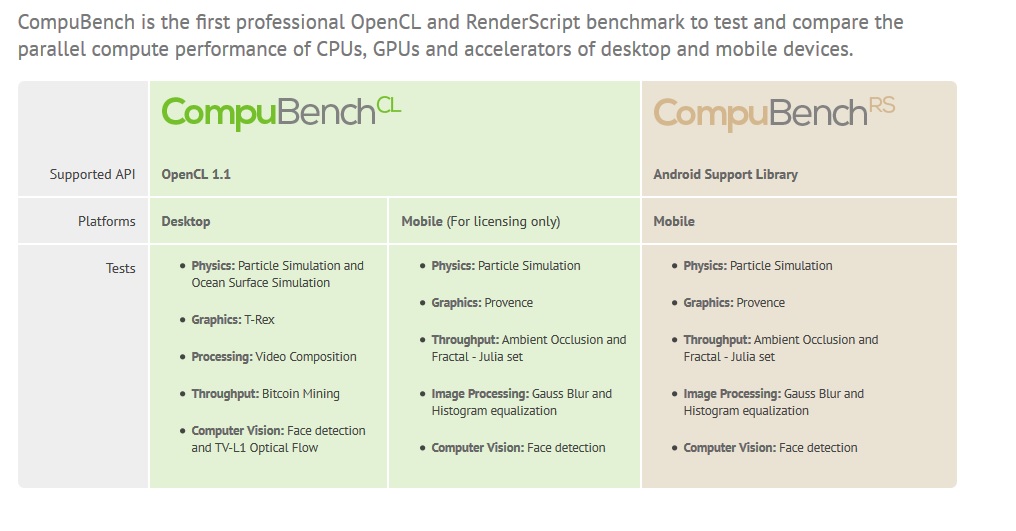

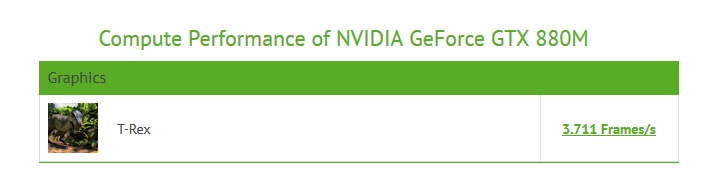

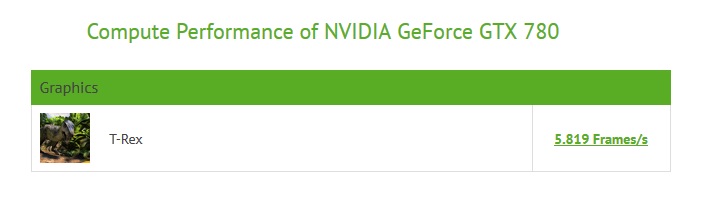

I can confirm that the OpenCL compute performance of mobile GM204 will surpass mobile GK104 by a great deal.

Here is a leak from CompuBench. This is the T-Rex part of the test, which uses OpenCL in a graphics test.

Here is how CompuBench describe their software:

[Screenshots: CompuBench results for GTX 880M, GTX 970M and GTX 780]

That's right. What you basically see here is a 75W GTX 970M beating a 125W GTX 880M by 45% in OpenCL performance

It is only 10% slower in OpenCL than the 250W GTX 780. LOL

Either way, OpenCL or DirectX, the Maxwell cards are dealing deadly blows to the Kepler cards :thumbsup:
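For anyone who wants the perf/watt side of those numbers spelled out, here is a small sketch. It only uses the figures quoted above (45% faster than the 880M, 10% slower than the GTX 780, and 75W/125W/250W TDPs); the TDPs are the thread's working numbers rather than confirmed specs, and TDP is only a rough stand-in for actual power draw.

```python
def perf_per_watt_ratio(relative_perf: float, tdp_a: float, tdp_b: float) -> float:
    """Perf/watt of card A relative to card B, where relative_perf = perf_A / perf_B."""
    return relative_perf * tdp_b / tdp_a

# GTX 970M (assumed 75W) vs GTX 880M (125W): 45% faster in the quoted test.
vs_880m = perf_per_watt_ratio(1.45, tdp_a=75, tdp_b=125)

# GTX 970M (assumed 75W) vs desktop GTX 780 (250W): 10% slower in the quoted test.
vs_780 = perf_per_watt_ratio(0.90, tdp_a=75, tdp_b=250)

print(f"970M vs 880M perf/watt: {vs_880m:.1f}x")
print(f"970M vs 780 perf/watt:  {vs_780:.1f}x")
```

If the TDP figures hold, that works out to roughly 2.4x the perf/watt of the 880M and about 3x that of the desktop 780 in this one OpenCL test.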

Another thing:

The GTX 970M has 1280 cores and 10 SMMs, not 11 SMMs like TechPowerUp posted.

Described here: it has 10 compute units

http://compubench.com/device.jsp?benchmark=compu20&os=Windows&api=cl&D=NVIDIA+N16E-GT&testgroup=info -

The more I'm seeing, the more I'm drooling over these new Maxwell chips.

-

Why is the 880M even being compared? The 780M outperforms it.

-

I updated the post btw. Seems like the GTX 780 score was a little too high. I hope.

It's only 10% between the GTX 970M and the GTX 780. lol -

See, wasn't so hard, was it ;D But that explains the insane synthetic performance increases - which, I might add, people would have been able to tell if the individual tests in 3dmark had been posted.

But this is absolutely promising. Looking forward to see the 25-30w variant benchmarks now. -

And please, nVidia. Keep vRAM to 4GB on the 980M. We can see right through that horrible and expensive marketing ploy. Cut $200 off the price of the card and go with 4GB.

-

I agree with both statements.

I think GTX 980M should probably give around 50% more FPS in games than GTX 880M.

GTX 970M seems like the perfect chip for those who want low heat and massive performance. Although I also think the GTX 980M will run maybe cooler than GTX 680M. Remains to be seen but I am positive

Man, you are lucky. I've had mine for sale for a month now and always get these "can I buy it for a dollar" or "I have an awesome overpriced Apple MacBook with GT 650M. It cost the same as your machine. Obviously you should trade it with me"

I have them all, but too lazy to go fetch them for you. Sorry

The comparison I made should suffice for most people

-

http://forum.notebookreview.com/gam...w-maxwell-cards-incoming-209.html#post9774880

880M is being compared. Pointless comparison, considering it will just throttle and die. The 780M outperforms it. -

No, it doesn't.

I checked the GTX 780M scores and they were lower on all benchmarks. The comparison is valid and reflects the 880M synthetic performance.

Well, 4GB or 8GB, they will probably charge an arm and a leg for it anyway. Just wait till they release the marketing slides when Maxwell is announced, and it will sell like hotcakes

But I agree, we dont need 8GB. -

Yeah. Hoping for a machine similar to my Clevo 13" with 970m. Would be awesome.

LOL, yeah, it's pretty sad. I get that too after I do my reviews and go to sell. It's a $3500 SLI laptop, less than a month old, and people offer like $2000. I just respond "ok for $2000 please consider this single 870m laptop" For some reason $500-600 off a laptop just isn't enough I guess.

-

Food for thought.

GTX 660M:

384 cores. 50W TDP

GTX 680M:

1344 cores. <100W TDP

3.5x more cores than GTX 660M

GTX 680M based on GTX 670, a 170W GPU

GTX 860M:

640 cores. 45W TDP

GTX 980M

1664 cores. ? TDP

2.6x more cores than GTX 860M.

GTX 980M based on GTX 970, a 150W GPU

I think we might be pleasantly surprised. -
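The core-count ratios in that comparison are easy to check. A minimal sketch, using only the core counts quoted in the post; the 1664-core figure for the GTX 980M is the number floated in this thread, not a confirmed spec.

```python
# Core counts as quoted in the post above.
cores = {
    "GTX 660M": 384,
    "GTX 680M": 1344,
    "GTX 860M": 640,
    "GTX 980M": 1664,  # figure floated in the thread, unconfirmed at the time
}

kepler_ratio = cores["GTX 680M"] / cores["GTX 660M"]
maxwell_ratio = cores["GTX 980M"] / cores["GTX 860M"]

print(f"680M has {kepler_ratio:.1f}x the cores of the 660M")
print(f"980M has {maxwell_ratio:.1f}x the cores of the 860M")
```

So the Kepler flagship scaled up further from its 50W sibling (3.5x) than the rumored 980M does from the 45W 860M (2.6x), which is the basis for hoping the 980M TDP lands comfortably under 100W.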

Ain't technology wonderful!?

-

That's because the 880M is clocked higher. If you raise the clock speed of the 780M to match it, it will perform better.

-

memory bandwidth doesn't mean a HUGE amount in terms of game performance. Card-to-card it probably will, but across core counts/architectures/etc? Consider it a null point for the most part. It's VERY rare you'll run into a game where a stronger card will be bottlenecked by a bit less memory bandwidth.

Also, please note, memory bus width is only half the story. Memory speed MUST be looked at in tandem to find memory bandwidth. A 192-bit, 5000MHz mem clock card (120GB/s) has more memory bandwidth than a 256-bit, 3600MHz mem clock card (115.2GB/s). Examples used were GTX 870M stock versus GTX 680M stock, respectively. -
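The bandwidth arithmetic above can be reproduced in a few lines. This sketch assumes the quoted "mem clock" numbers are effective transfer rates (millions of transfers per second), which is how the 120GB/s and 115.2GB/s figures come out.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, effective_clock_mhz: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_clock_mhz * 1e6 / 1e9

# Stock figures quoted in the post above.
gtx_870m = memory_bandwidth_gb_s(192, 5000)
gtx_680m = memory_bandwidth_gb_s(256, 3600)

print(f"GTX 870M: {gtx_870m:.1f} GB/s")
print(f"GTX 680M: {gtx_680m:.1f} GB/s")
```

The narrower 192-bit bus still comes out ahead because its memory runs enough faster to more than cover the missing 64 bits.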

When I bought my D900F in 2009, people at school were offering me $1000 TTD (read: $155 USD) for the machine. They all thought it was some super super old, heavy laptop from like 6 years before =D. Based on the reactions by that and how I was able to leave a $2808 USD laptop in the open, unattended for extended periods of time without anyone stealing it (don't worry I had someone keeping an eye on it to see if anyone would try taking it), I decided that Clevo would be my next machine.

Hey, who am I to complain if potential thieves decide that the strongest laptop in my entire country is a really big business laptop? :laugh: It's the primary reason I did not consider originally going for an alienware this time around. And true to fact, most people just assume it's really big and old, and don't bother with even giving it another thought XD. -

The MSI GS60 gets the 970M. The thin Gigabyte P35v3 gets both the 970M and the GTX 980M, and that machine could take top x7xM GPUs earlier.

I think GTX 970M should absolutely be featured in thin and small notebooks.

Like I posted a few pages back,

GTX 970: 1664 cores. 150W TDP

GTX 970M: 1280 cores with lower clock and reduced voltage. Should run nice and cool I think.

I can understand that people try to make a move and get a computer with big discounts, but some people clearly cross the line and are heading to rude town

Almost 50% off a pretty much new notebook is stretching it bigtime yes.

Good points there D2.

Nvidia usually designs cards with the memory bus they're meant to have. Sometimes they do scummy things, but mostly the cards have the bandwidth they need. Luckily, you can overclock the memory to compensate a little.

I am very interested to see what the L2 cache on the GM204 mobile cards will be like. Should help

Maybe

I'm still not convinced that all 880M cards, across all brands, have the throttling issue. Many reviews on Notebookcheck report no issues with theirs. -

I already checked and notebookcheck has the same scores I do with the stock vbios, that is to say, throttled. Modded vbios my scores are all over theirs by a decent margin. Again, people aren't looking at the core speed so they aren't seeing what's happening.


Brace yourself: NEW MAXWELL CARDS INCOMING!

Discussion in 'Gaming (Software and Graphics Cards)' started by Cloudfire, Jul 14, 2014.