165W! 256-bit interface though. What the heck?

-

More efficient with bandwidth compared to Kepler I suppose. Good news for us mobile users.

But I'm thrilled about the TDP.

GTX 980:

2048 cores @1216MHz (sick high clock)

165W

GTX 980M:

1664 cores @1085MHz

80-90W TDP??

GTX 970M:

1280 cores @920MHz

60-75W??

Kepler:

GTX 670

1344 cores @920MHz

170W TDP

GTX 680M

1344 cores @720MHz

100W

Not only do we get a lower TDP for the desktop card vs Kepler, but also a lot more cores!

Ningyo likes this. -
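One quick way to put the numbers above side by side is cores × clock per watt of TDP. This is only a crude proxy assumed for illustration: it ignores architecture (a Maxwell core isn't equal to a Kepler core), uses the listed clocks rather than real boost behaviour, and takes the upper end of the guessed 980M/970M TDP ranges.

```python
# Crude "core-MHz per watt" comparison of the specs listed above.
# Assumption: mobile TDPs are the poster's guesses; the upper end of each range is used.
CARDS = {
    # name: (CUDA cores, clock in MHz, TDP in W)
    "GTX 980": (2048, 1216, 165),
    "GTX 980M": (1664, 1085, 90),
    "GTX 970M": (1280, 920, 75),
    "GTX 670": (1344, 920, 170),
    "GTX 680M": (1344, 720, 100),
}


def core_mhz_per_watt(cores: int, mhz: int, watts: int) -> float:
    """Cores times clock, divided by TDP. Ignores per-core architectural differences."""
    return cores * mhz / watts


if __name__ == "__main__":
    for name, (cores, mhz, watts) in CARDS.items():
        print(f"{name:9s} {core_mhz_per_watt(cores, mhz, watts):8.0f} core-MHz/W")
```

On these figures the GTX 980 lands at roughly twice the GTX 670 (about 15,100 vs 7,300 core-MHz/W), and the 980M at roughly twice the 680M (about 20,100 vs 9,700), if the 90W guess holds.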

Yeah I hear ya. Maybe holding off on 384-bit for next year so they have an upgrade...

Wonder what the release date will be for mobile? Sept 19 for desktop... any definitive information on that yet? -

King of Interns Simply a laptop enthusiast

Pretty sick indeed!

-

Where is everyone seeing the p35g v3?

Anyone have a link to this?

What card do you think will fit in the P34g v3?

Will the Razer Blade get the 970M?

Ahhhhhjjjjj! -

So basically YES, that photo was a photoshop and NO it was not fake

Cloudfire likes this. -

King of Interns Simply a laptop enthusiast

Any idea of pricing on the 970M/980M, Cloud?

-

I guess I will wait for the full GM204 mobile chip since GTA V is coming to PC in January 2015. No rush for me to get the 980M now.

I will tell myself that I can wait, I can wait, I can wait........ -

True. Last week, a mobile network provider in China uploaded photos of the iPhone 6 and part of its specifications a few days before Apple officially announced the phone.

Cloudfire likes this. -

Killerinstinct Notebook Evangelist

-

I have no idea. I'm hoping they will present Maxwell for both mobile and desktop at the Sept 19th event.

But if they don't, the mobile release should be very close anyway.

Here is P35v3 with GTX 980M

Here is Gigabyte P37 with GTX 970M

Here is Gigabyte P34v3 with GTX 970M

So we are at least looking at 3 new Gigabyte notebooks with the 970M/980M.

I have no idea about Razer and their plans. I think the TDP should be low enough for it to even feature the GTX 980M, considering it uses a GTX 870M, which runs hotter.

Not sure. If I should guess, same price as current 880M/870M cards, maybe priced a little above.

Good luck with that once the 980M users chime in

Full GM204 is probably not here until next summer. -

I think Maxwell is going to bring mobile graphics and desktop graphics closer than ever before, in regards to performance.

I'm very impressed and very happy to see this. The TDP is great. Now you SLI guys don't need to mod your PSUs. Let's hope Broadwell is just as efficient.

Cloudfire and lonelywolf90 like this. -

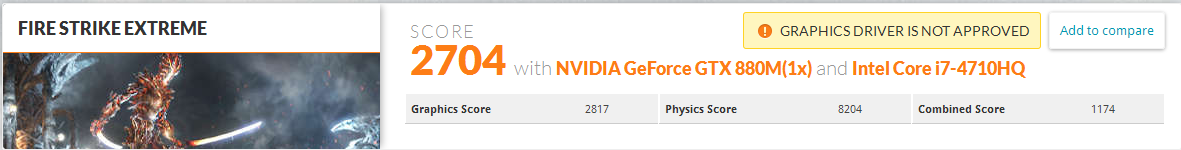

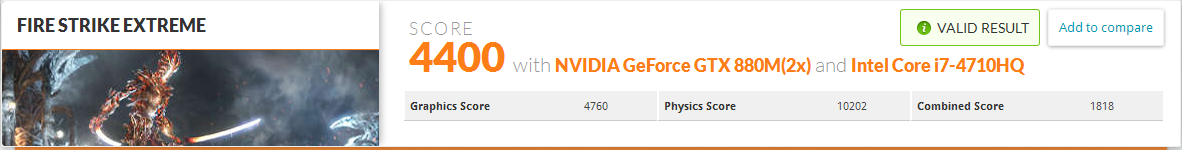

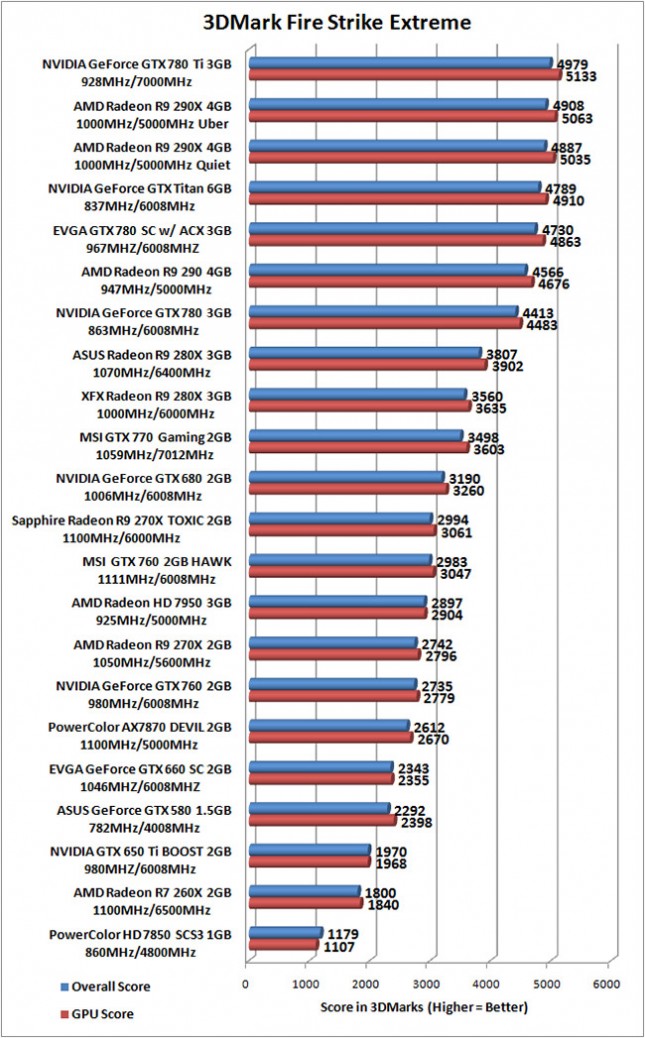

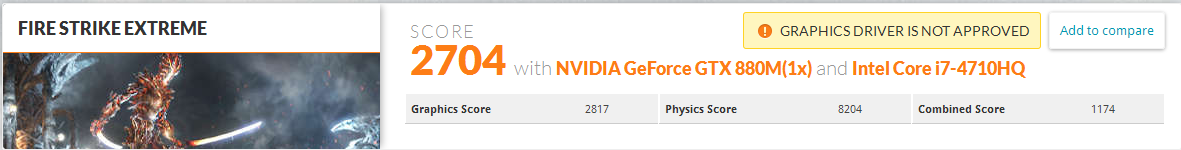

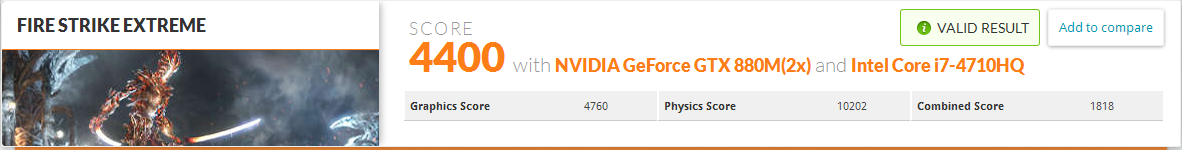

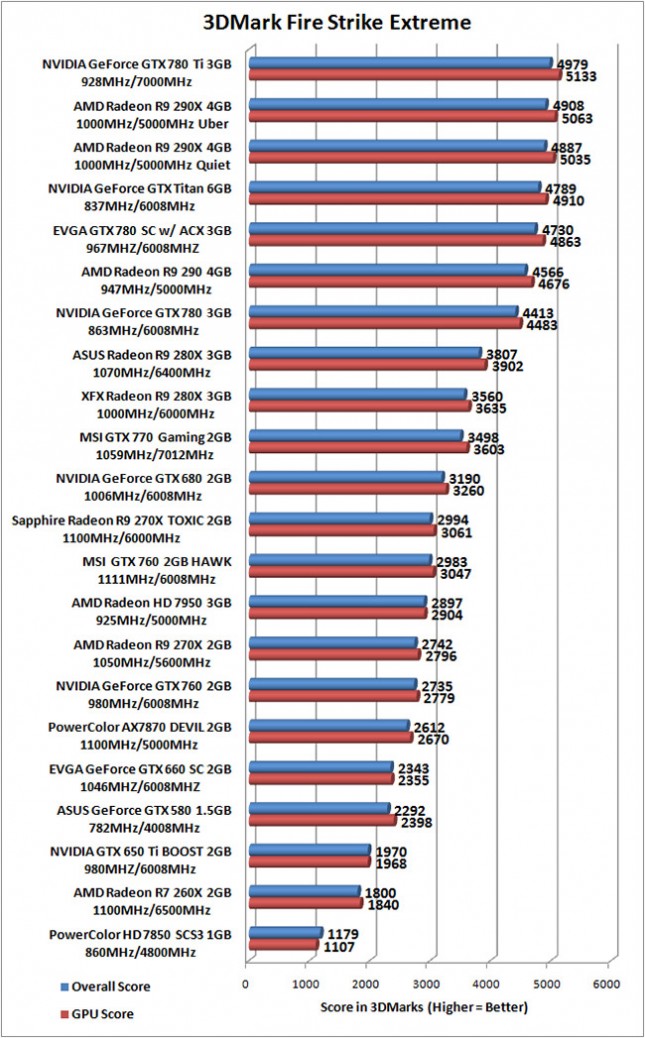

Firestrike Extreme benchmarks.

1440P resolution, 1.5GB memory usage, lots of shadows and AA. Should really show what these cards are capable of.

As always, pay attention to the Graphic score.

First up,

GTX 880M

GTX 880M SLI

GTX 980M

Comparison with current desktop cards. Yup, that's right: the GTX 980M is within 4% of a GTX 780.

deadsmiley, vanfanel, Tonrac and 1 other person like this. -

If the 980 really is priced at $599 then forget it, I'm not paying $600 for a $400 card. Now I'm getting more curious about the 970.

-

Hi everybody, Maxwell enthusiasts!

So we are saying, next year, we will have full chip plus 20nm?

Don't you think, guys, that would be another huge improvement, again?

Excessive gains for two consecutive years, in my opinion ^^U -

That's what we think. It won't be a huge jump over 28nm. It will be approximately another 20%-30% at the most. Think of the GTX 780 and 780Ti to reference the "gap" between Maxwell 28nm and 20nm, assuming 20nm will even exist for mobile. We haven't even had any legit leaks of the 980M performance, yet. So, we're still waiting to confirm what it is in fact supporting.

-

Robbo99999 Notebook Prophet

If they do ever do Maxwell in 20nm I think you can expect a bigger jump than just 20-30% when comparing to 28nm. You can fit nearly twice the number of transistors per mm squared by shrinking down from 28nm to 20nm - so I think the difference would be more like 80% performance improvement. -
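The "nearly twice" figure comes from ideal area scaling: if every feature shrinks in both directions by 20/28, transistors per mm² go up by (28/20)². A minimal sketch, assuming perfect scaling of every dimension (which, as others point out further down the thread, real processes don't achieve):

```python
def ideal_density_gain(old_nm: float, new_nm: float) -> float:
    """Transistors-per-area ratio (new/old) if both die dimensions shrink by new_nm/old_nm.

    Assumes ideal scaling; real node names don't map directly onto feature sizes.
    """
    return (old_nm / new_nm) ** 2


if __name__ == "__main__":
    print(f"28nm -> 20nm: {ideal_density_gain(28, 20):.2f}x")  # prints 1.96x
    print(f"40nm -> 28nm: {ideal_density_gain(40, 28):.2f}x")
```

Even with that transistor budget, performance wouldn't automatically scale by the same factor, since clocks and power also have to cooperate.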

Well, the MSI GT72 review is up on Notebookcheck. It's just the 880M Kepler version, not the soon-to-exist 980M Maxwell one, but I doubt the rest of it will change.

Looks on par with the Asus G750.

Similar noise and temperatures.

Better sound and mic ports.

Slightly worse display.

48mm thick (1.9 inches).

Seems like a large number of small improvements over the MSI GT70, with no real steps backward.

Pretty much out of the running for me, though; the mediocre TN display just has too poor color and vertical viewing angles for me. If that isn't a deal breaker for you, it looks pretty nice otherwise.

Cloudfire likes this. -

There will be a 970m in there!?!

Well that changes things - I wasn't expecting anything higher than a 965m (if it exists).

I wonder how long until we'll be able to buy. -

If this is true and the 980M hits your lower estimate of 80W, I really might consider an Aorus X7 with 2x 980M SLI if they drop them in it. It would still run a bit hot given its thin form factor, but the portability would be nice. Even with these really efficient GPUs, ultra-thin gaming just won't be perfect until we get a Haswell replacement; it's really a CPU limit more than a GPU one, I think. (Personally, I suspect a 90W 980M, your upper estimate, is more likely, though.)

-

Incredible. How big is the performance difference between the 980M and the 970M?

If it's only a little I'll stick with the 970m because I want the small form factor. But if the 980 blows the 970 out of the water I'll go with that.

Will there be a 980m in a decent form factor? -

Also the p34g v3 link is dead.

Also, do we have pictures of these laptops or just these numbers?

-

Nvidia is making great progress with Maxwell on 28nm.

I don't think we will get Maxwell on 20nm. That is for Pascal, using "16nm FinFET", which is really 20nm.

Yeah the cooling on GT72 is really really great. Great progress over the GT70 for sure.

The display they use isn't IPS-worthy for sure, but it isn't as bad as some reviews say it is. It's an average display, I think.

Yup, GTX 970M. About 20% ish faster than a GTX 880M

Remains to be seen but I think GTX 980M should be around 30% faster than a GTX 970M.
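If both guesses hold, the gains multiply rather than add: 20% on top of the 880M, then 30% on top of that. A small sketch (the 20% and 30% are just the estimates above, not measurements):

```python
from math import prod


def chained_gain_pct(*step_gains_pct: float) -> float:
    """Total % gain from successive 'X% faster than the previous card' steps."""
    return (prod(1 + g / 100 for g in step_gains_pct) - 1) * 100


if __name__ == "__main__":
    # 880M -> 970M (+20%), then 970M -> 980M (+30%)
    print(f"980M vs 880M: +{chained_gain_pct(20, 30):.0f}%")  # prints +56%
```

That works out to roughly +56% for the 980M over the 880M, under those two estimates.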

Yeah, I think the GTX 980M is somewhere around 80-90W. Official specs will of course say 100W (they always brand high-end cards with that), but I think we will see a lot of surprised and happy owners commenting on how cool they run.

Although GTX 980M SLI is probably feasible in an Aorus, I think they will go with GTX 970M SLI. Still a lot of heat for a 17" notebook.

Will be for sure a sick upgrade over current Aorus

Sorry, the link was "ttp://www.3dmark.com/xxxxx" instead of "http://www.3dmark.com/xxxx". Fixed it now.

Just numbers so far. Gigabyte have been testing their notebooks extensively and putting up results on 3dmark.com -

Actually, the 28nm and 20nm numbers are very misleading. That's just 1 of like 20 important measurements. It's sort of like labeling laptops as 17.3" or 15.6" or 13.3": it is a useful measurement, but don't take it to give you all the information you need.

I am not an expert but based on the other numbers I have seen:

20nm will likely have 50% to 75% more transistors possible on a same size die (not 100% more)

The heat per transistor will be lower, but the heat per mm^2 will be higher (assuming area is fully utilized)

We will have to wait and see, though; there are just so many factors, not to mention it is entirely possible they will end up skipping 20nm and going straight to 16nm Pascal. -
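The last two points can be combined: heat per mm² scales with (transistors per mm²) × (power per transistor). A rough sketch where the 50%-75% density figures come from the post, and the per-transistor power ratio is a made-up placeholder (an assumption, not a measured value):

```python
def power_density_ratio(density_ratio: float, power_per_transistor_ratio: float) -> float:
    """New/old heat per mm^2, assuming the whole die area is used."""
    return density_ratio * power_per_transistor_ratio


def break_even_power_ratio(density_ratio: float) -> float:
    """Per-transistor power ratio below which heat per mm^2 does not rise."""
    return 1 / density_ratio


if __name__ == "__main__":
    ASSUMED_POWER_RATIO = 0.7  # hypothetical: 30% less power per transistor on the new node
    for density in (1.5, 1.75):  # 50% to 75% more transistors per area (from the post)
        print(
            f"density x{density}: heat/mm^2 x{power_density_ratio(density, ASSUMED_POWER_RATIO):.2f}, "
            f"break-even power ratio {break_even_power_ratio(density):.2f}"
        )
```

So at +50% density, unless each transistor uses more than a third less power, heat per mm² goes up.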

I wasn't aware of that. If this is the case, then yeah, it may be true that we will see a much greater performance increase. However, it seems unlikely to me that they will release a 980M with a 50%-60% improvement over the 780M/880M, then 6-8 months later release a "990M" with an 80% improvement over the 980M, then 6-8 months after that release Pascal, which should double performance yet again. That seems very unlikely to me. That's nearly a 300% performance increase in two years ([60 + 80] x 2).

MorejaSparda likes this.
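For comparison, if each step is measured against the card before it, the gains compound instead of adding. A sketch using the post's own figures (about 60%, then 80%, then Pascal doubling):

```python
from math import prod


def additive_estimate_pct(first: float, second: float) -> float:
    """The post's back-of-the-envelope figure: ([first + second] x 2)."""
    return (first + second) * 2


def compounded_gain_pct(*step_gains_pct: float) -> float:
    """Total % gain when each step is relative to the previous generation."""
    return (prod(1 + g / 100 for g in step_gains_pct) - 1) * 100


if __name__ == "__main__":
    print(f"additive:   +{additive_estimate_pct(60, 80):.0f}%")
    # 880M -> 980M, 980M -> hypothetical "990M", then Pascal doubling
    print(f"compounded: +{compounded_gain_pct(60, 80, 100):.0f}%")
```

Compounded, that's about 5.76x (+476%), which only makes the scenario look less likely.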

-

This wait is unbearable - I'm already stretched beyond when I intended to buy a P34G v2 - back in August.

I do hope there are no delays and they can push these laptops to retailers in a matter of weeks, otherwise I may have to just go for the v2 with 860m.

-

Yeah, I said mediocre, which I equate to average. It doesn't look horrible, maybe 5% worse than the Asus G750 display, which was also an average TN display. That said, I want better than that: I have seen some above-average TN displays I would be happy with, and there are many newer technologies available (mainly in 15.6" or smaller laptops) that have better displays.

I would prefer to get a 17.3" or larger laptop, but so far, with very few exceptions (HP DreamColor as an extreme example), they tend to have at most an average-quality display. If that's all I can get, I will probably end up getting a far more portable 15.6" with a VERY good display instead. -

Very good benchmarks. I have been following this. I have a week's trip until the 2nd of Oct and was praying that somehow notebooks would launch with them.

Yeah, well, not happening. Not sure what to do. Should I wait and order internationally later on in Oct/Nov, or grab what I can get while I am in the US? Also, I think even if I spend now, they will be getting price cuts when the 900 series launches, so it would be a waste to purchase now.

-

The GTX 980M is about 800 points above what my 880M did on its best run in Firestrike Extreme.

NVIDIA GeForce GTX 880M video card benchmark result - Intel Core i7-4810MQ Processor, Notebook P17SM-A -

Robbo99999 Notebook Prophet

It does seem "unlikely", I agree; too good to be true. Mathematically it's nearly twice the number of transistors per mm squared, but from what Ningyo is saying there might be other limitations affecting possible transistor density that I'm not aware of. -

Yeah, the CPU in the benchmarks Cloud is presenting is crap for benchmarking (no offense, Cloud). It makes Kepler look really bad.

Well, I mean, according to Moore's Law, and assuming technology follows this law, it should be theoretically possible to achieve at least 100% in less than two years. We will have to wait and see. I hope you are right, and I hope 20nm actually exists. But I can see NVIDIA & AMD just re-branding their stuff for 2015 to make some extra money for the lost time and having to skip Volta (because of the delay with Intel), ultimately giving us another ~20% for mid-2015.

I'd rather see Pascal double Maxwell's performance, than have two variants of Maxwell and sacrifice Pascal's impact to the gaming world by introducing small processes sooner. -

Not too shabby that a stock GTX 980M is beating an overclocked GTX 880M by 24%. But it's nice to see that a GTX 880M can do over 1200MHz.

Look at the Graphic Score!

deadsmiley and J.Dre like this. -
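The two figures quoted here (about 800 points ahead, 24% faster) also pin down roughly what scores are being compared. A back-of-the-envelope sketch; the screenshots aren't reproduced here, so these are derived estimates rather than the actual results, and "about 800" makes them approximate:

```python
from typing import Tuple


def implied_scores(gap_points: float, pct_faster: float) -> Tuple[float, float]:
    """Solve for (slower, faster) given 'faster is gap_points and pct_faster% above slower'."""
    slower = gap_points / (pct_faster / 100)
    return slower, slower + gap_points


if __name__ == "__main__":
    oc_880m, stock_980m = implied_scores(800, 24)
    print(f"overclocked 880M ~{oc_880m:.0f}, stock 980M ~{stock_980m:.0f}")
```

That puts the overclocked 880M run somewhere around 3,300 and the stock 980M around 4,100.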

-

Exactly this. As chips get smaller, the heat is only going to get worse, not better, unless significant increases in power efficiency are realized via improved architectures. -

J.Dre said: "Aff, yeah, I keep forgetting that little tiny sucker. I always look straight at the big orange text."

Can't blame you. The Graphic Score is always the important one, because there are just too many different notebooks with different CPUs, which makes the total scores all over the place. As long as the CPU doesn't bottleneck the GPUs, Graphic Score = GPU performance.

Robbo99999 likes this.

-

The P34v3 would be excellent with the 980M if there are no throttling issues, though I'd rather customize a barebones version at MySN: their XMG C504, which was actually the laptop I originally wanted (it's based on the P34). If they can throw a 980M in the XMG and it runs fine, then I will be one of their first customers.

-

As these processes get smaller and smaller, what happens to reliability and overclocking potential? If they're packing nearly twice as many transistors onto a smaller die, won't binning, and overclocking the chips that do make it to retail, be way more difficult?

Again, I'm pretty much a noob when it comes to the numbers and technology of these GPUs. -

So if the P34G v3 will have a GTX 970M, does that mean there will be a soldered version of the 970M along with an MXM version? I'm not even sure if there is a soldered version of the 870M or not; that's why I'm asking.

-

Robbo99999 Notebook Prophet

J.Dre said: "Well, I mean, according to Moore's Law, and assuming technology follows this law, it should be theoretically possible to achieve at least 200% in less than two years."

(Although Moore's Law is doubling every 2 years, which is a 100% increase, not a 200% increase; a 200% increase is tripling.) -
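The correction in numbers: a growth factor f is an (f - 1) × 100% increase, so doubling is +100% and tripling is +200%. A small sketch, taking "doubling every 2 years" at face value (an idealization, not a law of physics):

```python
def pct_increase(factor: float) -> float:
    """Convert a growth factor (2.0 = doubling) into a percent increase."""
    return (factor - 1) * 100


def moore_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth factor after `years` if the count doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    print(pct_increase(moore_factor(2)))  # 100.0 (doubling)
    print(pct_increase(3))                # 200 (tripling)
```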

Ha, yeah, you're right. What was I thinking? I'm confused.

Well, that just makes the idea of an almost 300% increase in performance (referring to previous posts) even more unlikely. -

It's already happened over on the CPU side. Look at Sandy Bridge, then compare it to Haswell.

ericc191 likes this. -

This is true. I remember my 2600K running cool and overclocking like a BOSS.

-

I think this thread is in Orgy status now. I think we should start doing sexual things to increase creativity.

Curunen, Tonrac and Firebat246 like this. -

Going down to 20nm will not increase max core count in GM204. It will be just smaller chip with somewhat better performance.

I guess Moore's Law will soon be fighting a battle not with physics, as expected, but with Nvidia's greed. -

The fact that people are willing to pay for the product (even at subjectively elevated prices) simply means that the market will bear that price.

Simply put: if a person does not like the price, they won't pay it. Nobody is being forced to buy Nvidia's products.

Mr. Fox likes this. -

The FIVR didn't help Haswell's heat. I suspect that Skylake will run much cooler now that Intel is abandoning FIVR after Broadwell. It was always a dumb idea from a heat perspective.

Mr. Fox likes this. -

irfan wikaputra Notebook Consultant

I have a very strong feeling that Nvidia is playing their game slower than with last-gen Kepler.

A smaller process means they can put more transistors in the same die size, hence they can increase the number of shaders.

Just remember that the old Fermi (40nm) could squeeze 512 shaders into GF110, while Kepler (28nm) can squeeze 2880 into GK110.

FYI, one SM of Fermi = 48 CUDA cores (referring to the smallest Fermi card, the GT 415M), while one SMX of Kepler is 192 CUDA cores.

So, if we do the math, GTX 580 (512 shaders) compared to GTX 780 Ti (2880 shaders):

512/48 = 10.67

2880/192 = 15

10.67 : 15 = ~0.7

28 nm : 40 nm = 0.7

Now, who's saying that a smaller manufacturing process (from 40nm down to 28nm) won't increase the number of SMXs they can squeeze in?

Now, talking about Maxwell: the way they did the magic was by optimizing 128 shaders into one SMM that delivers about 90% of the performance of a 192-shader Kepler SMX. So let's say they have the same 28nm process and the same die size.

I am going to compare the ratio of the GTX 660M to the GTX 860M:

GTX 660M : GTX 860M

2 SMX : 5 SMM

GTX 680M : GTX 980M

7 SMX : (5/2) x 7 SMM

= 7 : 17.5

GTX 980M supposed shaders = (17 or 18) x 128 = 2176 or 2304 shaders

This gives me a very strong feeling that the next top dog after the 980M might not be the full-fledged GM204, but rather the one after that.

Heck, I even doubt the GTX 980 is the full-fledged GM204 anyway.

But yeah, please don't shoot me with too many bullets, whether it's correct or wrong.

THIS IS JUST MY SPECULATION AND OPINIONS -
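The arithmetic above, reproduced as a sketch. The 48-cores-per-SM Fermi figure is the poster's (it matches GF108 in the GT 415M; GF110's SMs have 32 cores each, i.e. 16 × 32 = 512), so it is left as a parameter. The Maxwell extrapolation assumes SM count scales exactly like the 660M to 860M step, which is speculation.

```python
import math

FERMI_CORES_PER_SM = 48       # poster's figure (GF108); GF110 uses 32 cores per SM
KEPLER_CORES_PER_SMX = 192
MAXWELL_CORES_PER_SMM = 128


def unit_ratio(fermi_cores: int = 512, kepler_cores: int = 2880,
               fermi_per_sm: int = FERMI_CORES_PER_SM) -> float:
    """Fermi SM count divided by Kepler SMX count for the two flagship chips."""
    return (fermi_cores / fermi_per_sm) / (kepler_cores / KEPLER_CORES_PER_SMX)


def extrapolate_units(kepler_small: int, maxwell_small: int, kepler_big: int) -> float:
    """Scale the big Kepler chip's SMX count by the small-chip Kepler -> Maxwell ratio."""
    return kepler_big * maxwell_small / kepler_small


if __name__ == "__main__":
    print(f"SM ratio {unit_ratio():.2f} vs node ratio {28 / 40:.2f}")
    est = extrapolate_units(2, 5, 7)  # GTX 660M (2 SMX), GTX 860M (5 SMM), GTX 680M (7 SMX)
    shaders = [n * MAXWELL_CORES_PER_SMM for n in (math.floor(est), math.ceil(est))]
    print(est, shaders)
```

With 17 or 18 SMMs this gives 2176 or 2304 shaders; 2240 would correspond to exactly 17.5 SMMs.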

Oh, you are wrong. I was not talking about prices; I was talking about their roadmap. People can't stop buying to force Nvidia to give better products now. They have already waited too long. -

Of course people can stop buying. It is a choice.

Brace yourself: NEW MAXWELL CARDS INCOMING!

Discussion in 'Gaming (Software and Graphics Cards)' started by Cloudfire, Jul 14, 2014.