Oh I do think GPU Boost 2.0 will get in the way for serious overclockers (those who did 1000MHz+ on the GPU with the 680M). For the rest it will be fine, but there might be a little more tweaking involved, since the system is always trying to maximize the clocks whenever it's below a temperature threshold.

I'm interested to see how it will play out this time.

-

-

you can actually pick this machine up for far less than you think... https://www.google.com/search?q=inf...16,d.cGE&fp=44bb4c5010ba8026&biw=1745&bih=868

I'll buy it just to bench it lololol

-

Brilliant! Give this man a prize! And that is why I'm waiting.

The improvement will not be noticeable from a 680m to 780m at stock voltage with overclock. Meaker just showed why for the most part and explained better than I ever could. 680m has a larger margin for overclocking the core than the 780m likely will, keeping peak clocks within 5% of each other. With 680m vRAM able to run at about 1200MHz as well, and even if 780m GDDR5 can run at 1400MHz, that won't make up for the lack of GPU increase. Shaders should offer the biggest improvement.

All I was saying is that it's a hard justification to go from 680m to 780m since actual FPS will increase possibly 20% at best for what will likely be a $500 net cost (sell 680m, buy 780m). It may be enough justification for you or others, and that's your prerogative. But I wouldn't recommend an upgrade from 680m to 780m to anyone for that cost.

All that said, it is looking like a fine improvement over the stock 680m. -

That would be really awesome

You'd better act though, looks like many people have tried to make him an offer.

It's a Clevo GPU btw. -

It's dropped in price now!

US $1,299.95 -

As much as I would like to bench the GPU beforehand, such a price is a bit... prohibitive haha. I shall patiently wait for the actual release

-

Won't the fully released card be very expensive anyway?

-

Eurocom charges around $880 for it. That's for the final retail version, not a QS like the ebay guy is selling. But I guess they are pretty alike anyway. It's not like it's an engineering sample.

-

Of course it will, but this GPU is selling almost at the cost of a barebone with 680m gpu, cpu, etc etc. The final laptop with the GPU included should cost almost half of what the asking price is.

Buying it/upgrading it is most of the time more expensive than machines designed around it and sold as is. -

Can anyone purchase from them? Or do you need to own one of their machines?

-

QS cards are a bit dangerous, watch out. I had one die in my hands 13 months ago, the day after I received it... a big bummer when it happens...

-

"Getting in the way" is an understatement. It doesn't allow you to run the card at the clocks you want it to run. I.e. kills overclocking.

In my opinion this explanation clearly misrepresents the boost function (1.0 and 2.0). Let me put it this way: the system is always trying to minimize the clock when it is above a certain power threshold.

Doesn't sound that cool anymore, and yes... it isn't.

One has to be blind to see this feature as automatic overclocking. You're much closer to the truth when you look at it as a sophisticated underclocking / throttling mechanism.

Disabling boost will give you more performance, not only with extreme overclocks. It's crazy to see how far boost 2.0 clocks down sometimes, resulting in a severe performance drop.

While a more variable core clock certainly can help to keep temps down in a mobile system, it is nothing you want for high-end hardware. The chip will get to the same temperature when running a certain clock with boost 2.0 just as when it runs that clock without the boost thing.

Keeping temps down by lowering clock is one thing and one thing only - throttling!!

I really can't see why this boost stuff gets hyped so much, it cripples the card's performance. OEMs should really start implementing cooling solutions which are adequate for the used hardware, with enough headroom so that higher ambient temps are okay as well.

A card with boost will perform a lot different when the ambient temperature changes, e.g. summer vs. winter. That's the wrong approach, the card should perform the same all year, provided the room temperature is within a reasonable limit.

Some of you certainly remember the throttling of the 580m in the AW systems... happened at about 72°C if I remember correctly. Nasty story... but the point is that boost 2.0 is essentially the same, just with a new livery.

Let's say the "boost" clock is 850MHz and default is 750MHz - fact is the system is always throttling when it is not running at 850MHz, running the card at the actually desired speed and calling it "boost" is clever, but it's also non-sense. When the card can run stable at this clock, why not run it at this clock all the time? Every time it is not at "boost" it is actually throttling. Boost fans have to face this.

With proper cooling, boost should never throttle down. That's why I say the clocks should be fixed, with sufficient cooling.

Of course the bad thing is that even with acceptable temps boost 2.0 cards will throttle (i.e. not run at boost clocks), that's just how it's implemented. And I tell you... you'll notice this downclocking in games. -

I'm running an Intel QS CPU and it's been fine for me. I would take a chance but I don't have the funds. Still waiting for a GPU that I purchased to turn up!

-

I'm just trying to understand GPU Boost, SVL, and since you wrote so much I had to quote parts of what you wrote.

I'm curious: why does it kill overclocking when tons of reviews had no problem overclocking the GTX Titan? Does it throttle and not always leave a straight smooth line? Yes. But the GPU is still operating above stock clocks = overclocked. That's why I said it involves a bit more tweaking when overclocking a GPU running 2.0.

Guru3D overclocked the Titan using Afterburner. Titan stock clocks are 836-876MHz. They overclocked it to 1176MHz, but the GPU moved up and down between that and 1100MHz. Did it throttle? Yes, but it was still a successful overclock way above base clocks.

Why is it underclocking and not overclocking? Why not both? GPU Boost 2.0 overclocks the GPU when it operates below the max power consumption and below the temperature threshold. It starts downclocking when it goes above. I have a hard time seeing how the system doesn't do both.

I'm not sure how that will play out, but I know some of the guys who use Titan still have GPU Boost 2.0 enabled, but they have edited the vbios to get much smoother clocks, i.e. not downclocking and upclocking all the time. So yeah, those who overclock pretty high may want to disable it.

Except that GPU Boost 2.0 is much better at reading available TDP headroom, while the old 1.0 assumes the worst-case scenario, which is why 2.0 allows higher clocks than 1.0 while still staying below the same TDP limit. Will 2.0 mean higher temps on average than 1.0? Yeah, I think so, because the system is always pushing the GPU up to its limit. But with Afterburner or any other third-party software, you can select a lower temperature limit, limiting the heat output from the GPU.

It seems to me that you are only trying to see it from your point of view, overclocking. How does it not offer more performance than the old system for those who don't overclock? They will get max performance out of their GPU at all times.

I think the temperature limit is set pretty high, which avoids that issue, because a notebook should be able to keep the GPU below 90C anyway, even in the summer. But GPU Boost 1.0 is inaccurate compared to 2.0, explained by Anandtech here. So 2.0 is here to stay I think.

That was a Dell-specific problem, a BIOS limit they put there to throttle when it hit a certain temperature. Do you really believe OEMs will put the limit on the 780M that low? Not ever gonna happen. My GTX 680M runs hotter than 72C sometimes and I don't see any throttling. Cards from other OEMs don't throttle either unless they reach the 90s.

Once again, the old method is broken. Read the Anandtech link above. GPU Boost 2.0 is the future whether you like it or not, because it is more accurate and squeezes out more performance than 1.0.

I think the idea behind 2.0 is to allow OEMs to put a decent GPU inside whatever shell they want, ensure that the system doesn't overheat at base clocks, and let 2.0 do the rest of the job. If you build a system that runs max clocks at all times, you will have to deal with a hot GPU that throttles below the specifications set by Nvidia (base clocks) if the paste goes bad, the house is hot, the fan is clogged with dust, etc. With 2.0 the GPU will always stay within the specifications, and the rest is just a bonus. -
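For anyone trying to follow the boost argument above, here is a rough Python sketch of the behaviour both sides describe: step the clock up while the card is under its temperature and power limits, step it down when it goes over, and never drop below base. This is not NVIDIA's actual algorithm. The `BoostLimits` class, the `next_clock` function, the 13MHz step and the 90C / 100W limits are all assumptions made up for illustration; only the 750/850MHz pair comes from the example earlier in the thread.

```python
from dataclasses import dataclass


@dataclass
class BoostLimits:
    # All values are illustrative assumptions, not NVIDIA's real parameters.
    base_mhz: int = 750          # guaranteed clock (from the thread's example)
    boost_mhz: int = 850         # maximum boost clock (from the thread's example)
    step_mhz: int = 13           # hypothetical size of one clock step
    temp_limit_c: float = 90.0   # hypothetical temperature limit
    power_limit_w: float = 100.0 # hypothetical power limit


def next_clock(current_mhz: int, temp_c: float, power_w: float,
               limits: BoostLimits) -> int:
    """Return the clock for the next interval under a simple limit check."""
    over_limit = temp_c > limits.temp_limit_c or power_w > limits.power_limit_w
    if over_limit:
        # Over a limit: step down, but never below the base clock.
        return max(limits.base_mhz, current_mhz - limits.step_mhz)
    # Under both limits: step up towards the maximum boost clock.
    return min(limits.boost_mhz, current_mhz + limits.step_mhz)


limits = BoostLimits()
print(next_clock(850, 92.0, 80.0, limits))  # too hot: steps down from 850
print(next_clock(750, 70.0, 80.0, limits))  # cool: steps up from 750
```

Whether you call the first case "throttling" or the second "boosting" is exactly the disagreement in this thread; the logic is the same either way.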

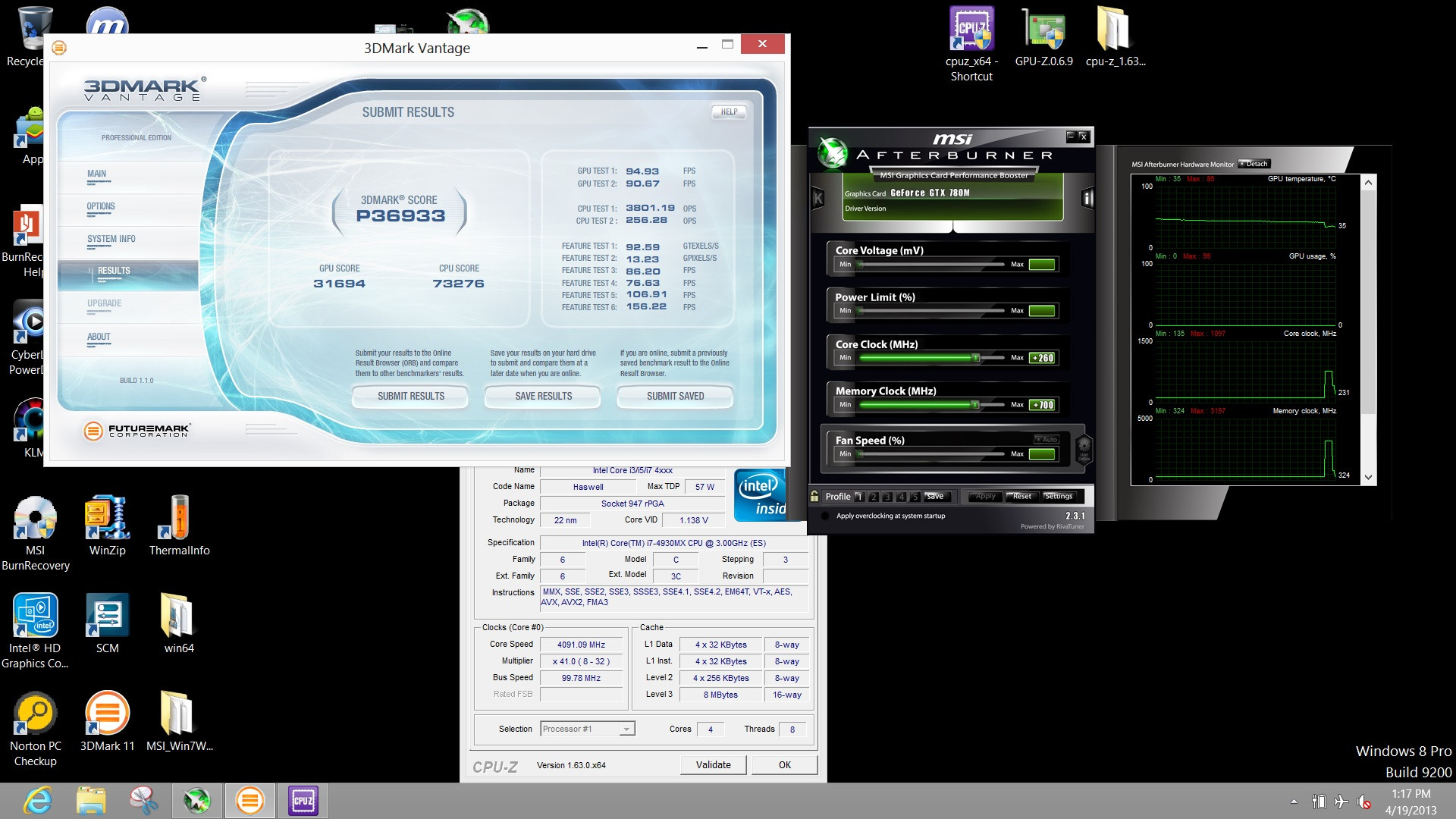

GTX 780M overclocked. :hi2:

[x] Beast confirmed

A dude running a GTX 780M in the MSI GT70 has tried overclocking it. He OC'd the GPU by +260MHz and the VRAM by +700MHz.

You can see from the picture that the GPU was overclocked to 1097MHz (Holy crap!) and the VRAM to 1599MHz (Holy Crap!). Stock is @ 797MHz/1250MHz.

Click on the picture to see bigger:

The stock 780M scores 27142. This overclocked 780M scored 31694.

-

One of the basic premises of boost, that the system is constantly aware of the precise temperature, is a shaky one at best. The DTS in the chips simply cannot give temperature readings with both high precision and responsiveness, so dynamic clock oscillations driven by temperature are bound to be poorly damped, leading to noticeable bumps in performance.

-

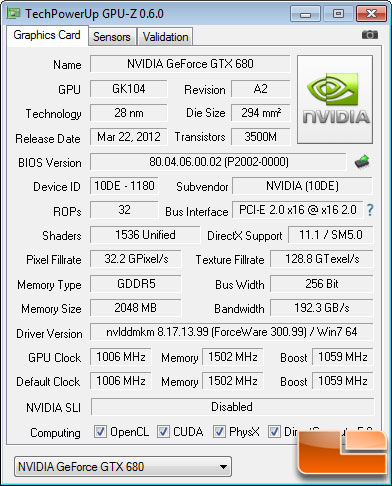

LOL I just found TechInferno's review. Their extreme overclock on the GTX 680M scored 28820. That means that the 780M overclock above beats it by 10%. And this is just the start. Looks really promising.

nVidia GTX 680M Performance Review

![[IMG]](images/storyImages/Vantageb.jpg)

-

780m is certainly a beast!

As for the GPU boost discussion, the main idea is that it gives uneven/unstable performance based on its own specifications. If you overclock under the scheme of boost, you will most likely never maintain the OC'd clocks, or will fluctuate enough to give inconsistent performance.

I prefer to maintain a steady performance, and it will be my job to watch the temps

-

P36933 is equivalent to which desktop GPU?

Would be nice if there was a 3DMark11 too. I mean new card with latest bench right? -

Not sure about desktop but that overall score actually beat my 7970M crossfire

I do wonder how the 4930MX got 73k GPU score without seeming to break a sweat though

-

Maybe a desktop GTX 680. Who knows... this Vantage score, omg!!

-

They ran Physx with the nvidia GPU inflating the CPU score most likely.

-

Meaker@Sager Company Representative

I wonder what memory chips are on the MSI card to let the memory clocks go that high....

-

EDIT: Those are not the correct clocks... neither the +260MHz core nor the +700MHz RAM offset matches up...

Stock 780M clocks are 771MHz core, 1250MHz RAM and 797MHz boost, so AB would have to show 1057 / 1950; instead it reports 1097 / 3197...

EDIT2: I just realized that it keeps reporting the highest clocks that were ever set and not the current highest... so yeah, then that adds up.

-

1250MHz+1250MHz = 2500MHz

+700MHz OC = 3200MHz

797MHz

+260MHz OC = 1057MHz (+GPU Boost 2.0 doing some magic)

? -

I even have some video of the GT70 with 780M if you guys don`t believe me

<iframe width='640' height="360" src="http://www.youtube.com/embed/ofXupG58-e8" frameborder='0' allowfullscreen></iframe> -

Nope, no magic, and RAM is reported 1:1; it uses the same values as GPU-Z etc. But he put the sliders higher before and then reduced them again to +260 / +700. AB lets the red-letter max values stick even if you just set them for a sec...

EDIT: To sum it up that run was at 1057 / 1950 -
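The arithmetic behind that 1057 / 1950 figure is just stock clock plus slider offset, with the memory clock taken 1:1 as described above. A tiny Python sketch, using only the numbers quoted in this thread; the function name `overclocked_clocks` is made up for illustration:

```python
def overclocked_clocks(stock_core_mhz: int, stock_mem_mhz: int,
                       core_offset_mhz: int, mem_offset_mhz: int) -> tuple[int, int]:
    """Add the slider offsets to the stock clocks (memory reported 1:1)."""
    return (stock_core_mhz + core_offset_mhz,
            stock_mem_mhz + mem_offset_mhz)


# Stock 780M: 797MHz boost core, 1250MHz memory; offsets +260 / +700.
core, mem = overclocked_clocks(797, 1250, 260, 700)
print(f"{core} / {mem}")  # 1057 / 1950
```

The 1097 / 3197 shown in the screenshot does not come out of this sum, which fits the explanation that Afterburner was still showing older, higher slider maxima.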

You sure it's not 1057MHz/1600MHz?

EDIT: Nevermind, I think it's me that's maybe mixing up some Afterburner numbers here

EDIT2: can't wait till MSI releases the GT70 with 780M. Gonna be fun

-

Meaker@Sager Company Representative

Max temps show 85C though which is certainly on the toasty side for a 3dmark run.

-

Sigh... XM CPU? Really? Look at GPU score not overall score. Vantage is highly influenced by CPU.

i7-3740QM with 680m @ 1000/2400 = 28731 GPU score, 31694 GPU scores is ~ 10% faster...

Cloudfire makes a good salesman!!! OMG LOOK IT's SSOOOOOO FAST!
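To put the Vantage GPU scores quoted in this thread side by side, here is a small Python sketch of the percentage comparison. The scores are the ones posted above; the helper name `percent_gain` is just for illustration.

```python
def percent_gain(new_score: float, old_score: float) -> float:
    """Relative improvement of new_score over old_score, in percent."""
    return (new_score - old_score) / old_score * 100.0


oc_780m = 31694  # overclocked 780M GPU score from the screenshot

comparisons = {
    "stock 780M (27142)": 27142,
    "680M @ 1000/2400, i7-3740QM (28731)": 28731,
    "TechInferno 680M extreme OC (28820)": 28820,
}

for label, score in comparisons.items():
    print(f"vs {label}: {percent_gain(oc_780m, score):+.1f}%")
```

So both sides are quoting the same roughly 10% OC-vs-OC gap; the disagreement is over whether that is impressive.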

-

Just as expected. Even if you clock it 2ghz on mem, it won't do magic.. This is Vantage though, I really don't get people benching with Vantage to demonstrate GPU power, why not do a 3dmark11 run instead? (please don't tell me, oh look so cool! it scores bzillion!!! then try an even older benchmark... **This is for the guy who initially benched 780m with Vantage**)

-

Right.

I already posted the 3DMark score from the TechInferno test. They used a 2920XM CPU @ 4GHz. The dude who overclocked the 780M used a 4930MX @ 4.1GHz. Not a big difference there.

Look at the GPU score. It scores 10% better than a GTX 680M at 1037MHz/1250MHz, which is pretty much the max you can get out of a 680M without doing modifications. And that was the first OC score we have ever seen from the GTX 780M.

Then we have the memory bandwidth with that overclock. It's way higher than what the GTX 680M is capable of. A pure Performance benchmark at 720p couldn't give a rat's behind about big bandwidth. Games with 4*AA or 8*AA at 1080p, however, love higher bandwidth.

You were super sceptical about the 7600 3DMark11 score, yet it turned out to be true. You didn't believe the GTX 780M would be a 1536-core part with GPU Boost because that wasn't really possible, although I tried to explain to you why that would work. And what do you know, that came true too. You are sceptical about the 780M overclocking past the 680M, yet it already shows signs of beating a heavily overclocked 680M. We haven't even seen game FPS with this overclock yet. Are you sure you really want to continue the 780M bashing? -

Meaker@Sager Company Representative

You need to run 3dmark 11 extreme or the new 3dmark to see the results of memory speed (or do 1080p gaming tests).

-

I bet the memory overclock played a big role in this. 1950MHz on the VRAM is probably causing a lot of heat from the memory. Lowering it to 1500MHz (like most desktop models run at) will most likely give enough bandwidth while still bringing the temps down to 80C or below. 80C is pretty cool for a GPU with that power.

We also don't know if the guy used the turbo mode on the fan. -
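For a rough sense of what those memory clocks mean in bandwidth terms, here is a back-of-the-envelope Python sketch. It rests on two assumptions that are not stated anywhere in this thread: a 256-bit memory bus, and GDDR5 moving four transfers per cycle of the clock figure quoted here (so 1250MHz works out to 5000MT/s). The function name is made up for illustration.

```python
def gddr5_bandwidth_gbs(memory_clock_mhz: float, bus_width_bits: int = 256) -> float:
    """Theoretical peak bandwidth in GB/s.

    Assumes GDDR5 at four transfers per quoted clock cycle and a
    256-bit bus by default; both are assumptions, not thread data.
    """
    transfers_per_second_millions = memory_clock_mhz * 4
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second_millions * bytes_per_transfer / 1000


for clock in (1250, 1500, 1950):
    print(f"{clock}MHz -> {gddr5_bandwidth_gbs(clock):.1f} GB/s")
```

These are theoretical peaks under the stated assumptions; real gains depend on how bandwidth-bound the benchmark or game is, which is why the 1080p tests people are asking for matter.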

Meaker@Sager Company Representative

Well I have to bow out of the speculation now

but have fun everyone.

-

Does this mean you got yourself a 780m!?

-

Meaker@Sager Company Representative

You'll just have to speculate on such a thing as well. Maybe I think the details are confirmed now....

-

Good, hopefully we can get some real details. I don't want to sound so negative about this card, just that some people seem to have unrealistic expectations for its performance improvement over 680m. I do hope it will blow everything out of the water, but also know these early Chinese screen grabs aren't always accurate to the end item product. Considering the potential the 680m has with overclocking, I feel this card will be more in line with what the 680m *should* have been, considering how easily it overclocks and temps remain low.

Any idea when official specs will be announced?

Hopefully if I get a review machine with 780m, I may change my tune a bit if it's truly 30%+ improvement over 680m.

I do appreciate the excitement Cloudfire has for the product, he's the NBR cheerleader, lol. But it also makes it sound like it's going to be a desktop 680 in a 100W TDP form factor. -

The link I made to Vantage with 680m was run at 1000/1200 not 1037/1250 with i7-3740QM @ 3.9GHz and still 10% (my machine). My point is that Vantage is not a good indicator of performance, and it's not even that impressive of an overclocked 780m vs 680m, yet it was touted as "BEAST CONFIRMED". If the best it can do is 10% OC vs OC then it's a sad upgrade really. And there's no indication of cooling on that thing, if it was sitting on a bucket over dry ice, or if it was just stock cooling. I'd throw that benchmark away because it really means nothing.

I wasn't skeptical about it overclocking past 680m. I just said the 780m likely has less overclock headroom, meaning overclocked GPU clocks at stock voltage won't be significantly different. Plus the official TDP of this card has not been disclosed yet. It may still be more than 100W, not to mention overclock power and dissipation requirements. Show me the power drawn during the benchmark and compare with 680m, then I might be convinced.

Show me a run with 3DMark 11 at 1920x1080 (X Score) and 3DMark Firestrike at 1080p with Extreme preset, along with specs of the machine, cooling used, and power drawn from the system. Then I'll have confidence in your cheerleading.

Call me a skeptic, but my whole point is I don't believe everything I see and then preach it as gospel without some evidence to back it up. While some or all may be true (or not) there's still no evidence of machine used, cooling used, or even official results or specs. You may be lucky, they may be right, but like Eurocom, things aren't always true to what they post on the internet. -

I'm just wondering, do MSI G series laptops have under-utilization problems, since you can't disable Optimus like you can on AWs (MUX)? I.e. the GT70 with 780m.

-

The fact of the matter is that if we can throw the core clock somewhere around 950 and get memory to 1500, we *WILL* have a GTX 680 in 100W TDP form factor. Assuming it does not overdraw from the PSU. Since someone already posted a benchie at over 1000 core and WAY over 1500 memory, supposedly stable, without needing a huge power supply bump, I do believe the latter settings are quite easily attainable with a good old foil mod and/or a guitar pick mod like Prema used. I still have to figure out a way for Mythlogic to implant a guitar pick in my configuration before they ship it to me, because I'm just a little bit afraid I'll screw it up on SLI 780Ms XD

-

The GT70 has only 1 fan for both GPU and CPU, correct? Then the temp results are awesome for a heavily OC'ed GPU + XM CPU. Maybe the guy used some extra cooling...

Anyway, I agree that we have to see some 3dmark11 extreme runs or game tests to confirm if it's a real beast indeed. Metro 2033: Last Light benchmark?) -

HTWingNut: Oh I do believe we have a GTX 680 in our notebooks this time. Look at the clocks, look at the bandwidth. Beats the stock GTX 680. So yeah, I'm gonna be a cheerleader for this GPU. You'd be stupid not to.

![[IMG]](images/storyImages/oBK34aH.jpg)

Stock cooling on the GT70. No modifications done, I think. Not sure if he ran the fan on turbo mode or not though. I'm pretty certain that this GPU will stomp an overclocked 680M in gaming and in Extreme benchmarking. Just look at the bandwidth and the clocks on that GPU. Looks like GPU Boost has headroom to even clock it to 1222MHz lol

MSI has made some modifications to the GT70 with the GTX 780M btw. They have now attached the CPU and GPU, so the cooling has improved compared to older models.

![[IMG]](images/storyImages/VxJgF.jpg)

-

Meaker@Sager Company Representative

Let's just say it's going to be good, guys.

Optimus has no real utilisation issues, just a flat 2-5% drop over dedicated mode.

It will be interesting to see who uses what memory chips. -

1) Does that mean GX60/70 with AMD's card will have more utilization problems?

2) Cloudfire where did you get these pics?

![[IMG]](images/storyImages/oBK34aH.jpg)

![[IMG]](images/storyImages/VxJgF.jpg)

-

Meaker@Sager Company Representative

GX60 and GX70 are in a different bracket of performance to be honest and a comparison is a little silly.

-

So bring it Meaker! Bring it! When will you have your 780m sample?

-

To answer your message Observer:

MSI GT70 does not have a MUX like Alienware. You cannot disable Optimus.

But Optimus does not have any performance hit or problems anyway, so you don't need the option to disable it. So that's a "no" to your question about whether the GT70 has under-utilization problems. That was with Enduro.

Why are you excited? You can just overclock your 680M to reach the same performance as a 780M right? The clocks and the much better memory on 780M is just a lie

-

Okay I'll be more specific. Imagine GX70's new config was also available on GX60 (so only size and weight differences). Will it have more utilization problems compared to Nvidia's optimus?

-

You still don't understand. It's not the 780m or tech I'm questioning, it's the source material. I'm excited about the tech. And that I can get results from a known reliable source is all. Throwing up screenshots knowing nothing else is as interesting as showing the score of a sports game without even knowing who is playing.

I`m upgrading, are you? (GTX 780M review inside)

Discussion in 'Gaming (Software and Graphics Cards)' started by Cloudfire, May 8, 2013.