Exactly, such a big difference in the core frequency should result in a distinctly higher GPU score... which it apparently doesn't. Something doesn't add up here. Fermi was definitely easier to tweak.

-

Not if the cores are being bottlenecked by the memory, right? Maybe the extra 200 is needed to feed the extra 85MHz or whatever it was?

-

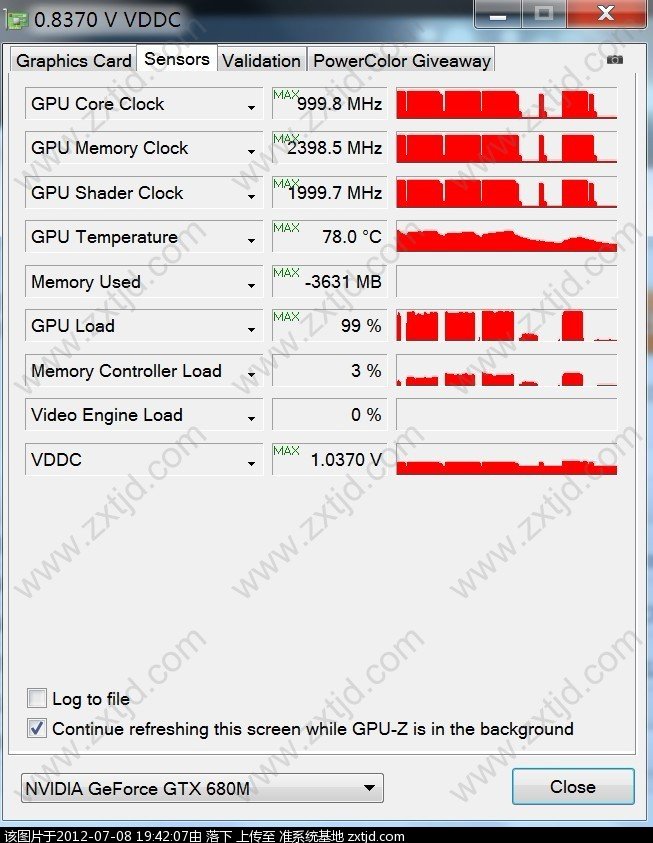

If he showed the memory controller load we'd have more info on this lol

-

-

Shows current but not max I think

-

That's only current, need max to know what's happening.

-

looks to be about 25 percent at best...

-

Oh yeah, you are right, it's the current.

-

Either way, it's still weird to see 1000/2300 (GPU score 7496) perform worse than 893/2497 (GPU score 7503).
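To put numbers on why this looks odd, here's a quick Python sketch that just works out the relative differences between the two runs, using only the clocks and GPU scores quoted above (the dictionary layout and the `pct_change` helper are made up for illustration). It doesn't model the GPU or prove a memory bottleneck; it only shows that roughly 12% more core clock alongside roughly 8% less memory clock netted essentially no change in score.

```python
# Clock pairs (core/memory, as quoted in the posts) and 3DMark11 GPU scores
# for the two runs being compared. Layout is illustrative only.
runs = {
    "1000/2300": {"core": 1000, "mem": 2300, "gpu_score": 7496},
    "893/2497": {"core": 893, "mem": 2497, "gpu_score": 7503},
}


def pct_change(new: float, old: float) -> float:
    """Percentage change going from `old` to `new`."""
    return (new - old) / old * 100.0


high_core = runs["1000/2300"]  # higher core clock, lower memory clock
high_mem = runs["893/2497"]    # lower core clock, higher memory clock

# How the 1000/2300 run differs from the 893/2497 run.
core_delta = pct_change(high_core["core"], high_mem["core"])
mem_delta = pct_change(high_core["mem"], high_mem["mem"])
score_delta = pct_change(high_core["gpu_score"], high_mem["gpu_score"])

print(f"core clock:   {core_delta:+.1f}%")
print(f"memory clock: {mem_delta:+.1f}%")
print(f"GPU score:    {score_delta:+.2f}%")
```

Run as-is it prints +12.0% for core, -7.9% for memory and -0.09% for the GPU score, which fits (but doesn't confirm) the idea that the memory clock is what's holding the higher core clock back.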

-

Have you talked to the guy who makes NiBiTor? I bet he could help you out, or svl7?

-

Maybe there's some throttling involved too?

-

Oh well, you probably know your way around these things. I'm sure you will figure it out.

http://4.bp.blogspot.com/-jnnAebJCe0c/T1Q6KuQP4LI/AAAAAAAABto/Ek4y11yiI-U/s1600/challenge-accepted.jpg

-

So will the 3720QM give better performance from overclocking than the 3610QM?

-

That's normally a yes

-

That's what I'm thinking. Also, at the same clocks the modded vBIOS shows a performance degradation versus the stock vBIOS, about 300 points lower in the 3DMark11 test. So basically my GTX 680M on svl7's modded vBIOS at 893/2497 scores higher than his 1000/2300, and if the stock vBIOS were able to reach 893/2497, it should score even higher than mine.

-

I meant by how much? FPS or percentage-wise?

-

It depends on the game and how heavily it relies on the CPU.

-

There really aren't any games that are CPU bound with the power offered by the 3610QM, nor will there likely be any time soon. These are just absurdly powerful chips.

-

For gaming or using Photoshop or Adobe Premiere or CAD? Zero. None of the games or CAD/Adobe products will benefit since, as said before, the 3610QM is absurdly powerful.

The only time you will truly see a difference is if you are doing some serious encoding, rendering, compiling, etc. But you would already know this if that's what you were planning to do.

The fact that you have to ask tells me you're not doing anything close to utilizing all that the 3610QM has to offer.

-

It should make a significant difference when playing StarCraft II and FFXIV (in town). But yeah, not all games are CPU-heavy, so it's going to depend on what games the user likes to play.

-

Sorry to correct you, but the source states that they did NOT use identical systems for the consumption tests, so the results should be viewed as "lockere Richtwerte" (which translates roughly to rough estimates or guideline values).

link: Test: NVIDIA GeForce GTX 680M im Schenker P702

Still amazing to see that the 680M is pretty much on par with the 670M under peak load!

Btw, it's weird that they show such a low 3DMark11 score for the 680M in that review...

-

On par with the 670M? Or do you mean the GTX 670 desktop GPU?

-

He means in power consumption.

-

Yep, I meant wattage, not performance.

vs. 670M not 670 desktop

-

Sh*t just got real on the GTX 680M "the truth" thread. Grab popcorn, the ending was epic lol

teh intarnetz iz srs bidness.

-

Oh ok, didn't know they were estimates.

Here is the P170EM with identical systems. Well, the one difference is 2670QM vs 3720QM, but we can take away 4-5 W for the 680M system to compensate.

![[IMG]](images/storyImages/o4udM.jpg)

http://www.notebookcheck.net/Review-GeForce-GTX-680M-vs-Radeon-HD-7970M.77110.0.html

http://www.notebookcheck.net/Review-Clevo-P170EM-Notebook.73442.0.html

218W for the 675M minus 178W for 680M = 40W

So not quite 65W less, but around 40W less than the 675M. Not bad.

-

But twice as powerful as the 675M. Can't wait for mine to arrive.

-

Well hell... Cloudfire done went and got himself banned?

-

I think it's only a one-day ban. In his defense, it was over a 680M vs 7970M thread, which is something we have all been banned for at some point.

-

Well, at the rate things were going, either all of the people bantering with him would have to go or he would. Kind of sucks though, since he had spent so much time on this forum.

-

I was kind of watching Cloudfire; he jumped on anything new about NVIDIA haha. Sad to see him banned because of trolls.

-

learn2changeIPaddresslulz

-

The account is banned, not the IP address.

Still waiting for you to upgrade to a 28nm beast!

That review itself is bizarre. Read the whole article and not just snippets. It's weird.

Haven't tested it since I don't play FF XIV (worst reviewed MMORPG from a major developer/publisher, probably ever), and I don't plan to re-install SC2, but I highly doubt that. The GPU will bottleneck before the 3610QM will. The physics, AI, etc. are not that intensive in SC2. SC2 was made to run on the lowest common denominator like EVERY Blizzard game, and so are MMORPGs: more people playing = more money. The 3610QM is just so much more powerful than any CPU was back in the day when people used to say WoW or whatever MMO was CPU bound. The 3610QM is well beyond that tired old saying. Heck, even in BF3 it was proven that a fast dual core was just as good as a quad for that game, despite the legions of hardware junkies claiming that game requires a quad.

-

The Enduro 7970M in Clevos is a bottleneck.

-

Ok, so when are you getting a 680M? hackness here is getting great results with the 680M.

-

Just ordered it this morning; I had to wait for my NP9150 w/ 7970M to get refunded lmao. Will benchmark 3DMark11 and BF3 first thing after installing Win 7.

-

Not really. In FFXIV, when I'm in a town and the 3610QM drops out of Turbo Boost, I lose at least 10 fps the next second just from that. It also depends on what's on your screen: in FFXIV the number of characters is closely tied to CPU load, and when there are many characters on screen and the 3610QM drops out of Turbo back down to 2.3GHz, I can tell you the fps in town is pretty bad.

-

Uh huh, so this would be an issue with the game then. These morons made a terrible game that doesn't support multi-threading properly, so you need Turbo on a single thread? Wow, that's just horrible. In that case, I'd just bail and boycott that game.

Spend more time playing BF3 when you get it then. Forget 3DMark 11. Have fun.

-

lol. It seems like you either didn't play it or haven't tried FFXIV since the job patch. After they fired the original team, the new team has been doing a good job with updates so far.

Also, it's not single-threaded; it's multi-threaded, which is why you need 3.0GHz x 4 to run smoothly in town. The 3610QM's non-Turbo Boost clock really isn't enough for that.

-

That's not how Intel Turbo works.

-

Turbo Boost in Ivy Bridge CPUs is different from 1st gen now. If TDP and temperature allow, it'll overclock all 4 cores to a certain level, 3.1GHz for the 3610QM for example. When the 3610QM is at 3.1GHz on all four cores, the game runs fine, but when it drops out of Turbo Boost the FPS turns ugly.

-

Is there a way to keep the CPU in Turbo Boost mode the whole time while gaming? Will ThrottleStop do that?

And LoL @ people getting banned left and right. Mods are on fire today.

-

Looks like someone will soon figure out what I was talking about with changing IP addresses.

![[IMG]](images/storyImages/half-trollface_copy.png)

-

ThrottleStop 5.00 beta seems to be able to keep it Turbo boosted; I've only just started using it. My scores in most benchmarks went up too.

Edit: If you do try it, don't enable the GPU temperature reading. Monitoring the dedicated GPU's temperature counts as using the dedicated GPU, which means your VGA fan will be spinning on and off all the time. Any GPU monitoring program will prevent the dedicated GPU from shutting down, even on battery.

-

902-1100.jpg

-

-

Thanks. If you can, could you post the Max Memory Controller Load for 3DMark11 @902-1100, please?

-

Please wait

-

or you will follow that guy as well...

-

Is it right?

-

I've been trying to say that the memory controller portion of GPU-Z may not work, and I would not go by that if I were you.

edit:

side note:

Why do you post the voltage in the top left corner of the screen?

P150EM Upgrading from 675M to 680M!

Discussion in 'Sager and Clevo' started by hackness, Jun 28, 2012.